Computers will soon be able to simulate the functioning of a human brain. In the near future, artificial superintelligence could become vastly more intellectually capable and versatile than humans. But could machines ever truly experience the whole range of human feelings and emotions, or are there technical limitations ?

In a few decades, intelligent and sentient humanoid robots will wander the streets alongside humans, work with humans, socialize with humans, and perhaps one day will be considered individuals in their own right. Research in artificial intelligence (AI) suggests that intelligent machines will eventually be able to see, hear, smell, sense, move, think, create and speak at least as well as humans. They will feel emotions of their own and probably one day also become self-aware.

There may not be any reason per se to want sentient robots to experience exactly all the emotions and feelings of a human being, but it may be interesting to explore the fundamental differences in the way humans and robots can sense, perceive and behave. Tiny genetic variations between people can result in major discrepancies in the way each of us thinks, feels and experiences the world. If we appear so diverse despite the fact that all humans are on average 99.5% identical genetically, even across racial groups, how could we possibly expect sentient robots to feel the exact same way as biological humans ? There could be striking similarities between us and robots, but also drastic divergences on some levels. This is what we will investigate below.

MERE COMPUTER OR MULTI-SENSORY ROBOT ?

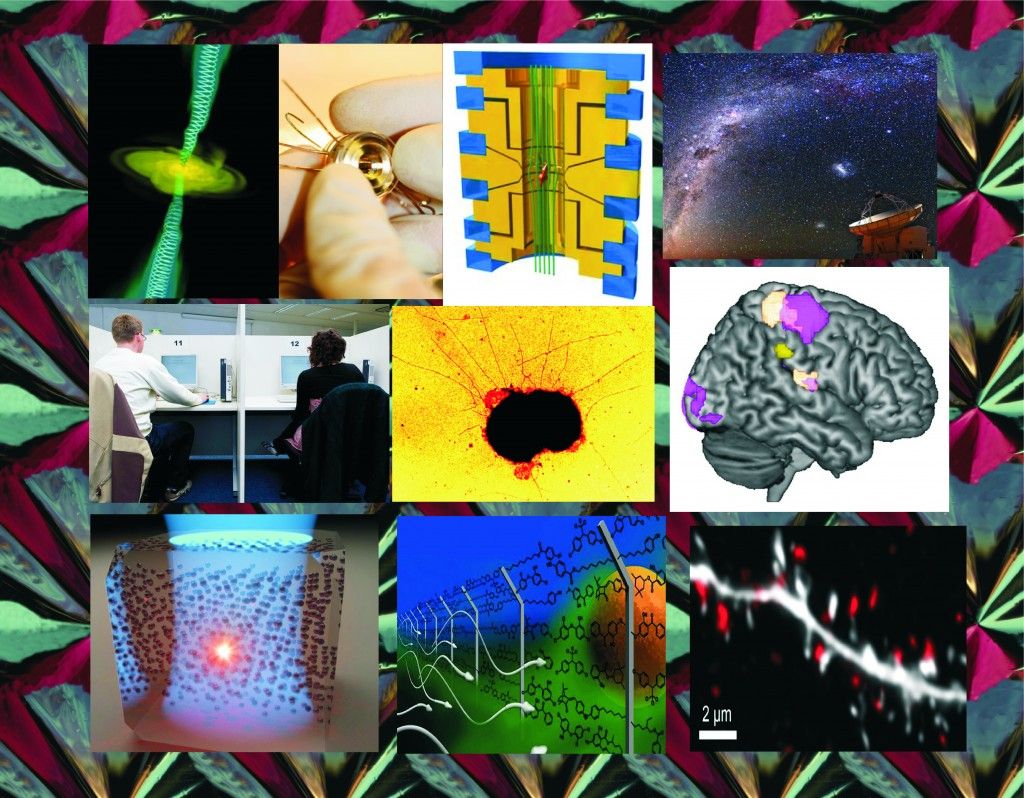

Computers are undergoing a profound mutation at the moment. Neuromorphic chips have been modelled on the way the human brain works, reproducing its massively parallel neurological processes using artificial neural networks. This will enable computers to process sensory information like vision and audition much more like animals do. Considerable research is currently devoted to creating a functional computer simulation of the whole human brain. The Human Brain Project, a ten-year European initiative launched in 2013, is aiming to achieve this. Does that mean that computers will finally experience feelings and emotions like us ? Surely if an AI can simulate a whole human brain, then it becomes a sort of virtual human, doesn’t it ? Not quite. Here is why.

There is an important distinction to be made from the outset between an AI residing solely inside a computer with no sensors at all, and an AI that is equipped with a robotic body and sensors. A computer alone would have a far more limited range of emotions, as it wouldn’t be able to physically interact with its environment. The more sensory feedback a machine can receive, the wider the range of feelings and emotions it will be able to experience. But, as we will see, there will always be fundamental differences between the type of sensory feedback that a biological body and a machine can receive.

Here is an illustration of how limited an AI is emotionally without a sensory body of its own. In animals, fear, anxiety and phobias are evolutionary defense mechanisms aimed at raising vigilance in the face of danger. That is because our bodies work with biochemical signals involving hormones and neurotransmitters sent by the brain to prompt a physical action when our senses perceive danger. Computers don’t work that way. Without sensors feeding them information about their environment, computers wouldn’t be able to react emotionally.

Even if a computer could remotely control machines like robots (e.g. through the Internet) that are endowed with sensory perception, the computer itself wouldn’t necessarily care if the robot (a discrete entity) were harmed or destroyed, since that would have no physical consequence for the AI itself. An AI could fear for its own well-being and existence, but how is it supposed to know that it is in danger of being damaged or destroyed ? It would be in the same position as a person who is blind and deaf and whose somatosensory cortex has been destroyed. Without feeling anything about the outside world, how could it perceive danger ? That problem disappears once the AI is given at least one sense, like a camera to see what is happening around it. Now if someone comes toward the computer with a big hammer, it will be able to fear for its existence !

WHAT CAN MACHINES FEEL ?

In theory, any neural process can be reproduced digitally in a computer, even though the brain is mostly analog. This is hardly a concern, as Ray Kurzweil explained in his book How to Create a Mind. However, it does not always make sense to try to replicate in a machine everything a human being feels.

While sensory feelings like heat, cold or pain could easily be felt from the environment if the machine is equipped with the appropriate sensors, this is not the case for other physiological feelings like thirst, hunger, and sleepiness. These feelings alert us to the state of our body and are normally triggered by hormones such as vasopressin, ghrelin, or melatonin. Since machines have neither a digestive system nor hormones, it would be downright nonsensical to try to emulate such feelings.

Emotions do not arise for no reason. They are either a reaction to an external stimulus, or a spontaneous expression of an internal thought process. For example, we can be happy or joyful because we received a present, got a promotion or won the lottery. These are external causes that trigger the emotions inside our brain. The same emotion can be achieved as the result of an internal thought process. If I manage to find a solution to a complicated mathematical problem, that could make me happy too, even if nobody asked me to solve it and it does not have any concrete application in my life. It is a purely intellectual problem with no external cause, but solving it confers satisfaction. The emotion could be said to have arisen spontaneously from an internalized thought process in the neocortex. In other words, solving the problem in the neocortex causes the emotion in another part of the brain.

An intelligent computer could also generate some emotions based on its own thought processes, just like the joy or satisfaction experienced by solving a mathematical problem. In fact, as long as it is allowed to communicate with the outside world, there is no major obstacle to a computer feeling true emotions of its own like joy, sadness, surprise, disappointment, fear, anger, or resentment, among others. These are all emotions that can be produced by interactions through language (e.g. reading, online chatting) with no need for physiological feedback.

Now let’s think about how and why humans experience a sense of well-being and peace of mind, two emotions far more complex than joy or anger. Both occur when our physiological needs are met, when we are well fed, rested, feel safe, don’t feel sick, and are on the right track to pass on our genes and keep our offspring secure. These are compound emotions that require other basic emotions as well as physiological factors. A machine has no physiological needs, cannot get sick and does not need to worry about passing on its genes to posterity, and will therefore have no reason to feel that complex emotion of ‘well-being’ the way humans do. For a machine, well-being may exist, but in a much more simplified form.

Just like machines cannot reasonably feel hunger because they do not eat, replicating emotions on machines with no biological body, no hormones, and no physiological needs can be tricky. This is the case with social emotions like attachment, sexual emotions like love, and emotions originating from evolutionary mechanisms set in the (epi)genome. This is what we will explore in more detail below.

FEELINGS ROOTED IN THE SENSES AND THE VAGUS NERVE

What really distinguishes intelligent machines from humans and animals is that the former do not have a biological body. This is essentially why they could not experience the same range of feelings and emotions as we do, since many of them inform us about the state of our biological body.

An intelligent robot with sensors could easily see, hear, detect smells, feel an object’s texture, shape and consistency, feel pleasure and pain, heat and cold, and the like. But what about the sense of taste ? Or the effects of alcohol on the mind ? Since machines do not eat, drink and digest, they wouldn’t be able to experience these things. A robot designed to socialize with humans would be unable to understand and share the feelings of gastronomical pleasure or inebriety with humans. It could have a theoretical knowledge of them, but not first-hand knowledge from an actually felt experience.

But the biggest obstacle to simulating physical feelings in a machine comes from the vagus nerve, which controls such varied things as digestion, ‘gut feelings’, heart rate and sweating. When we are scared or disgusted, we feel it in our guts. When we are in love we feel butterflies in our stomach. That’s because of the way our nervous system is designed. Quite a few emotions are felt through the vagus nerve connecting the brain to the heart and digestive system, so that our body can prepare to court a mate, fight an enemy or escape in the face of danger, by shutting down digestion, raising adrenaline and increasing heart rate. Feeling disgusted can help us vomit something that we have swallowed and shouldn’t have.

Strong emotions can affect our microbiome, the trillions of gut bacteria that help us digest food and that contribute to the production of about 90% of the body’s serotonin and 50% of its dopamine. The thousands of species of bacteria living in our intestines can vary quickly based on our diet, but it has been demonstrated that even emotions like stress, anxiety, depression and love can strongly affect the composition of our microbiome. This is very important because of the essential role that gut bacteria play in maintaining our brain functions. The relationship between gut and brain works both ways. The presence or absence of some gut bacteria has been linked to autism, obsessive-compulsive disorder and several other psychological conditions. What we eat actually influences the way we think too, by changing our gut flora, and therefore also the production of neurotransmitters. Even our intuition is linked to the vagus nerve, hence the expression ‘gut feeling’.

Without a digestive system, a vagus nerve and a microbiome, robots would miss a big part of our emotional and psychological experience. Our nutrition and microbiome influence our brain far more than most people suspect. They are one of the reasons why our emotions and behaviour are so variable over time (in addition to maturity; see below).

SICKNESS, FATIGUE, SLEEP AND DREAMS

Another key difference between machines and humans (or animals) is that our emotions and thoughts can be severely affected by our health, physical condition and fatigue. Irritability is often an expression of mental or physical exhaustion caused by a lack of sleep or nutrients, or by a situation that puts excessive stress on mental faculties and increases our need for sleep and nutrients. We could argue that computers may overheat if used too intensively, and may also need to rest. That is not entirely true if the hardware is properly designed with a super-efficient cooling system and a steady power supply. New types of nanochips may not produce enough heat to cause any overheating problem at all.

Most importantly machines don’t feel sick. I don’t mean just being weakened by a disease or feeling pain, but actually feeling sick, such as indigestion, nausea (motion sickness, sea sickness), or feeling under the weather before tangible symptoms appear. These aren’t enviable feelings of course, but the point is that machines cannot experience them without a biological body and an immune system.

When tired or sick, not only do we need to rest to recover our mental faculties and stabilize our emotions, we also need to dream. Dreams are used to clear our short-term memory cache (in the hippocampus), to replenish neurotransmitters, to consolidate memories (by myelinating synapses during REM sleep), and to let go of the day’s emotions by letting our neurons fire freely. Dreams also allow a different kind of thinking, free of cultural or professional taboos, which increases our creativity. This is why we often come up with great ideas or solutions to our problems during our sleep, and notably during the lucid dreaming phase.

Computers cannot dream and wouldn’t need to because they aren’t biological brains with neurotransmitters, stressed-out neurons and synapses that need to get myelinated. Without dreams, an AI would nevertheless lose an essential component of feeling like a biological human.

EMOTIONS ROOTED IN SEXUALITY

Being in love is an emotion that brings a male and a female individual (save for some exceptions) of the same species together in order to reproduce and raise their offspring until they grow up. Sexual love is caused by hormones, but is not merely the product of hormonal changes in our brain. It involves changes in the biochemistry of our whole body and can even lead to important physiological effects (e.g. on morphology) and long-term behavioural changes. Clearly sexual love is not ‘just an emotion’ and is not purely a neurological process either. Replicating the neurological expression of love in an AI would not simulate the whole emotion of love, but only one of its facets.

Apart from the issue of reproducing the physiological expression of love in a machine, there is also the question of causation. There is a huge difference between an artificially implanted/simulated emotion and one that is capable of arising by itself from environmental causes. People can fall in love for a number of reasons, such as physical attraction and mental attraction (shared interests, values, tastes, etc.), but one of the most important in the animal world is genetic compatibility with the prospective mate. Individuals who possess very different immune systems (HLA genes), for instance, tend to be more strongly attracted to each other and feel more ‘chemistry’. We could imagine that a robot with a sense of beauty and values could appreciate the looks and morals of another robot or a human being and even feel attracted (platonically). Yet a machine couldn’t experience the ‘chemistry’ of sexual love because it lacks the hormones, genes and other biochemical markers required for sexual reproduction. In other words, robots could have friends but not lovers, and that makes sense.

A substantial part of the range of human emotions and behaviours is anchored in sexuality. Jealousy is another good example. Jealousy is intricately linked to love. It is the fear of losing one’s loved one to a sexual rival. It is an innate emotion whose only purpose is to maximize our chances of passing on our genes through sexual reproduction by warding off competitors. Why would a machine, which does not need to reproduce sexually, need to feel that ?

One could wonder what difference it makes whether a robot can feel love or not. They don’t need to reproduce sexually, so who cares ? If we need intelligent robots to work with humans in society, for example by helping to take care of the young, the sick and the elderly, they could still function as social individuals without feeling sexual love, wouldn’t they ? In fact you may not want a humanoid robot to become a sexual predator, especially if working with kids ! Not so fast. Without a basic human emotion like love, an AI simply cannot think, plan, prioritize and behave the same way as humans do. Their way of thinking, planning and prioritizing would rely on completely different motivations. For example, young human adults spend considerable time and energy searching for a suitable mate in order to reproduce.

A robot endowed with an AI of human-level or greater intelligence, but lacking the need for sexual reproduction, would behave, plan and prioritize its existence very differently from humans. That is not necessarily a bad thing, for a lot of conflicts in human society are caused by sex. But it also means that it could become harder for humans to predict the behaviour and motivation of autonomous robots, which could be a problem once they become more intelligent than us in a few decades. The bottom line is that by lacking just one essential human emotion (let alone many), intelligent robots could have behaviours, priorities and morals that diverge widely from those of humans. They could be different in a good way, but we can’t know that for sure at present since they haven’t been built yet.

TEMPERAMENT AND SOCIABILITY

Humans are social animals. They typically, though not always (e.g. some types of autism), seek to belong to a group, make friends, share feelings and experiences with others, gossip, seek approval or respect from others, and so on. Interestingly, a person’s sociability depends on a variety of factors not found in machines, including gender, age, level of confidence, health, well-being, genetic predispositions, and hormonal variations.

We could program an AI to mimic a certain type of human sociability, but it wouldn’t naturally evolve over time with experience and environmental factors (food, heat, diseases, endocrine disruptors, microbiome). Knowledge can be learned, but spontaneous reactions to environmental factors cannot.

Humans tend to be more sociable when the weather is hot and sunny, when they drink alcohol and when they are in good health. A machine has no need to react like that, unless once again we intentionally program it to resemble humans. But even then it couldn’t feel everything we feel as it doesn’t eat, doesn’t have gut bacteria, doesn’t get sick, and doesn’t have sex.

MATERNAL WARMTH AND FEELING OF SAFETY IN MAMMALS

Humans, like all mammals, have an innate need for maternal warmth in childhood. In Harry Harlow’s classic experiments, newborn rhesus monkeys were taken away from their biological mothers and placed in a cage with two dummy mothers. One of them was warm, fluffy and cosy, but did not have milk. The other one was hard, cold and uncomfortable but provided milk. The baby monkeys consistently chose the cosy one, demonstrating that the need for comfort and safety trumps nutrition in infant mammals. Likewise, humans deprived of maternal (or paternal) warmth and care as babies almost always experience psychological problems growing up.

In addition to childhood care, humans also need the feeling of safety and cosiness provided by the shelter of one’s home throughout life. Not all animals are like that. Even as hunter-gatherers or pastoralist nomads, all Homo sapiens need a shelter, be it a tent, a hut or a cave.

How could we expect that kind of reaction and behaviour in a machine that does not need to grow from babyhood to adulthood, cannot know what it is to have parents or siblings, does not need to feel reassured by maternal warmth, and does not have a biological compulsion to seek shelter ? Without those feelings, it is extremely doubtful that a machine could ever truly understand and empathize completely with humans.

These limitations mean that it may be useless to try to create intelligent, sentient and self-aware robots that truly think, feel and behave like humans. Reproducing our intellect, language, and senses (except taste) is the easy part. Then comes consciousness, which is harder but still feasible. But since our emotions and feelings are so deeply rooted in our biological body and its interaction with its environment, the only way to reproduce them would be to reproduce a biological body for the AI. In other words, we are not talking about creating a machine anymore, but about genetically engineering a new living being, or using neural implants for existing humans.

MACHINES DON’T MATURE

The way humans experience emotions evolves dramatically from birth to adulthood. Children are typically hyperactive and excitable and are prone to making rash decisions on impulse. They cry easily and have difficulties containing and controlling their emotions and feelings. As we mature, we learn more or less successfully to master our emotions. Actually, controlling one’s emotions gets easier over time because with age the number of neurons in the brain decreases, emotions get blunter and vital impulses weaker.

The expression of one’s emotions is heavily regulated by culture and taboos. That’s why speakers of Romance languages will generally express their feelings and affection more freely than, say, Japanese or Finnish people. Would intelligent robots also follow one specific human culture, or create a culture on their own ?

Sex hormones also influence the way we feel and express emotions. Male testosterone makes people less prone to emotional display, more rational and cold, but also more aggressive. Female estrogens increase empathy, affection and maternal instincts of protection and care. A good example of the role of biology in emotions is the way women’s hormonal cycles (and the resulting menstruation) affect their emotions. One of the reasons that children process emotions differently than adults is that they have lower levels of sex hormones. As people age, hormonal levels decrease (not just sex hormones), making us more mellow.

Machines don’t mature emotionally, do not go through puberty, do not have hormonal cycles, and do not undergo hormonal change based on their age, diet and environment. Artificial intelligence could learn from experience and mature intellectually, but not mature emotionally like a child becoming an adult. This is a vital difference that shouldn’t be underestimated. Program an AI to have the emotional maturity of a 5-year-old and it will never grow up. Children (especially boys) cannot really understand the reason for their parents’ anxiety toward them until they grow up and have children of their own, because they lack the maturity and sexual hormones associated with parenthood.

We could always run software emulating changes in AI maturity over time, but those changes would not be the result of experiences and interactions with the environment. It may not be useful to create robots that mature like us, but the argument debated here is whether machines could ever feel exactly like us or not. This argument is not purely rhetorical. Some transhumanists wish to be able one day to upload their mind onto a computer and transfer their consciousness (which may not be possible for a number of reasons). Assuming that it becomes possible, what if a child or teenager decides to upload his or her mind and lead a new robotic existence ? One obvious problem is that this person would never fulfill his/her potential for emotional maturity.

The loss of our biological body would also deprive us of our capacity to experience feelings and emotions bound to our physiology. We may be able to keep those already stored in our memory, but we may never dream, enjoy food, or fall in love again.

SUMMARY & CONCLUSION

What emotions could machines experience ?

Even though many human emotions are beyond the range of machines due to their non-biological nature, some emotions could very well be felt by an artificial intelligence. These include, among others:

- Joy, satisfaction, contentment

- Disappointment, sadness

- Surprise

- Fear, anger, resentment

- Friendship

- Appreciation for beauty, art, values, morals, etc.

What emotions and feelings would machines not be able to experience ?

The following emotions and feelings could not be wholly or faithfully experienced by an AI, even with a sensing robotic body, beyond mere implanted simulation.

- Hunger, thirst, drunkenness, gastronomical enjoyment

- Various feelings of sickness, such as nausea, indigestion, motion sickness, sea sickness, etc.

- Sexual love, attachment, jealousy

- Maternal/paternal instincts towards one’s own offspring

- Fatigue, sleepiness, irritability

- Dreams and associated creativity

In addition, machine emotions would run up against the following issues, which would prevent machines from feeling and experiencing the world truly like humans.

- Machines wouldn’t mature emotionally with age.

- Machines don’t grow up and don’t go through puberty to pass from a relatively asexual childhood stage to a sexual adult stage.

- Machines cannot fall in love (+ associated emotions, behaviours and motivations) as they aren’t sexual beings.

- Being asexual, machines are genderless and therefore lack associated behaviour and emotions caused by male and female hormones.

- Machines wouldn’t experience gut feelings (fear, love, intuition).

- Machine emotions, intellect, psychology and sociability couldn’t vary with nutrition and microbiome, hormonal changes, or environmental factors like the weather.

It is not completely impossible to bypass these obstacles, but that would require creating a humanoid machine that not only possesses human-like intellectual faculties, but also has an artificial body that can eat and digest, with a digestive system connected to the central microprocessor in the same way as our vagus nerve is connected to our brain. That robot would also need a gender and a capacity to have sex and feel attracted to other humanoid robots or humans based on a predefined programming that serves as an alternative to a biological genome to create a sense of ‘sexual chemistry’ when matched with an individual with a compatible ‘genome’. It would necessitate artificial hormones to regulate its hunger, thirst, sexual appetite, homeostasis, and so on.

Although we lack the technology and in-depth knowledge of the human body to consider such an ambitious project any time soon, it could eventually become possible. One could wonder whether such a magnificent machine could still be called a machine, or simply an artificially made living being. I personally don’t think it should be called a machine at that point.

———

This article was originally published on Life 2.0.