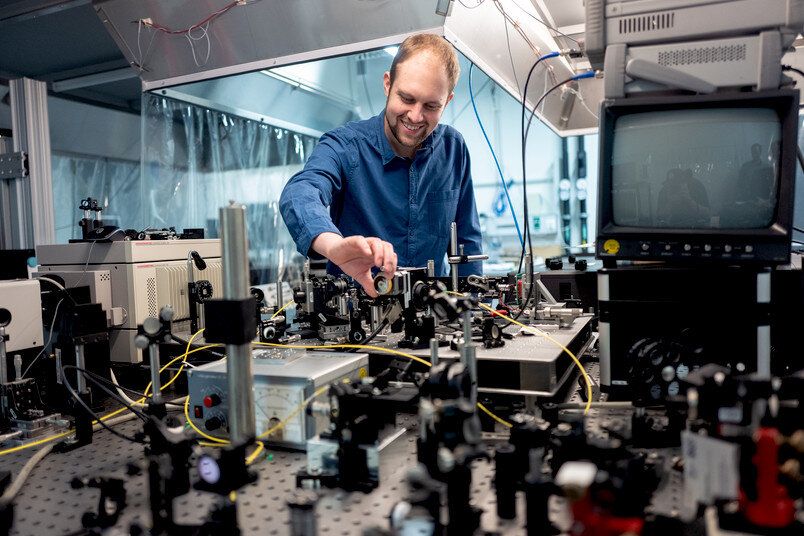

Engineers at Ruhr-Universität Bochum have developed a novel concept for rapid data transfer via optical fibre cables. In current systems, a laser transmits light signals through the cables, and information is encoded in the modulation of light intensity. The new system, a semiconductor spin laser, is based on modulating the polarisation of the light instead. Published on 3 April 2019 in the journal Nature, the study demonstrates that spin lasers can operate at least five times as fast as the best traditional systems while consuming only a fraction of the energy. Unlike other spin-based semiconductor systems, the technology potentially works at room temperature and doesn’t require any external magnetic fields. The Bochum team at the Chair of Photonics and Terahertz Technology implemented the system in collaboration with colleagues from Ulm University and the University at Buffalo.

Rapid data transfer is currently an energy guzzler

Because of physical limitations, data transfer based on modulating light intensity, without resorting to complex modulation formats, can reach frequencies of only around 40 to 50 gigahertz. Achieving this speed requires high electrical currents. “It’s a bit like a Porsche where fuel consumption dramatically increases if the car is driven fast,” says Professor Martin Hofmann, one of the engineers from Bochum. “Unless we upgrade the technology soon, data transfer and the Internet are going to consume more energy than we are currently producing on Earth.” Together with Dr. Nils Gerhardt and Ph.D. student Markus Lindemann, Martin Hofmann is therefore researching alternative technologies.
