Chip giant shouldn’t rely on gas to help meet its mammoth power demands.

Tim Daiss

Li and colleagues used a technique called distributed acoustic sensing (DAS), which, while new to the world of seismology, is already used to monitor pipelines and power cables for defects. The method involves sending laser light pulses over optical fibers and measuring the intensity of the signals reflected back from imperfections in the fiber. Slight stretching or contracting of the fiber (say, from an earthquake) can change the reflected signals.

Based on the pulse’s time of return, you can pinpoint when and where along the cable the disturbance occurred. Because light gets reflected from thousands of imperfection points along fibers, a kilometers-long stretch of cable can act as thousands of seismometers. This yields significantly more seismic data and higher resolution, which makes it possible to locate smaller seismic events.
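The link between return time and position can be sketched in a few lines. This is a minimal illustration rather than the researchers' processing code: the function name and the group index of 1.468 (a typical figure for silica fiber near 1550 nm) are assumptions that do not come from the article.

```python
# Speed of light in vacuum in m/s (exact by definition).
C_VACUUM = 299_792_458.0


def disturbance_position_m(round_trip_s: float, group_index: float = 1.468) -> float:
    """Distance along the fiber to the point that reflected the pulse.

    round_trip_s: time between sending the pulse and receiving the reflection.
    group_index:  assumed group refractive index of the fiber (hypothetical default).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # Light travels at c / n inside the fiber, and it covers the distance
    # twice (out and back), hence the division by 2.
    return C_VACUUM * round_trip_s / (2.0 * group_index)


# A reflection arriving 100 microseconds after the pulse was sent
# comes from roughly 10.2 km down the cable.
print(f"{disturbance_position_m(100e-6) / 1000:.1f} km")
```

Under these assumptions, each microsecond of round-trip delay corresponds to roughly 100 meters of fiber, which is why timing the reflections lets a single cable be read as many separate sensing points.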

The Caltech researchers have converted preexisting optical cables into a DAS array. Telecom companies usually lay down more fiber than they need, and the research team taps into some of this “dark” unused fiber. With permission from the California Broadband Cooperative, the team set up a DAS transceiver at one end of a length of fiber-optic cable along the border between California and Nevada.

Distributed acoustic sensing (DAS), which measures the strain on optical fibers installed on the seafloor, has enabled earthquakes to be observed along fiber optic cable transects, in contrast to the conventional observations using ocean bottom instruments. DAS observations were conducted on seafloor fiber optic cables offshore of Muroto, Japan to observe slow earthquakes in the Nankai Trough region.

Simulations of superfluid helium show it follows the same unusual entropy rule that black holes do.

About 10 minutes with a therapy dog soothed kids who felt anxious about being emergency room patients.

A clinical trial found that after spending time with a dog, young patients reported a significantly larger decrease in their anxiety than kids who didn’t have dog time.

Physicists have measured a nuclear reaction that can occur in neutron star collisions, providing direct experimental data for a process that had previously only been theorized. The study, led by the University of Surrey, provides new insight into how the universe’s heaviest elements are forged—and could even drive advancements in nuclear reactor physics.

In collaboration with the University of York, the University of Seville, and TRIUMF, Canada’s national particle accelerator center, the team made the first-ever measurement of a weak r-process reaction cross-section using a radioactive ion beam, in this case the ⁹⁴Sr(α,n)⁹⁷Zr reaction. In this reaction, a radioactive form of strontium (strontium-94) absorbs an alpha particle (a helium nucleus), then emits a neutron and transforms into zirconium-97.
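As a quick consistency check, the reaction can be written out in full. The proton numbers of strontium (38) and zirconium (40) are standard values, not figures taken from the article:

$$
{}^{94}_{38}\mathrm{Sr} + {}^{4}_{2}\mathrm{He} \;\rightarrow\; {}^{97}_{40}\mathrm{Zr} + {}^{1}_{0}\mathrm{n}
$$

Both sides balance: the mass numbers give $94 + 4 = 97 + 1 = 98$, and the proton numbers give $38 + 2 = 40 + 0 = 40$.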

The study has been published in Physical Review Letters.

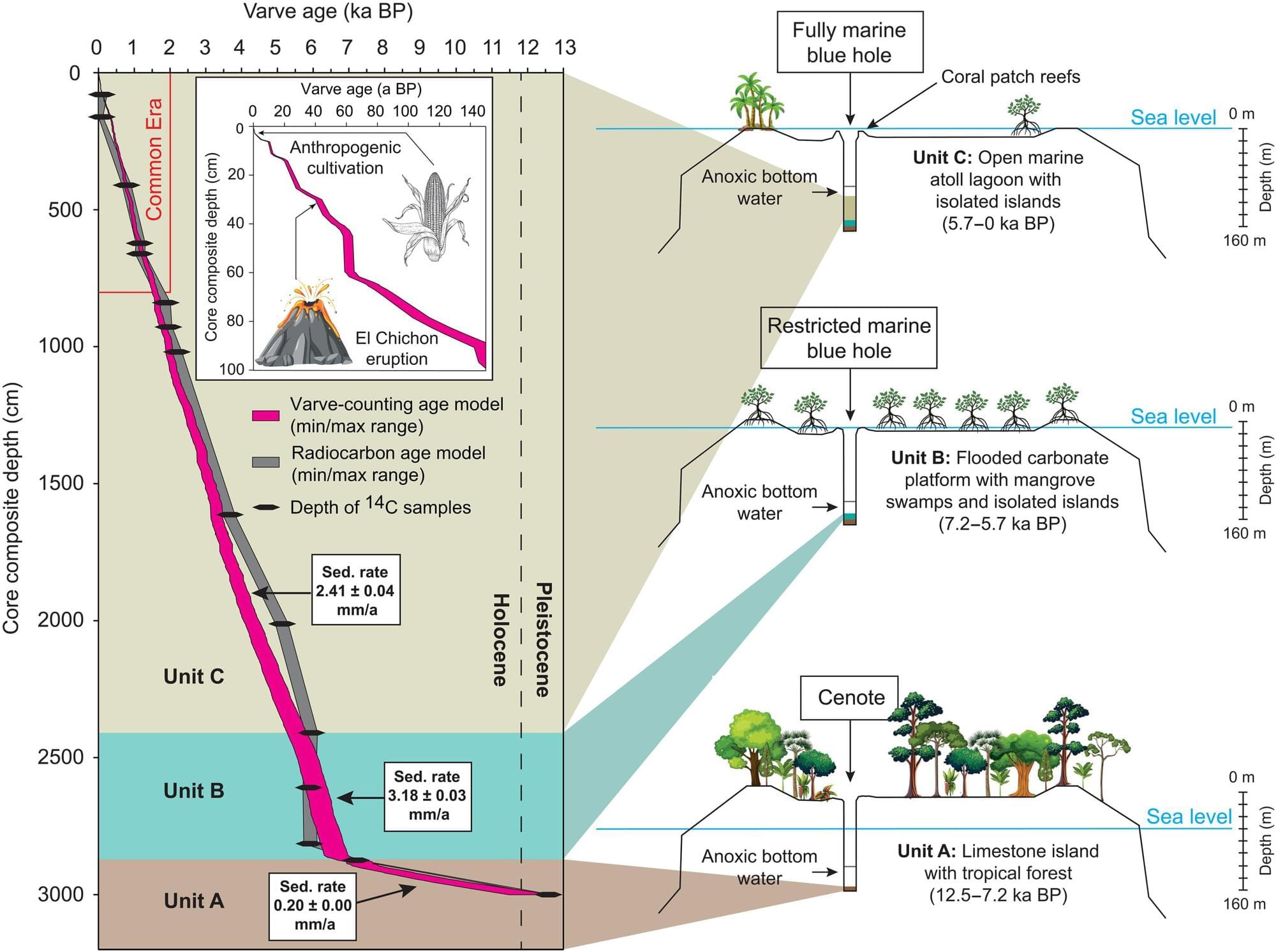

Using a sediment core taken from the Great Blue Hole off the coast of the Central American state of Belize, researchers from the universities of Frankfurt, Cologne, Göttingen, Hamburg and Bern have analyzed the local climate history of the last 5,700 years.

Investigations of the sediment layers from the 30-meter-long core revealed that storms have increased over the long term and that tropical cyclones have become much more frequent in recent decades. The results were published under the title “An annually resolved 5700-year storm archive reveals drivers of Caribbean cyclone frequency” in the journal Science Advances.

The Great Blue Hole is up to 125 meters deep and approximately 300 meters wide, situated in the very shallow Lighthouse Reef, an atoll off the coast of Belize. The hole was formed from a stalactite cave that collapsed at the end of the last ice age and then became flooded by the rising sea level as a result of the melting of the continental ice masses.