When scientists find a round, lumpy object they can’t totally explain, they call it a “blob.” Here are our nine favorite blobs of 2019.


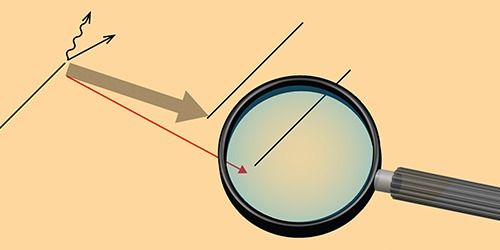

Viewpoint: A Forbidden Transition Allowed for Stars

The discovery of an exceptionally strong “forbidden” beta-decay involving fluorine and neon could change our understanding of the fate of intermediate-mass stars.

Every year roughly 100 billion stars are born and just as many die. To understand the life cycle of a star, nuclear physicists and astrophysicists collaborate to unravel the physical processes that take place in the star’s interior. Their aim is to determine how the star responds to these processes and from that response predict the star’s final fate. Intermediate-mass stars, whose masses lie somewhere between 7 and 11 times that of our Sun, are thought to die via one of two very different routes: thermonuclear explosion or gravitational collapse. Which one happens depends on the conditions within the star when oxygen nuclei begin to fuse, triggering the star’s demise. Researchers have now, for the first time, measured a rare nuclear decay of fluorine to neon that is key to understanding the fate of these “in between” stars [1, 2]. Their calculations indicate that thermonuclear explosion and not gravitational collapse is the more likely expiration route.

The evolution and fate of a star strongly depend on its mass at birth. Low-mass stars, such as the Sun, transition first into red giants and then, as they shed their outer layers, into white dwarfs made of carbon and oxygen. Massive stars, those with at least 11 times the mass of the Sun, also transition to red giants, but in the cores of these giants, nuclear fusion continues until the core has turned completely to iron. Once that happens, the star stops generating energy and starts collapsing under the force of gravity. The star’s core then compresses into a neutron star, while its outer layers are ejected in a supernova explosion. The evolution of intermediate-mass stars is less clear. Predictions indicate that they could explode either via the gravitational-collapse mechanism of massive stars or via a thermonuclear process [3–6]. The key to finding out which happens lies in the properties of an isotope of neon and its ability to capture electrons.

The ‘Quantum Computing’ Decade Is Coming—Here’s Why You Should Care

The ability to process qubits is what could allow a quantum computer to perform tasks a binary computer practically cannot, such as factoring 500-digit numbers. Performing such computations quickly and on demand might allow for highly efficient traffic flow. It could also render current encryption keys mere speed bumps for a computer able to crack them in an instant.

Multiply 1,048,589 by 1,048,601, and you’ll get 1,099,551,473,989. Does this blow your mind? Maybe it should! That 13-digit number, which is not a prime but the product of the two factors above, is the largest number ever factored by a quantum computer, one of a series of quantum computing-related breakthroughs (or at least claimed breakthroughs) achieved over the last few months of the decade.

An IBM computer factored this number about two months after Google announced that it had achieved “quantum supremacy,” a clunky term for the claim, disputed by rivals including IBM, that Google has a quantum machine that performed a calculation normal computers simply cannot.
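The multiplication above is easy to check on an ordinary computer. The sketch below uses Fermat's factorization method, a classical technique chosen here purely for illustration; it is not the quantum approach IBM used, and the function name `fermat_factor` is hypothetical. Because 1,048,589 and 1,048,601 sit only 13 and 25 above 2^20 = 1,048,576, they are very close to each other, and Fermat's method recovers them on its first attempt.

```python
from math import isqrt


def fermat_factor(n: int) -> tuple[int, int]:
    """Factor an odd n > 1 as (a - b) * (a + b) using Fermat's method.

    Searches upward from ceil(sqrt(n)) for an a such that a*a - n is a
    perfect square b*b. This is fast when the two factors are close
    together; for a prime n it eventually returns the trivial pair (1, n).
    """
    if n <= 1 or n % 2 == 0:
        raise ValueError("expected an odd integer greater than 1")
    a = isqrt(n)
    if a * a < n:  # round up to ceil(sqrt(n))
        a += 1
    while True:
        b2 = a * a - n
        b = isqrt(b2)
        if b * b == b2:  # a^2 - n is a perfect square, so n = (a - b)(a + b)
            return a - b, a + b
        a += 1


N = 1_099_551_473_989
p, q = fermat_factor(N)
print(p, q)        # 1048589 1048601
print(p * q == N)  # True
```

Here a = 1,048,595 and b = 6 on the first try, so N = 1,048,595² − 6². This only shows that the arithmetic checks out; it says nothing about how hard the quantum demonstration was to carry out.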


Study Finds New Key To Longevity — And It’s In The Gut

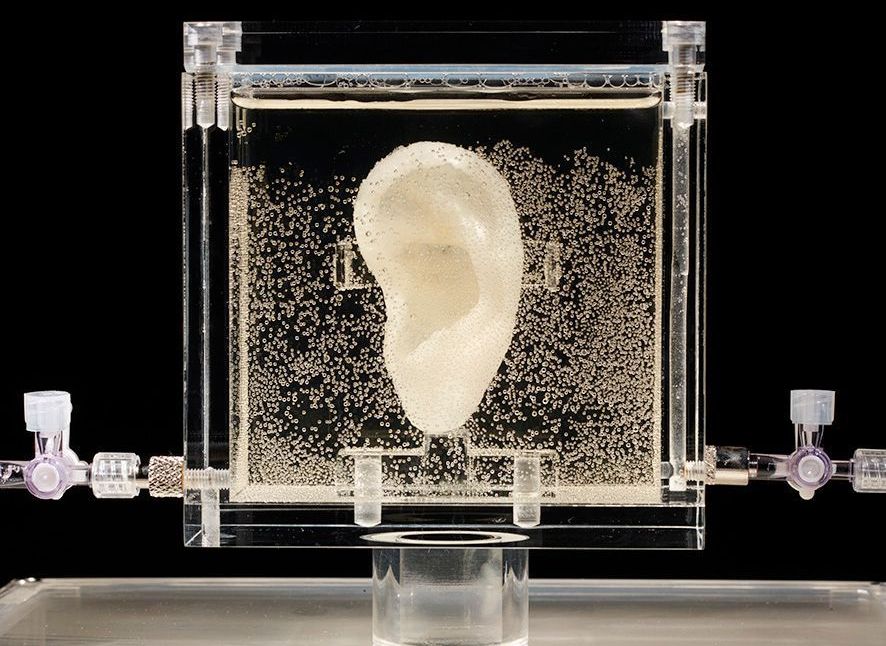

Having a healthy gut should always be a priority when dealing with any health-related issues. Gut health has been linked to various conditions, such as IBS, asthma, thyroid disorders, and even diabetes. A new study is giving us yet another reason to promote gut health, and we’re excited about it.

Using mice, an international research team has discovered a specific microorganism living in the gut that may affect the aging process.