Cheap technology will sweep away lots of jobs. That’s an argument for a better safety net.

It’s amusing that these people know where this is headed, but aren’t interested enough to stop it.

The co-chief investment officer and co-chairman of Bridgewater Associates shared his thoughts in a Facebook post on Thursday.

Dalio says he was responding to a question about whether machine intelligence would put enough people out of work that the government will have to pay people to live with a cash handout, a concept known as universal basic income.

My view is that algorithmic/automated decision making is a two-edged sword that is improving total productivity but is also eliminating jobs, leading to big wealth and opportunity gaps and populism, and creating a national emergency.

Don’t really care about the competition, but this horse race means AI hitting the 100 IQ level at or before 2029 should probably happen.

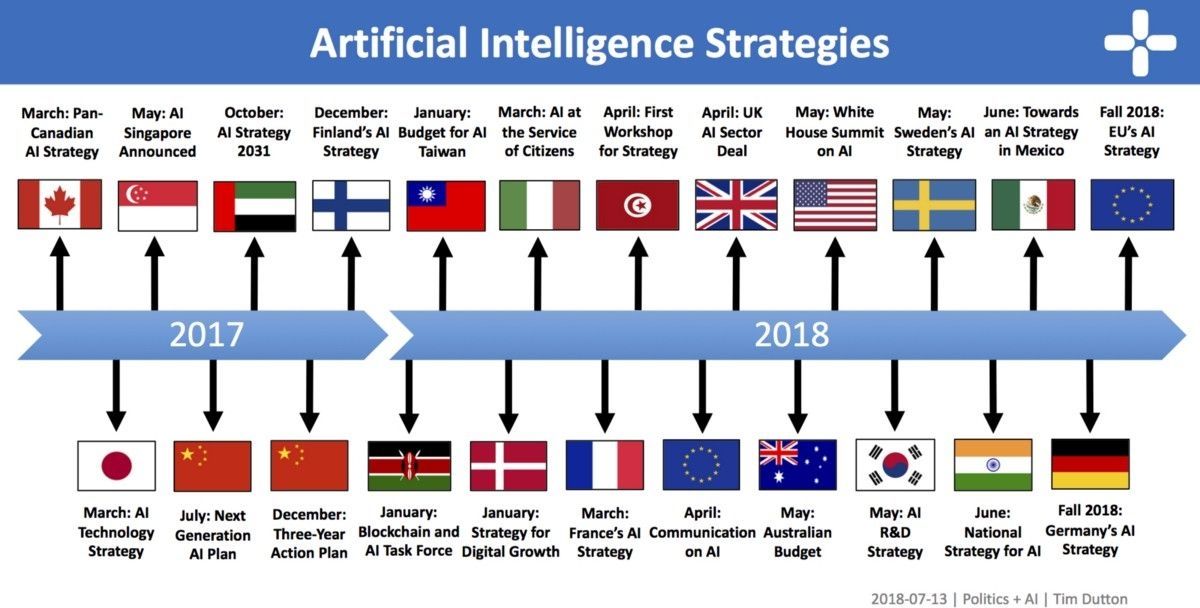

The race to become the global leader in artificial intelligence (AI) has officially begun. In the past fifteen months, Canada, Japan, Singapore, China, the UAE, Finland, Denmark, France, the UK, the EU Commission, South Korea, and India have all released strategies to promote the use and development of AI. No two strategies are alike, with each focusing on different aspects of AI policy: scientific research, talent development, skills and education, public and private sector adoption, ethics and inclusion, standards and regulations, and data and digital infrastructure.

This article summarizes the key policies and goals of each national strategy. It also highlights relevant policies and initiatives that the countries have announced since the release of their initial strategies.

I plan to continuously update this article as new strategies and initiatives are announced. If a country or policy is missing (or if something in the summary is incorrect), please leave a comment and I will update the article as soon as possible.

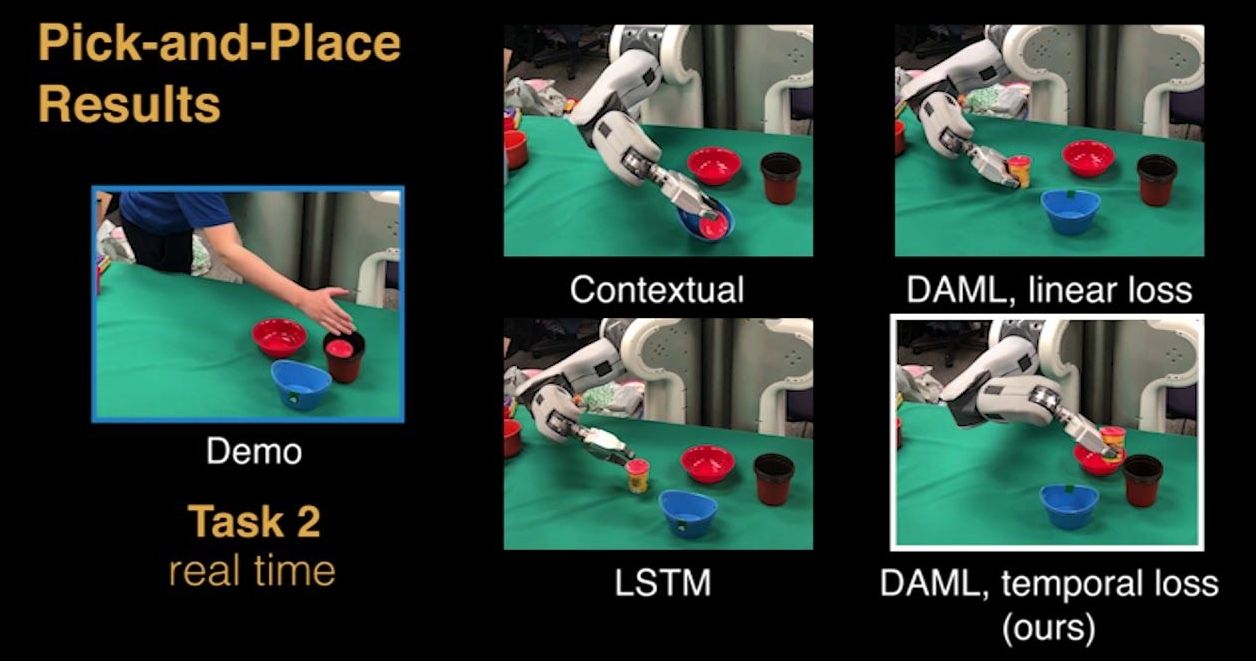

A team of researchers at UC Berkeley has found a way to get a robot to mimic an activity it sees on a video screen just a single time. In a paper they have uploaded to the arXiv preprint server, the team describes the approach they used and how it works.

Robots that learn to do things simply by watching a human carry out an action a single time would be capable of learning many more new actions much more quickly than is now possible. Scientists have been working hard to figure out how to make it happen.

Historically though, robots have been programmed to perform actions like picking up an object via code that expressly lays out what needs to be done and how. That is how most robots that do things like assemble cars in a factory work. Such robots must still undergo a training process by which they are led through procedures multiple times until they are able to do them without making mistakes. More recently, robots have been programmed to learn purely through observation, much like humans and other animals do. But such imitative learning typically requires thousands of observations. In this new effort, the researchers describe a technique they have developed that allows a robot to perform a desired action by watching a human being do it just a single time.

The 90-pound mechanical beast — about the size of a full-grown Labrador — is intentionally designed to do all this without relying on cameras or any external environmental sensors. Instead, it nimbly “feels” its way through its surroundings in a way that engineers describe as “blind locomotion,” much like making one’s way across a pitch-black room.

“There are many unexpected behaviors the robot should be able to handle without relying too much on vision,” says the robot’s designer, Sangbae Kim, associate professor of mechanical engineering at MIT. “Vision can be noisy, slightly inaccurate, and sometimes not available, and if you rely too much on vision, your robot has to be very accurate in position and eventually will be slow. So we want the robot to rely more on tactile information. That way, it can handle unexpected obstacles while moving fast.”

Researchers will present the robot’s vision-free capabilities in October at the International Conference on Intelligent Robots, in Madrid. In addition to blind locomotion, the team will demonstrate the robot’s improved hardware, including an expanded range of motion compared to its predecessor Cheetah 2, that allows the robot to stretch backwards and forwards, and twist from side to side, much like a cat limbering up to pounce.

The concept of an invisibility cloak sounds like pure science fiction, but hiding something from view is theoretically possible, and in some very-controlled cases it’s experimentally possible too. Now, researchers have developed a new device that works in a completely different way to existing cloaking technology, neatly sidestepping some past issues and potentially helping to hide everyday objects under everyday conditions.

We see objects because light bounces off them in a particular way before landing on our retinas, and they get their colors by reflecting more light of that particular color. The basic concept of cloaking objects involves finding ways to disrupt that process and building those ways into devices or metamaterials.

Marvin Minsky was one of the founding fathers of artificial intelligence and co-founder of the Massachusetts Institute of Technology’s AI laboratory.

Abstract for scientists

Neuro cluster Brain Model analyses the processes in the brain from the point of view of computer science. The brain is a massively parallel computing machine, which means that different areas of the brain process information independently of each other. Neuro cluster Brain Model shows how independent, massively parallel information processing explains the underlying mechanism of previously unexplainable phenomena such as sleepwalking, dissociative identity disorder (a.k.a. multiple personality disorder), hypnosis, etc.

Bottom-of-the-barrel white-collar jobs will all probably be automated by 2025.

When Google introduced Google Duplex, its AI assistant designed to speak like a human, the company showed off how the average person could use the tech to save time making reservations and whatnot. What wasn’t touched on was the possibility that Duplex may have a use on the other side of the line, taking over for call center employees and telemarketers.

A report from The Information suggests Google may be making a play to find other applications for its human-sounding assistant and has already started experimenting with ways to use Duplex to do away with roles currently filled by humans, a move that could have ramifications for millions of people.

Preparations are underway for the first-ever trip to the Sun, a new resupply mission arrives at the International Space Station, and our Dawn spacecraft captures close-up photos of dwarf planet Ceres. These are a few of the stories to tell you about This Week at NASA!