Top 10 Upcoming Future Technologies | Trending Technologies | 10 Upcoming Techs

Future technologies are currently developing at an accelerated rate, and future technology ideas are being turned into reality at a very fast pace.

These innovative technologies aim to address global challenges while making life simpler on this planet. Let’s get started and take a look at the top technologies of the future | Emerging technologies.

#futuretechnologies #futuretech #futuristictechnologies #emergingtechnologies #technology #tech #besttechnology #besttech #newtechnology #cybersecurity #blockchain #emergingtech #futuretechnologyideas #besttechnologies #innovativetechs

Chapters:

00:00 ✅ Intro.

00:23 ✅ 10. Genomics: Technology to improve your health.

01:13 ✅ 09. New Energy Solutions for the benefit of our environment.

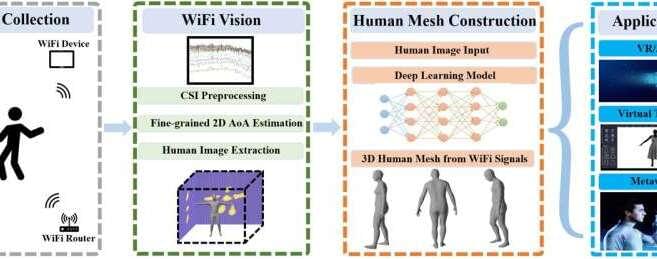

01:53 ✅ 08. Robotic Process Automation: Technology that automates repetitive jobs.

02:43 ✅ 07. Edge Computing: Tackling the limitations of cloud computing.

03:39 ✅ 06. Quantum Computing: Helping to stop the spread of diseases.

04:31 ✅ 05. Augmented Reality and Virtual Reality: Now being employed for training.

05:05 ✅ 04. Blockchain: Delivers valuable security.

05:50 ✅ 03. Internet of Things: Many devices can connect to the internet and to one another.

06:40 ✅ 02. Cybersecurity: Protecting systems and data from attacks.

07:24 ✅ 01. 3D Printing: Used to create prototypes.

Here at Tech Buzzer, we make sure you stay in touch with the latest updates and understand the foundations of the tech industry. Thank you for being with us. Please subscribe to our channel and enjoy the ride.