For the first time ever, scientists working for the United States government and Google have managed to read and understand a portion of a brain in real time. In the future, this is going to enable abilities such as reading human minds and memories. The question is how long it will take until the government starts secret projects in this area for harmful purposes.

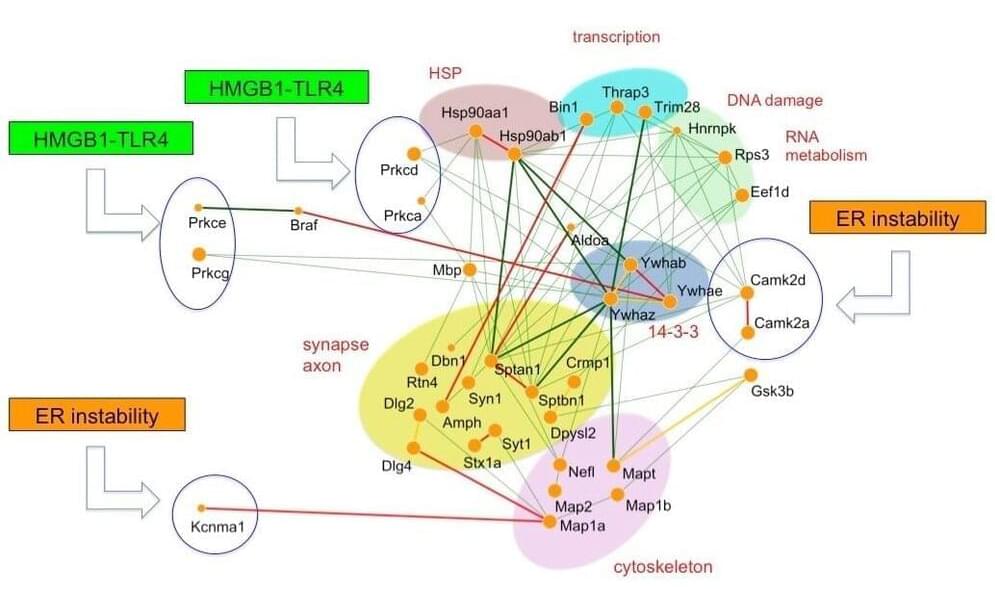

The Human Brain Project is the biggest secret scientific research project. Based on exascale supercomputers, it aims to build a collaborative ICT-based research infrastructure that allows researchers across Europe and the United States government to advance knowledge in neuroscience, computing, and brain-related medicine, and ultimately to create a brain-computer interface that can record and read memories from a human brain.

–

Every day brings us a day closer to the Technological Singularity. Experience robots learning to walk and think, humans flying to Mars, and all of us finally merging with technology itself. As all of that happens, we at AI News cover humanity's most cutting-edge technological inventions.

If you enjoyed this video, please consider rating it and subscribing to our channel for more frequent uploads. Thank you!

–

TIMESTAMPS:

00:00 What has just been accomplished.

01:30 How the Brain Map was created.

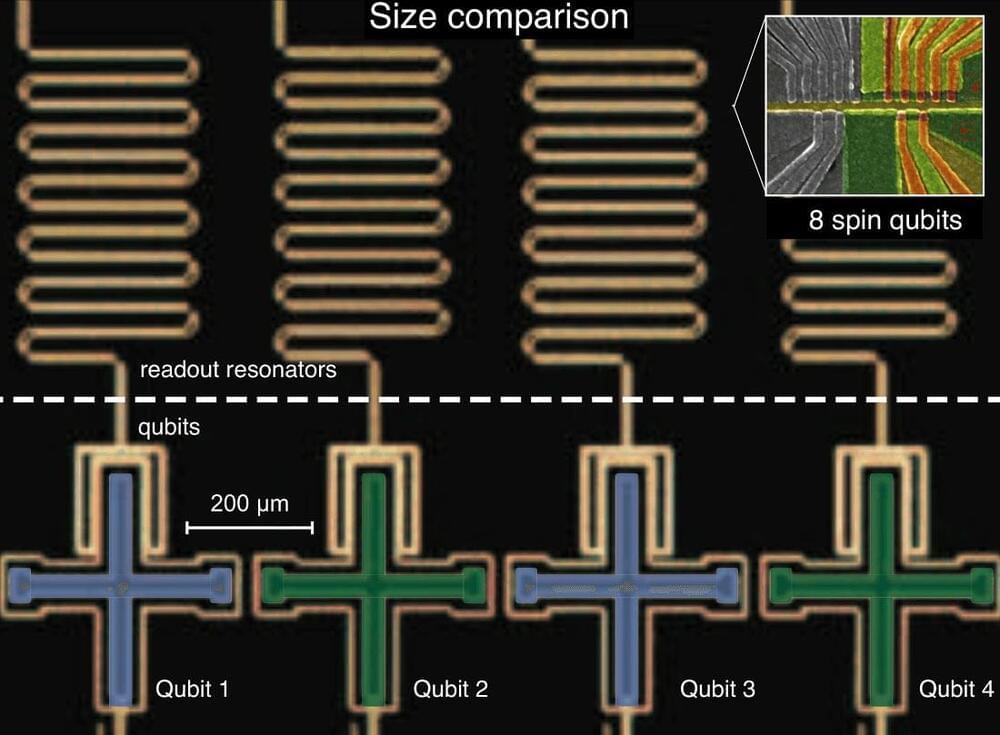

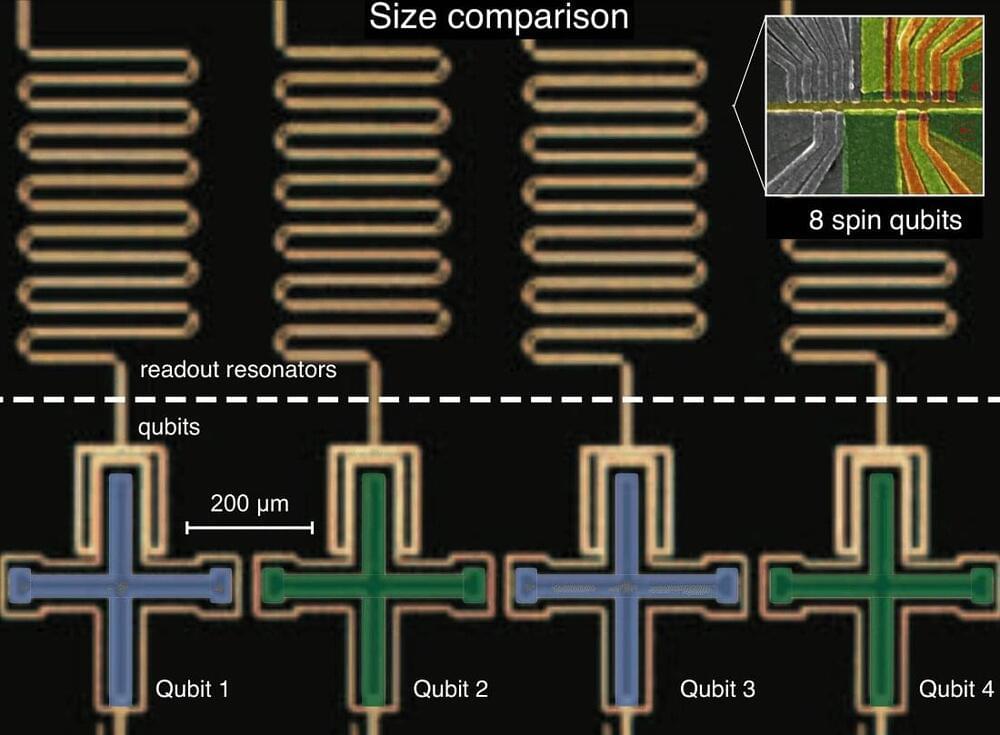

03:32 The technology to enable reading the brain.

05:22 What this will do for us.

07:41 Last Words.

–

#bci #ai #mindreading