In its heyday, UIUC’s Blue Waters was one of the world’s top supercomputers. Anyone who was curious could drop by its 30,000-square-foot machine room for a tour and spend half an hour strolling among the 288 huge black cabinets that housed its hundreds of thousands of computational cores, all supported by a 24-megawatt power supply.

Blue Waters is gone, but today UIUC is home to not just one, but tens of thousands of vastly superior computers. Although these wondrous machines put Blue Waters to shame, each one weighs just three pounds, can be fueled by coffee and sandwiches, and is only the size of its owner’s two hands curled together. We all carry them between our ears.

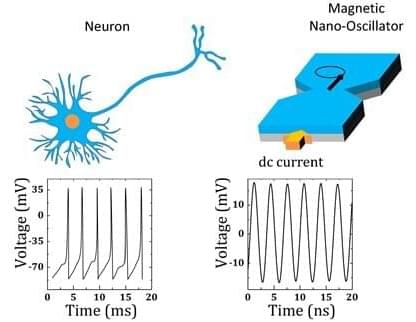

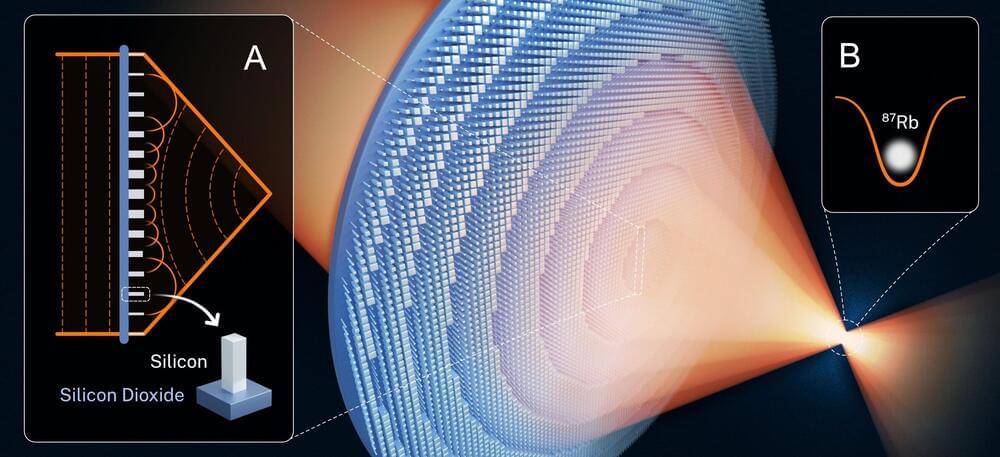

The fact is that, outside a narrow range of well-defined tasks, humanity is far from having artificial computers that can match the capabilities of the human brain. Will we ever capture the brain’s magic? To help answer that question, MRL’s Axel Hoffmann recently led the writing of an APL Materials “Perspectives” article that summarizes and reflects on efforts to find so-called “quantum materials” that can mimic brain function.