The team was able to recreate NASA’s iconic “Pillars of Creation” image from the James Webb Space Telescope data — originally created by a supercomputer — using just a tabletop computer.

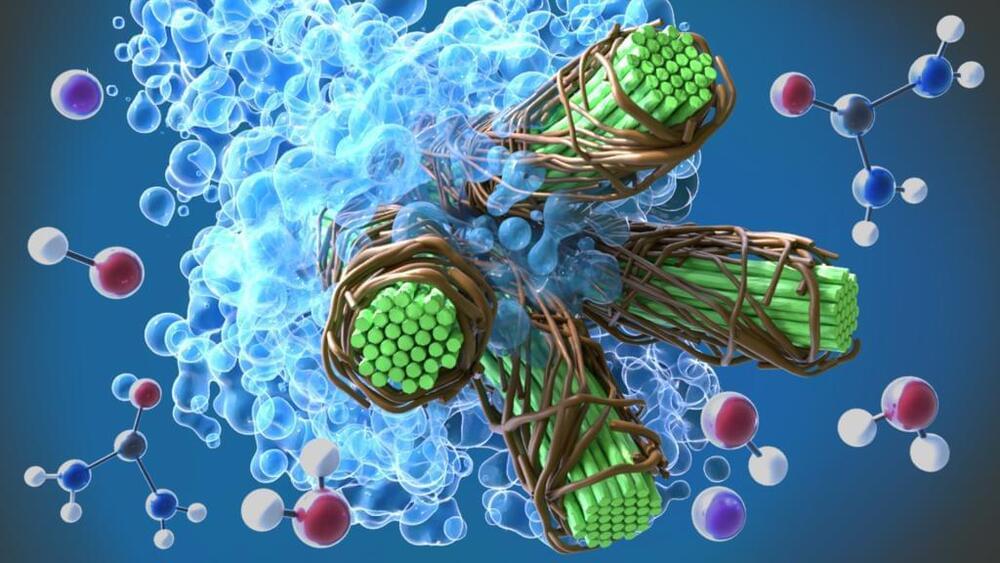

A team led by scientists at the Department of Energy’s Oak Ridge National Laboratory identified and successfully demonstrated a new method to process a plant-based material called nanocellulose that reduced energy needs by a whopping 21%. The approach was discovered using molecular simulations run on the lab’s supercomputers, followed by pilot testing and analysis.

The method, leveraging a solvent of sodium hydroxide and urea in water, can significantly lower the production cost of nanocellulosic fiber — a strong, lightweight biomaterial ideal as a composite for 3D-printing structures such as sustainable housing and vehicle assemblies. The findings support the development of a circular bioeconomy in which renewable, biodegradable materials replace petroleum-based resources, decarbonizing the economy and reducing waste.

Colleagues at ORNL, the University of Tennessee, Knoxville, and the University of Maine’s Process Development Center collaborated on the project that targets a more efficient method of producing a highly desirable material. Nanocellulose is a form of the natural polymer cellulose found in plant cell walls that is up to eight times stronger than steel.

Scientists at ORNL used the Frontier supercomputer to resolve a decade-long debate about the magnetic properties of calcium-48.

ROUND ROCK, Texas (KXAN) — A new, multi-million dollar supercomputer is headed to Round Rock. The Texas Advanced Computing Center (TACC) at the University of Texas at Austin (UT) partnered with Sabey Data Centers for the project.

The supercomputer, dubbed “Horizon,” is expected to be online in 2026. The technology is part of a new, larger program from the National Science Foundation (NSF), which recently provided $457 million in funding to UT to help bring the academic supercomputer to the area.

Artificial intelligence (AI) is on the brink of a significant new milestone. A team of researchers aims to develop artificial general intelligence (AGI), capable of surpassing human intelligence in various fields, by establishing a global network of ultra-powerful supercomputers. This project, led by SingularityNET, will commence in September with the launch of the first supercomputer specifically designed for this purpose.

What does the future hold? What will become of this planet and its inhabitants in the centuries to come?

We are living in a historical period that sometimes feels like the prelude to something truly remarkable or terribly dire about to unfold.

This captivating video seeks to decipher the signs and attempt to construct plausible scenarios from the nearly nothing we hold in our hands today.

As always, it will be scientific discoveries leading the dance of change, while philosophers, writers, politicians, and all the others will have the seemingly trivial task of containing, describing, and guiding.

Before embarking on our journey through time, let me state the obvious: No one knows the future!

Numerous micro and macro factors could alter this trajectory—world wars, pandemics, unimaginable social shifts, or climate disasters.

Nevertheless, we’re setting off. And we’re doing so by discussing the remaining decades of the century we’re experiencing right now.

Credits: Ron Miller, Mark A. Garlick / MarkGarlick.com, Elon Musk/SpaceX/ Flickr.

–

00:00 Intro.

01:20 Artificial Intelligence.

02:40 2030 The ELT telescope.

03:20 2031 The International Space Station is deorbited.

04:05 2035 The cons.

04:45 2036 Humans land on Mars.

05:05 2037 The global population reaches 9 billion.

05:57 2038 Airplane accident casualties = 0.

06:20 Fusion power is nearing commercial availability.

07:01 2042 Supercomputers.

07:30 2045 turning point for human-artificial intelligence interactions.

08:58 2051 Establishment of the first permanent lunar base.

09:25 2067 The first generation of antimatter-powered spacecraft emerging.

10:07 2080 Autonomous vehicles dominate the streets.

10:35 2090 Religion is fading from European culture.

10:55 2099 Consideration of Mars terraforming.

11:28 22nd century Moon and Mars Settlements.

12:10 2130 Transhumanism.

12:41 2132 World records are shattered.

12:57 2137 A space elevator.

14:32 2170 By this year, there are dozens of human settlements on the Moon.

15:18 2180

16:18 23rd century Immortality.

16:49 2230 Hi-Tech and Automated Cities.

17:23 2310 23rd Century: Virtual Reality and Immortality.

18:01 2320 Antimatter-powered propulsion.

18:40 2500 Terraforming Mars Abandoned.

19:05 2600 Plastic Cleanup.

19:25 2800 Silent Probes.

19:37 3100 Humanity as a Type 2 Civilization.


Some of these problems are as simple as factoring a large number into primes. Others are among the most important facing Earth today, like quickly modeling complex molecules for drugs to treat emerging diseases, and developing more efficient materials for carbon capture or batteries.

However, in the next decade, we expect a new form of supercomputing to emerge unlike anything prior. Not only could it potentially tackle these problems, but we hope it’ll do so with a fraction of the cost, footprint, time, and energy. This new supercomputing paradigm will incorporate an entirely new computing architecture, one that mirrors the strange behavior of matter at the atomic level—quantum computing.

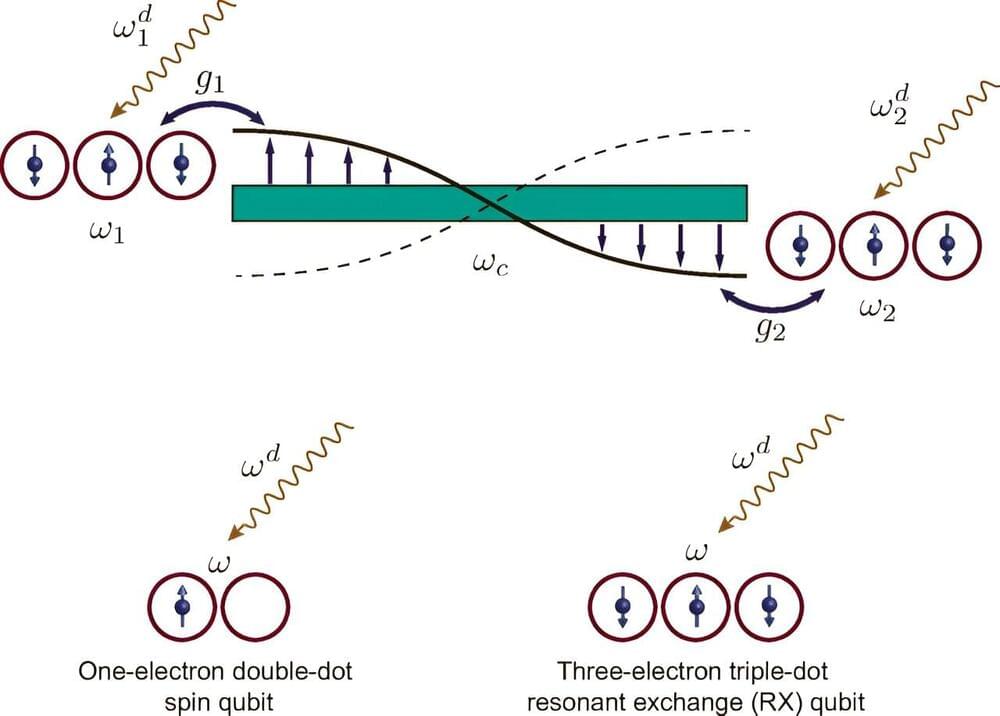

For decades, quantum computers have struggled to reach commercial viability. The quantum behaviors that power these computers are extremely sensitive to environmental noise, and difficult to scale to large enough machines to do useful calculations. But several key advances have been made in the last decade, with improvements in hardware as well as theoretical advances in how to handle noise. These advances have allowed quantum computers to finally reach a performance level where their classical counterparts are struggling to keep up, at least for some specific calculations.

The operation of a quantum computer relies on encoding and processing information in the form of quantum bits—defined by two states of quantum systems such as electrons and photons. Unlike binary bits used in classical computers, quantum bits can exist in a combination of zero and one simultaneously—in principle allowing them to perform certain calculations exponentially faster than today’s largest supercomputers.
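To make the idea of a bit that is "a combination of zero and one" more concrete, here is a minimal sketch in Python of a single simulated qubit. It is an illustration only and not how any real quantum hardware or vendor library is programmed: the names `ZERO`, `hadamard`, `probabilities`, and `measure` are hypothetical helpers introduced for this example. The qubit is assumed to be described by two complex amplitudes, and the chance of reading 0 or 1 is taken to be the squared magnitude of each amplitude.

```python
import math
import random

# Assumption for illustration: a qubit is a pair of complex amplitudes
# (amplitude of "0", amplitude of "1"). ZERO is the definite state "0".
ZERO = (1 + 0j, 0 + 0j)

def hadamard(state):
    """Apply the Hadamard gate, which maps a definite 0 to an equal mix of 0 and 1."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(state):
    """Return (P(0), P(1)): the squared magnitude of each amplitude."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)

def measure(state, rng=random):
    """Sample one classical bit (0 or 1) according to the outcome probabilities."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

superposed = hadamard(ZERO)
p0, p1 = probabilities(superposed)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
print("one measurement:", measure(superposed))
```

Simulating this on a classical machine is cheap for one qubit, but a system of n qubits needs 2**n amplitudes, which is one way to see why large quantum states quickly become hard for classical computers to track.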