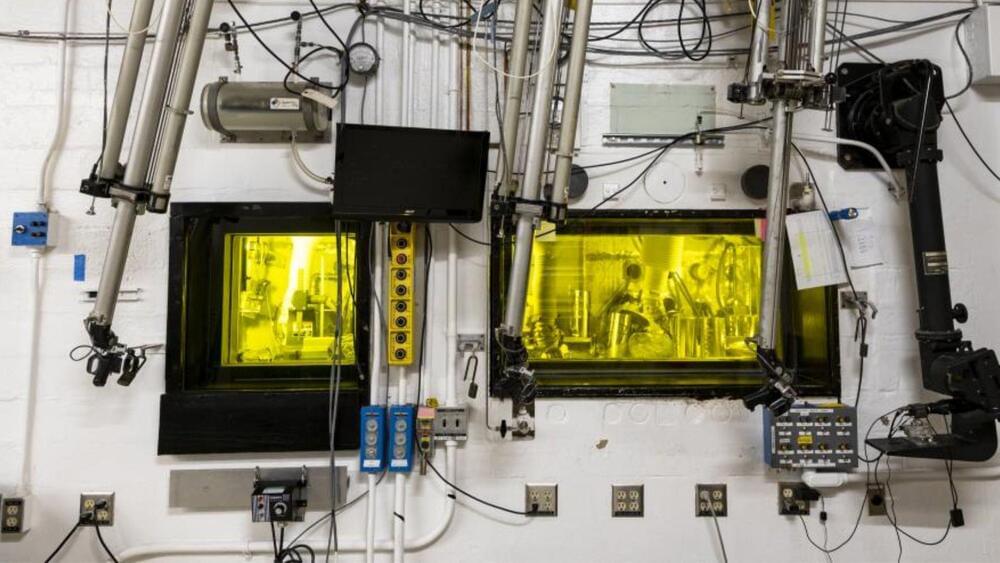

Scientists used a supercomputer to simulate nuclear fission with unprecedented accuracy, confirming the existence of scission neutrons.

Nuclear fission—when the nucleus of an atom splits in two, releasing energy—may seem like a process that is fully understood. First discovered in 1939 and thoroughly studied ever since, fission is a constant factor in modern life, used in everything from nuclear medicine to power-generating nuclear reactors. However, it is a force of nature that still contains mysteries yet to be solved.

Researchers from the University of Washington, Seattle, or UW, and Los Alamos National Laboratory have used the Summit supercomputer at the Department of Energy’s Oak Ridge National Laboratory to answer one of fission’s biggest questions: What exactly happens during the nucleus’s “neck rupture” as it splits in two?

The resulting paper is published in the journal Physical Review Letters.

Crazy: Few would dispute that Elon Musk is driven. Despite his various detractors, the entrepreneur has built Tesla and SpaceX into major competitors, if not leaders, in their respective industries. This success comes amid various side endeavors like Neuralink and the Twitter/X transition. Now, his xAI team has gotten an AI supercluster up and running in just a few weeks.

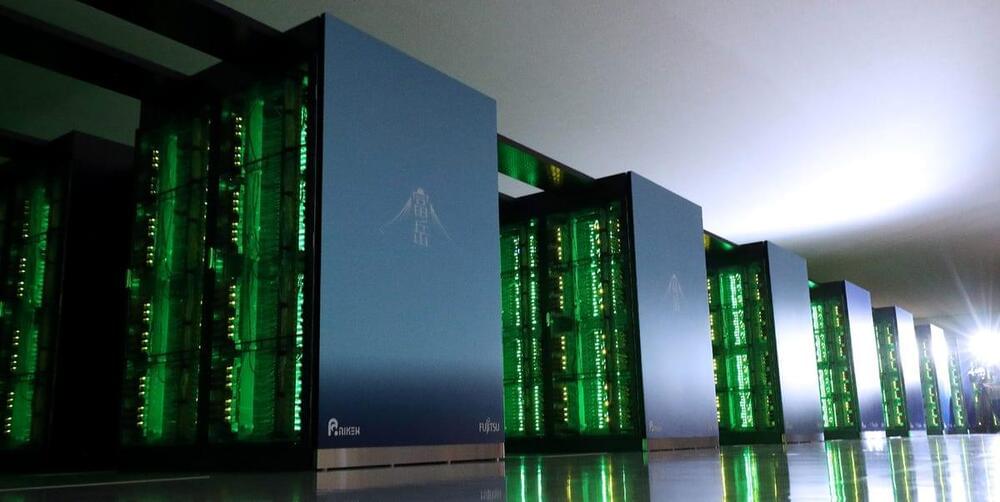

Elon Musk and his xAI team have seemingly done the impossible. The company built a supercluster of 100,000 Nvidia H200 GPUs in only 19 days. Nvidia CEO Jensen Huang called the feat “superhuman.” Huang shared the incredible story in an interview with the Tesla Owners Silicon Valley group on X.

According to Huang, constructing a supercomputer of this size would take most crews around four years – three years in planning and one year on shipping, installation, and operational setup. However, in less than three weeks, Musk and his team managed the entire process – from concept to full functionality. The xAI supercluster even completed its first AI training run shortly after the cluster was powered up.

CAMBRIDGE, England, Oct. 15, 2024 — Nu Quantum has announced a proof-of-principle prototype that advances the development of modular, distributed quantum computers by enabling connections across different qubit modalities and providers. The technology, known as the Qubit-Photon Interface, functions similarly to Network Interface Cards (NICs) in classical computing, facilitating communication between quantum computers over a network and supporting the potential growth of quantum infrastructure akin to the impact NICs have had on the Cloud and AI markets.

For quantum computers to achieve practical applications—such as accurately simulating atomic-level interactions—they must scale to 1,000 times their current size. This will require a shift from single quantum processing units (QPUs) to distributed quantum systems composed of hundreds of interconnected QPUs, operating at data center scale, similar to cloud and AI supercomputers.

The efficient transfer of quantum information between matter and light is the biggest challenge to scaling quantum computers, and this is the specific issue that the Qubit-Photon Interface (QPI) addresses.

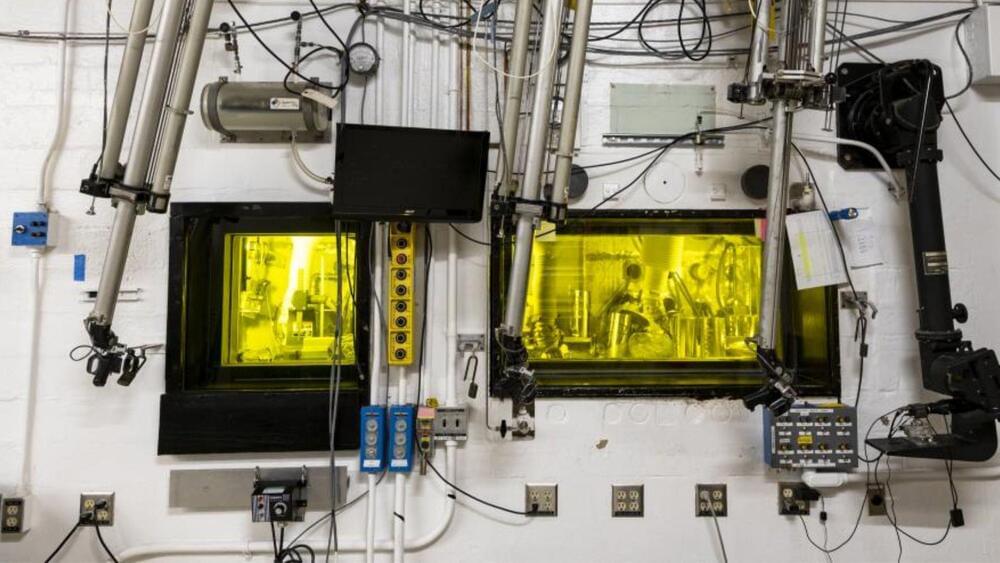

The US government has launched a new supercomputer in Livermore, California.

The Department of Defense (DoD) and National Nuclear Security Administration (NNSA) this month inaugurated a new supercomputing system dedicated to biological defense at the Lawrence Livermore National Laboratory (LLNL).

The system’s specifications have not been shared, but it uses the same architecture as the upcoming El Capitan system.

Deep inside what we perceive as solid matter, the landscape is anything but stationary. The building blocks of the atom’s nucleus (particles called hadrons, which a high school student would recognize as protons and neutrons) are made up of a seething mixture of interacting quarks and gluons, known collectively as partons.

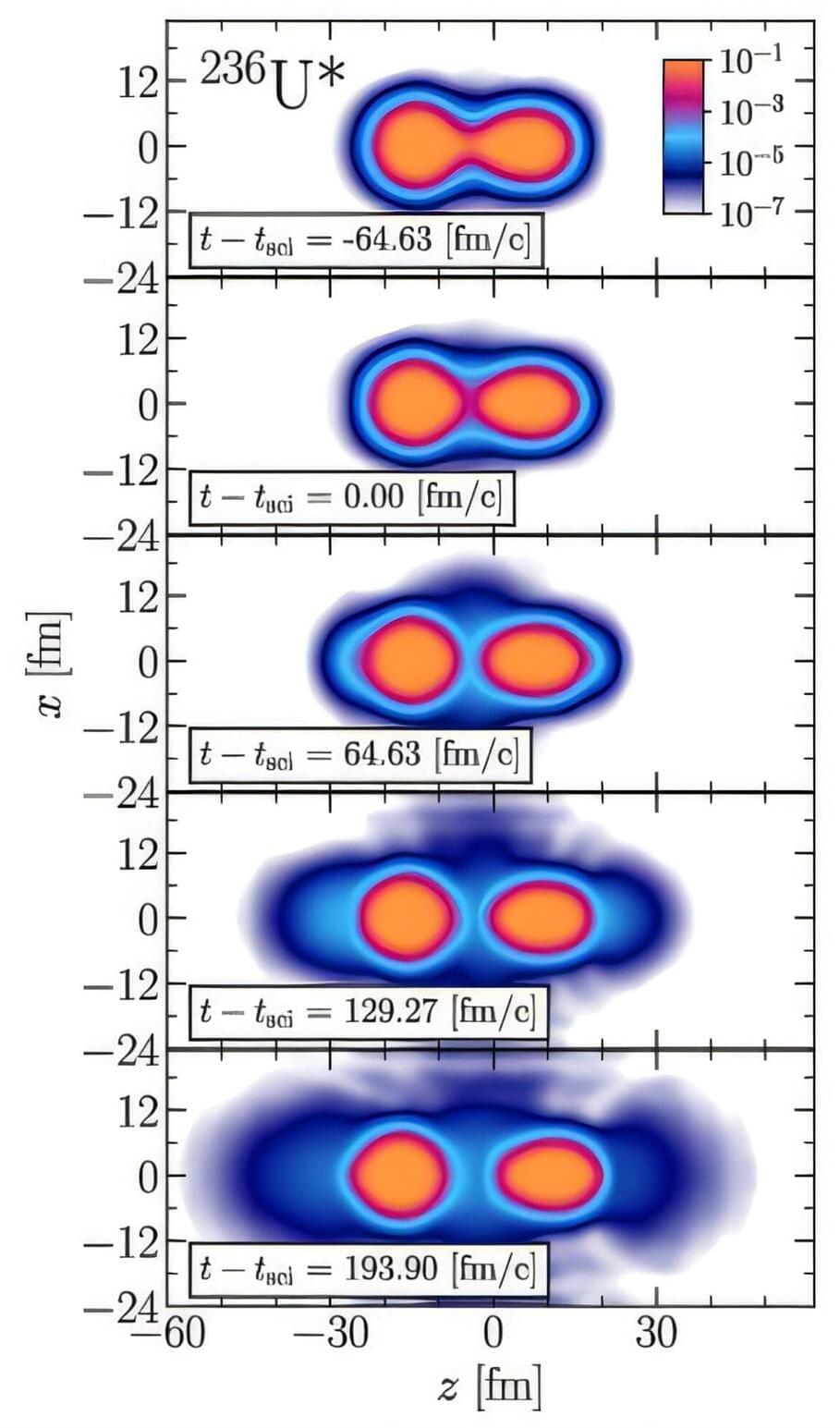

PRESS RELEASE — IQM Quantum Computers (IQM), a global leader in designing, building, and selling superconducting quantum computers, today announced that it has reached a milestone of producing 30 full-stack quantum computers in its manufacturing facility in Finland.

In addition, IQM has completed the delivery and installation of six full-stack quantum computers for customers worldwide. IQM’s previously announced customers include VTT Technical Research Centre of Finland, and Leibniz Supercomputing Centre (LRZ) and Forschungszentrum Jülich in Germany.

With increasing demand for on-premises quantum computers globally, IQM Quantum Computers Co-CEO Mikko Välimäki highlighted the significance of the manufacturing milestone, stating: “One of the key bottlenecks in quantum computer adoption has been prohibitively high prices. We are the first quantum computer manufacturer with the goal of taking quantum computers to a much wider market with industrialized manufacturing capabilities that help drive the prices lower. Looking ahead, our production line has the capacity to deliver up to 20 full-stack quantum computers a year.”

Elon Musk is not smiling: Oracle and Elon Musk’s AI startup xAI recently ended talks on a potential $10 billion cloud computing deal, with xAI opting to build its own data center in Memphis, Tennessee.

At the time, Musk emphasised the need for speed and control over xAI’s own infrastructure. “Our fundamental competitiveness depends on being faster than any other AI company. This is the only way to catch up,” he said.

xAI is constructing its own AI data center with 100,000 NVIDIA chips. The company claims it will be the world’s most powerful AI training cluster, marking a significant shift in strategy from cloud reliance to full infrastructure ownership.