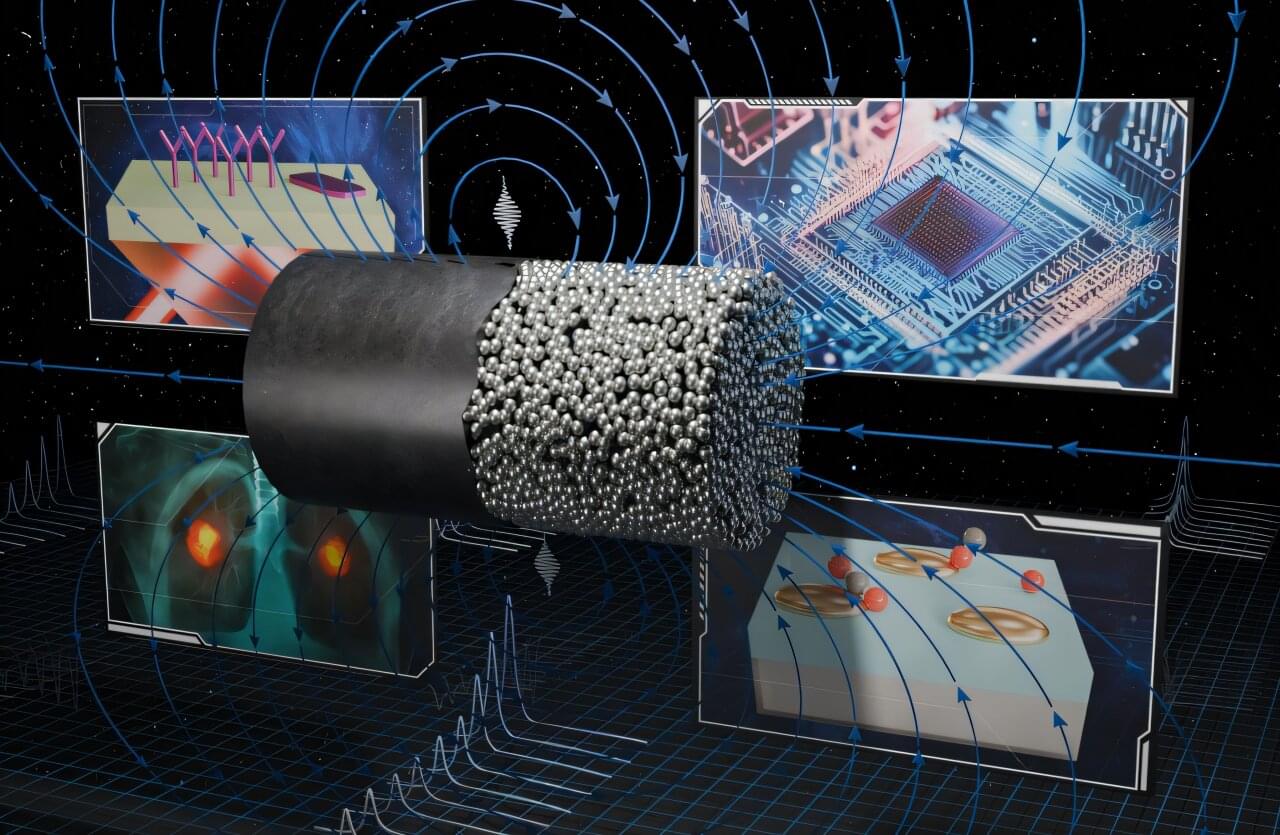

Researchers at IBM and Lockheed Martin combined high-performance computing with quantum computing to accurately model the electronic structure of methylene, an ‘open-shell’ molecule, a task that has long been a hurdle for classical computing. This is the first application of the sample-based quantum diagonalization (SQD) technique to open-shell systems, according to a press release.

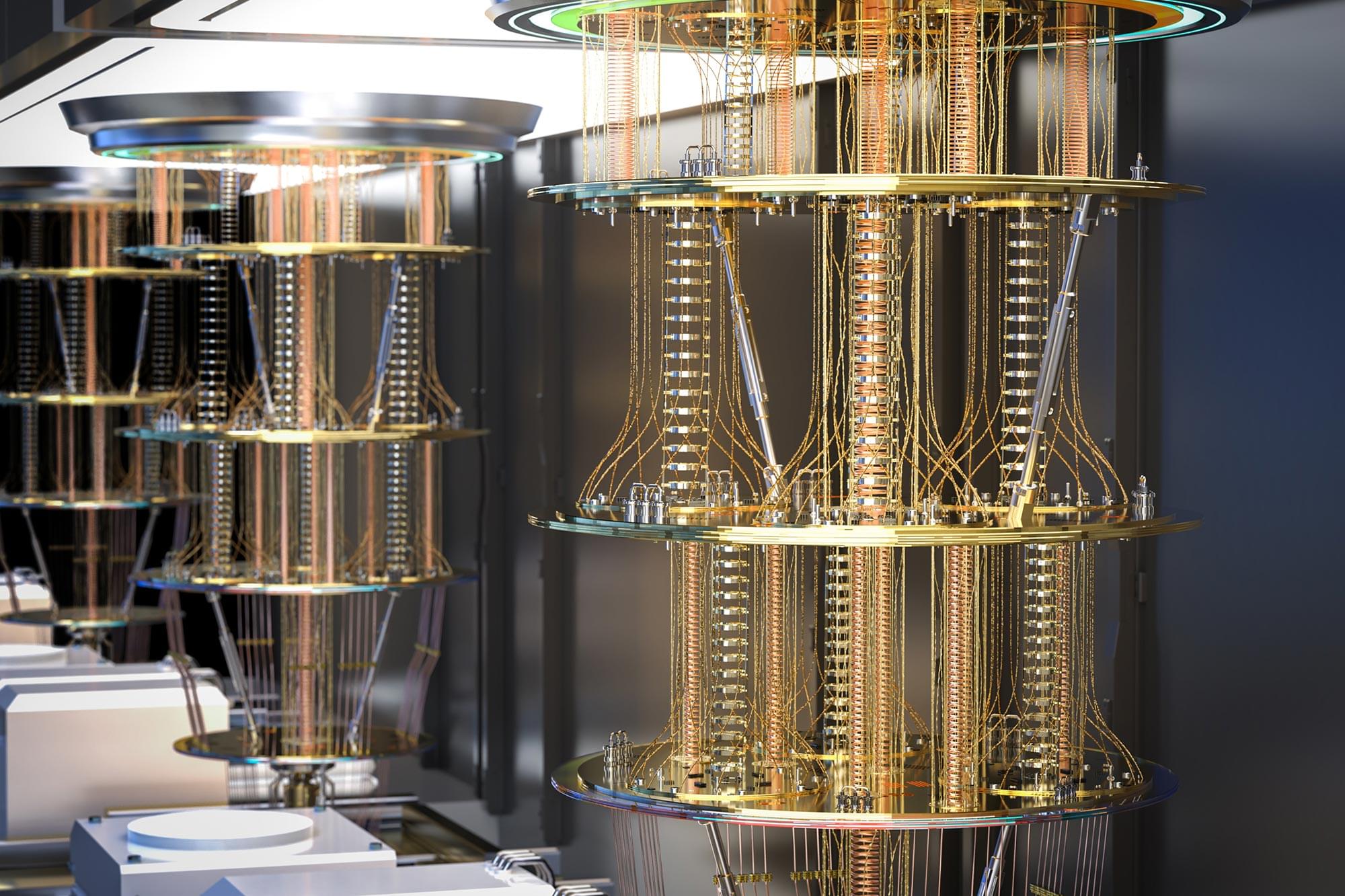

Quantum computing, which promises computations at speeds beyond the reach of even today's fastest supercomputers, is the next frontier of computing. By using quantum states as quantum bits (qubits), these computers are expected to surpass the computational capabilities humanity has had access to so far and to open up new research areas.