In 2018, Scott Aaronson, a computer scientist at the University of Texas at Austin, proposed a protocol for generating randomness that could be certified as truly unpredictable. He has now seen that idea demonstrated in practice. “When I first proposed my certified randomness protocol in 2018, I had no idea how long I’d need to wait to see an experimental demonstration of it,” said Aaronson, who directs the Quantum Information Center at UT Austin.

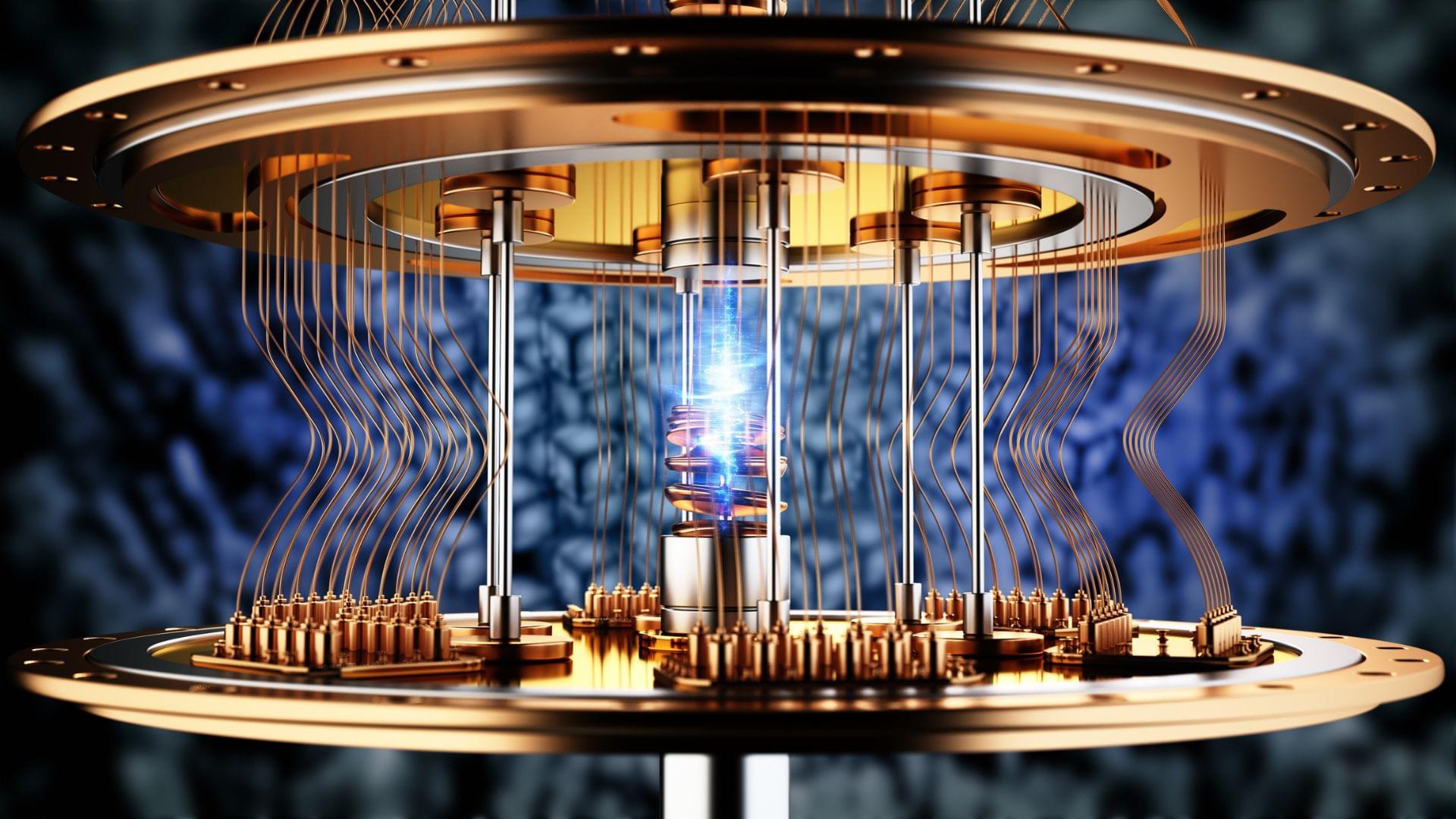

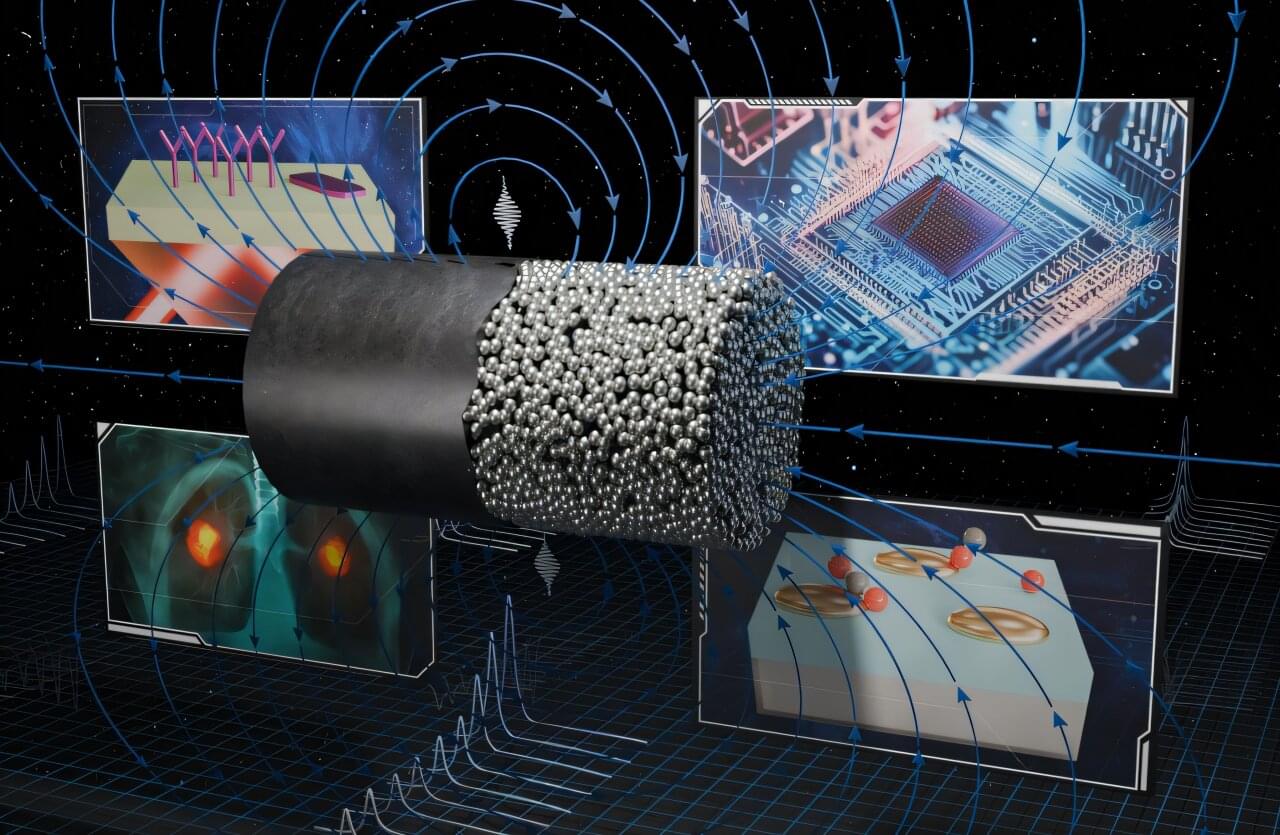

The experiment ran on a state-of-the-art 56-qubit quantum computer that the researchers accessed remotely over the internet. The machine belongs to a company that had recently made a significant upgrade to the system. The research team included experts from a large bank’s technology lab, national laboratories, and universities.

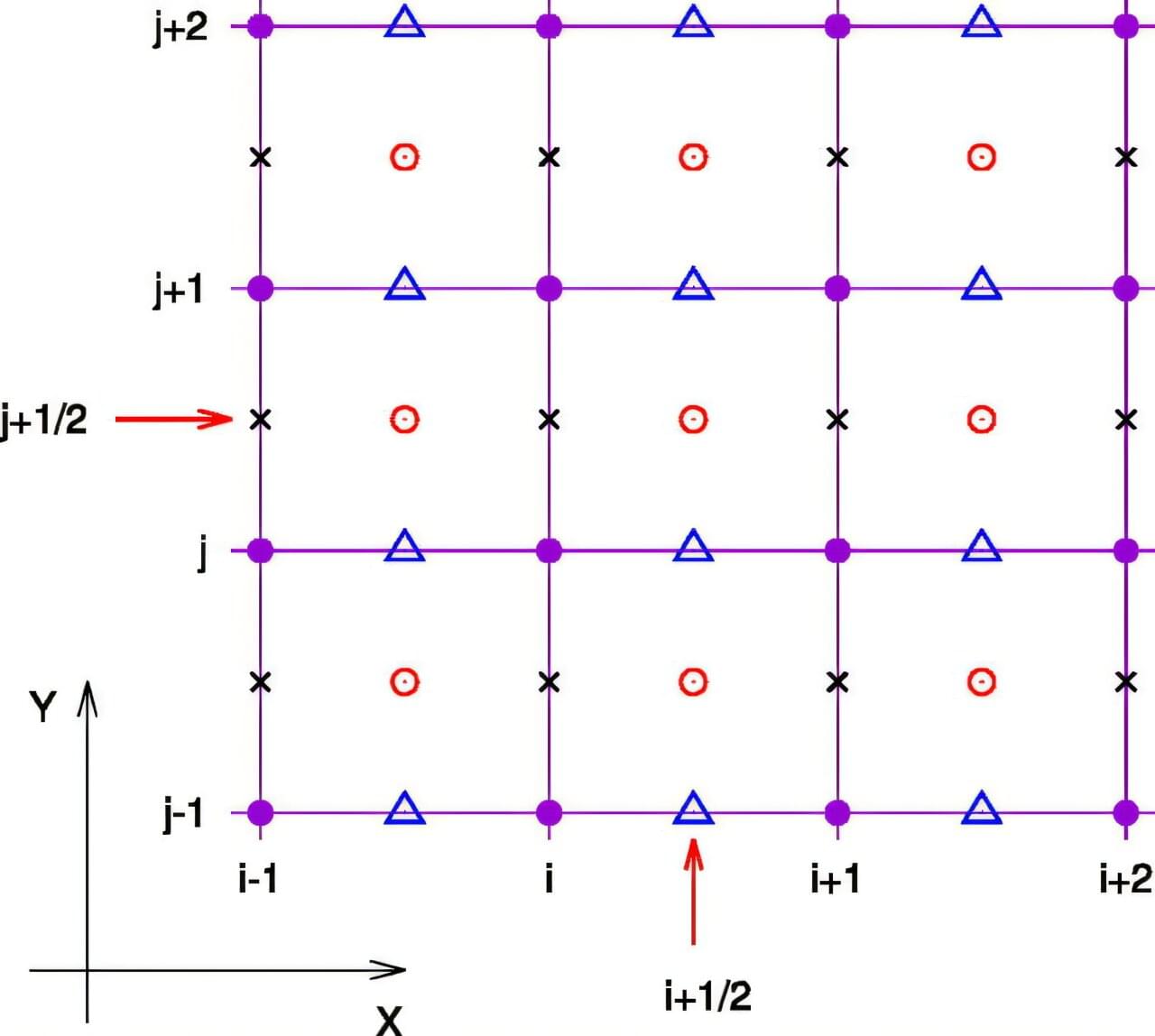

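To generate certified randomness, the team used a method called random circuit sampling, or RCS. A classical client sends the quantum computer a series of randomly generated quantum circuits, known as challenge circuits, that are extremely hard to simulate classically. For each one, the quantum computer must quickly return a sample: a string of bits drawn from that circuit’s output distribution, which is inherently unpredictable. Classical supercomputers then check a subset of the samples with a statistical test, confirming that they could only have come from a genuine quantum computation within the time allowed and therefore contain genuine randomness.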
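To make the verification step more concrete, here is a toy Python sketch of the kind of statistical test commonly used to score RCS samples, linear cross-entropy benchmarking (XEB). It is an illustration under stated assumptions, not the team’s code: a random 10-qubit state stands in for a real challenge circuit, the function names are hypothetical, and the time limit that the real protocol depends on is left out. The score is roughly 1 for bitstrings actually drawn from the circuit’s distribution and roughly 0 for bitstrings guessed uniformly at random.

```python
import math
import random


def random_state_probabilities(n_qubits, rng):
    """Hypothetical stand-in for a challenge circuit's output distribution:
    a random state built from complex Gaussian amplitudes, whose outcome
    probabilities have the Porter-Thomas shape typical of random circuits."""
    dim = 2 ** n_qubits
    amps = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(dim)]
    norm = math.sqrt(sum(abs(a) ** 2 for a in amps))
    return [abs(a / norm) ** 2 for a in amps]


def linear_xeb(samples, probs):
    """Linear XEB score: 2^n times the mean ideal probability of the
    returned bitstrings, minus 1."""
    dim = len(probs)
    return dim * sum(probs[s] for s in samples) / len(samples) - 1


rng = random.Random(2018)
n_qubits = 10
probs = random_state_probabilities(n_qubits, rng)
dim = len(probs)

# "Honest" device: draws bitstrings (encoded as integers) from the ideal distribution.
honest = rng.choices(range(dim), weights=probs, k=20000)
# "Spoofer": returns uniformly random bitstrings without any quantum computation.
spoofed = [rng.randrange(dim) for _ in range(20000)]

honest_score = linear_xeb(honest, probs)
spoofed_score = linear_xeb(spoofed, probs)
print(f"honest XEB:  {honest_score:.2f}")
print(f"spoofed XEB: {spoofed_score:.2f}")
```

In the actual experiment, the verifier computes ideal probabilities for 56-qubit circuits, which is why supercomputers are needed. A high score alone is also not enough: it matters because the samples came back faster than any classical machine could plausibly have simulated the circuits.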