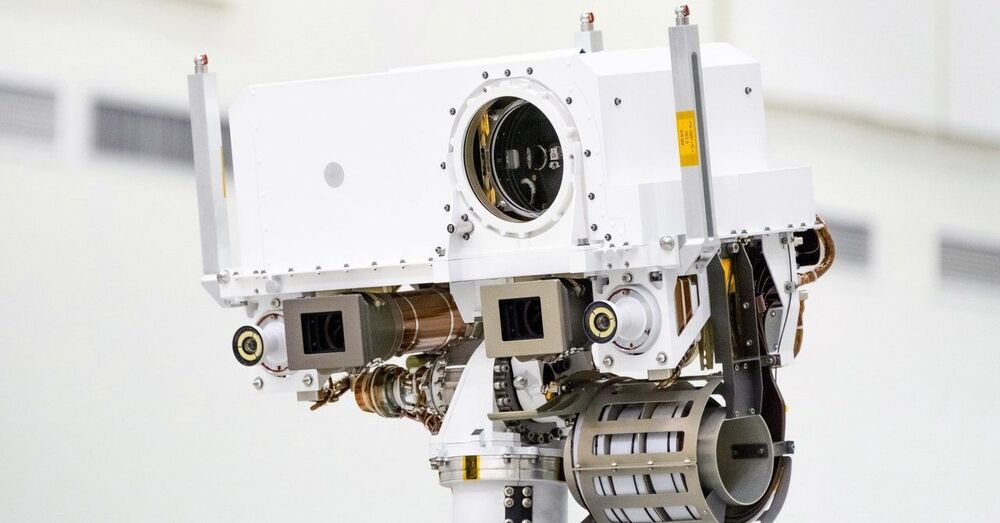

The Red Planet’s red looks different to an Earthling than it would to a Martian—or to a robot with hyperspectral cameras for eyes.

The total amount of data generated worldwide is expected to reach 175 zettabytes (1 ZB equals 1 billion terabytes) by 2025. If 175 ZB were stored on Blu-ray disks, the stack would be 23 times the distance to the moon. There is an urgent need to develop storage technologies that can accommodate this enormous amount of data.
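As a rough sanity check on those figures, here is a minimal sketch of the arithmetic. The disc capacity (25 GB, single layer), the disc thickness (1.2 mm) and the average Earth-moon distance (384,400 km) are assumptions not stated above, and the function names are purely illustrative.

```python
# Back-of-the-envelope check of the zettabyte and Blu-ray figures.
# Assumed values (not from the text): 25 GB per disc, 1.2 mm per disc,
# 384,400 km average Earth-moon distance, decimal (SI) byte units.

ZB_IN_BYTES = 10**21
TB_IN_BYTES = 10**12
GB_IN_BYTES = 10**9


def terabytes_per_zettabyte() -> int:
    """Return how many terabytes fit in one zettabyte (SI units)."""
    return ZB_IN_BYTES // TB_IN_BYTES


def bluray_stack_in_moon_distances(
    total_zb: float = 175,
    disc_capacity_gb: float = 25,      # assumed single-layer disc
    disc_thickness_mm: float = 1.2,    # assumed disc thickness
    moon_distance_km: float = 384_400, # assumed average distance
) -> float:
    """Return the height of the disc stack as a multiple of the moon distance."""
    discs = total_zb * ZB_IN_BYTES / (disc_capacity_gb * GB_IN_BYTES)
    stack_km = discs * disc_thickness_mm / 1_000_000  # mm -> km
    return stack_km / moon_distance_km


if __name__ == "__main__":
    print(f"1 ZB = {terabytes_per_zettabyte():,} TB")
    print(f"Stack height: {bluray_stack_in_moon_distances():.1f} moon distances")
```

Under these assumed disc specifications the stack comes out in the low twenties of moon distances, the same ballpark as the figure quoted above; a slightly different disc thickness or distance value shifts the result by a unit or two.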

In the absence of a TARDIS or Doc Brown’s DeLorean, how can you go back in time to see what supposedly happened when the universe exploded into being?

Call for ideas! Can your #technology be used to turn the #Moon’s resources into valuable solutions to store power, build infrastructure, grow food or enable life support? Read more and submit your idea to #ESA before 12th March 👉 https://lnkd.in/dKJ72_T

Voyager Station will be able to accommodate 400 guests, its builders say.

Orbital Assembly Corp. recently unveiled new details about its ambitious Voyager Station, which is projected to be the first commercial space station operating with artificial gravity.

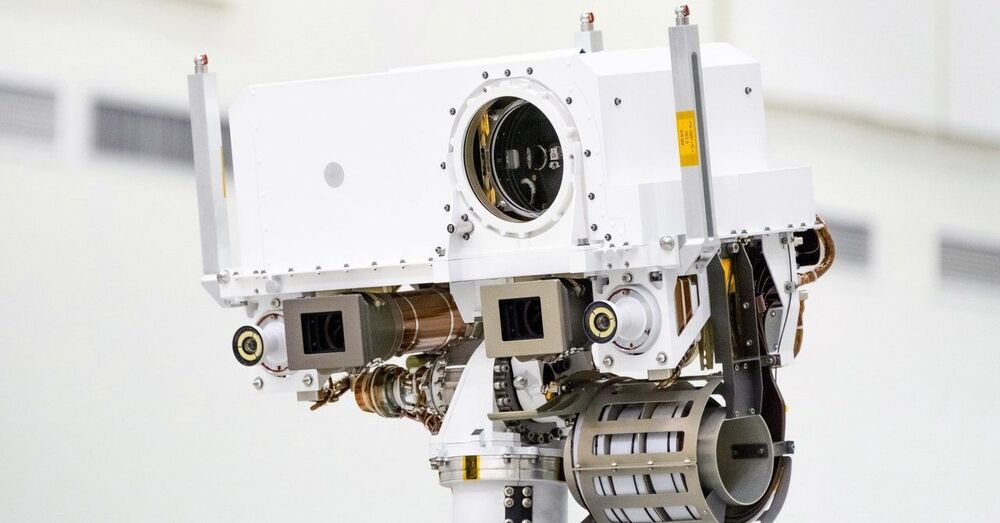

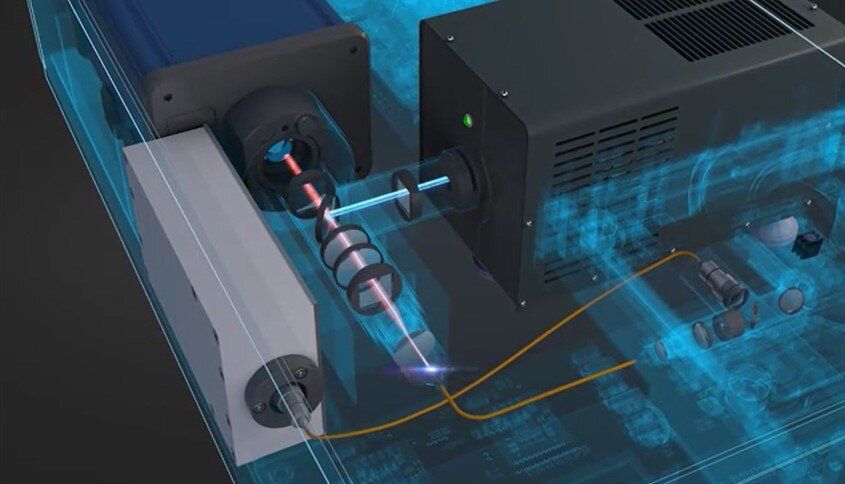

Great new episode with the details on how NASA JPL’s successful Mars rover program kept the Perseverance rover on track. JPL chief engineer Rob Manning gives us an inside look at the strategies NASA used to make sure the latest rover made a spectacular landing.

NASA’s Rob Manning, JPL’s Chief Engineer, discusses management, logistics, innovation and the future of robotic Mars exploration in this unique episode. With this week’s successful landing of the Perseverance rover on an ancient river delta, NASA ups its game at a time when the rest of the country badly needs some encouraging news. Manning talks about how JPL keeps itself on track when finessing complicated billion-dollar initiatives.

Get a guided tour of the Perseverance rover landing on the red planet from NASA Jet Propulsion Laboratory engineer Ian Clark:

A video of Martian ripples in the highest quality. All the images you’re going to see were taken by NASA rovers and orbiters at Mars and boosted in quality with AI technology.

The wind has shaped the Martian landscape for much of its history and continues to play a major role today. Here we analyze Martian ripples and the similarities they share with ripples here on Earth.

Other information that can be found in this video:

📌- Real images of Martian Ripples.

📌- The density of the Martian Atmosphere.

📌- How these ripples are created.

📌- Size of Martian Ripples.

📌- Rover’s tire tracks on Mars (image).

📌- Perseverance rover mission.

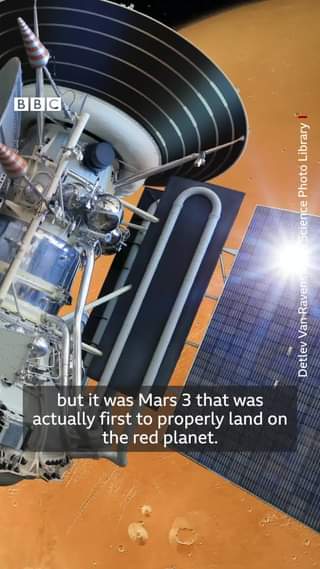

With two missions due to land on Mars in 2021, we look back at 60 years of attempts to get to the Red Planet.