Two researchers share their experiences as Hollywood consultants, keeping the science straight on the big screen.

This second of three videos describes what helps make the Assured Science System limitless, free-ranging, and self-sustaining while also producing the most robust data from every project of every researcher. The key is our novel and powerful electronic infrastructure, The Universe of Research Niches (TURN), which is constructed by extracting the cause-and-effect relationships among all technical terms in the universe of existing primary research data. Because TURN can serve as a common utility for everybody, it enables us to transform scientific research into a potent revenue-generating system.

After watching this video, please watch our last video in the series that describes how the Assured Science System works: • How you can maximize your benefits fr…

More specific details of the new system and how to join the effort will be provided personally. Please contact us at https://www.assuredscience.org/contact/

#assuredscience #scientific #science #scienceexperiment #scientificresearch #funding #turnsystem #scienceandtechnology

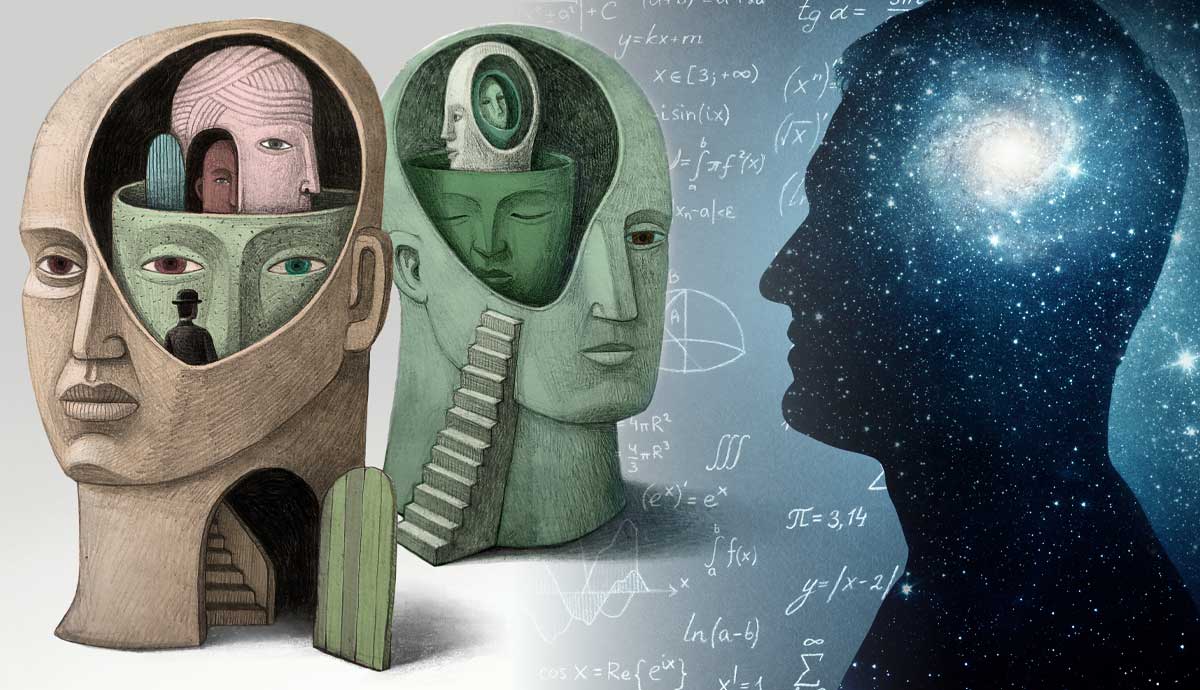

The Open Encyclopedia of Cognitive Science (OECS) is a free, online collection of multidisciplinary peer-reviewed articles on various topics in cognitive science. Officially launched last August by MIT Press, the OECS is a successor to the MIT Encyclopedia of Cognitive Science. It currently has around 80 articles, with more to come, on topics such as:

- Social Epistemology by Mandi Astola and Mark Alfano
- The Mind-Body Problem by Tim Crane
- Bodily Sensations by Frédérique de Vignemont
- Personal/Subpersonal Distinction by Zoe Drayson
- Conceptual Analysis by Frank Jackson
- Natural Kinds by Muhammad Ali Khalidi
- Cognitive Ontology by Colin Klein
- Free Will by Neil Levy
- Experimental Philosophy by Edouard Machery
- Metacognition by Joëlle Proust

…to pick just ten. The editors of OECS are Michael C. Frank of Stanford University and Asifa Majid of the University of Oxford. You can check it out here.

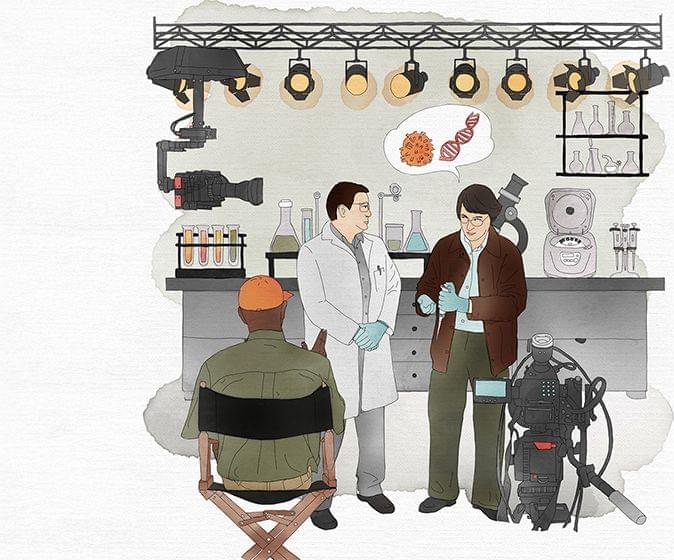

Using the latest brain preservation techniques, could we ever abolish death? And if so, should we?

Watch the Q&A here (exclusively for our Science Supporters): https://youtu.be/iSIIDJS2a2U

Buy Ariel’s book here: https://geni.us/xvi1Ivf.

This lecture was recorded at the Ri on 2 December 2024.

Just as surgeons once believed pain was good for their patients, some argue today that death brings meaning to life. But given humans rarely live beyond a century – even while certain whales can thrive for over two hundred years – it’s hard not to see our biological limits as profoundly unfair.

Yet, with ever-advancing science, will the ends of our lives always loom so close? From ventilators to brain implants, modern medicine has been blurring what it means to die. In a lucid synthesis of current neuroscientific thinking, Ariel Zeleznikow-Johnston explains that death is no longer the loss of heartbeat or breath, but of personal identity: the core of our identities is our minds, and our minds are encoded in the structure of our brains. On this basis, he explores how recently invented brain preservation techniques may offer us all the chance of preserving our minds to enable our future revival, and the ethical implications this technology could raise.

Entanglement—linking distant particles or groups of particles so that one cannot be described without the other—is at the core of the quantum revolution changing the face of modern technology.

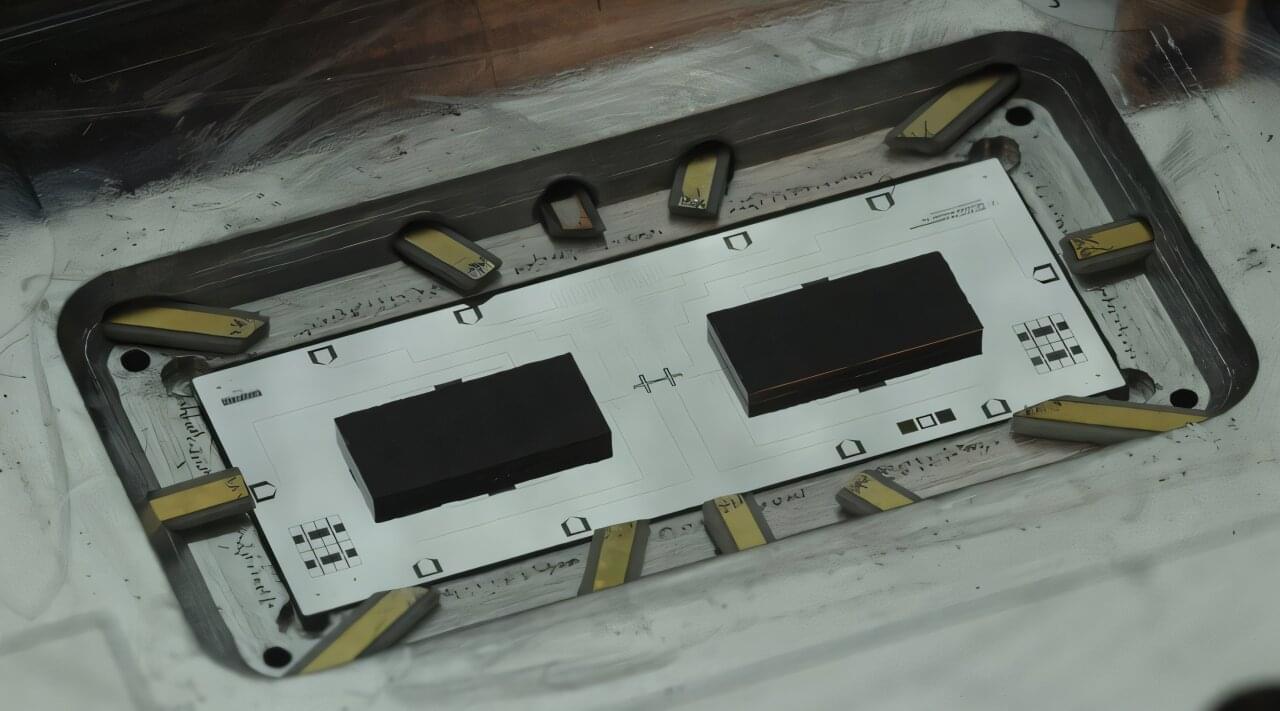

While entanglement has been demonstrated in very small particles, new research from the lab of University of Chicago Pritzker School of Molecular Engineering (UChicago PME) Prof. Andrew Cleland is thinking big, demonstrating high-fidelity entanglement between two acoustic wave resonators.

The paper is published in Nature Communications.

Main episode with Matthew Segall: https://www.youtube.com/watch?v=DeTm4fSXpbM

As a listener of TOE, you can get a special 20% discount on The Economist and all it has to offer! Visit https://www.economist.com/toe.

New Substack! Follow my personal writings and EARLY ACCESS episodes here: https://curtjaimungal.substack.com.

TOE’S TOP LINKS:

- Enjoy TOE on Spotify! https://tinyurl.com/SpotifyTOE

- Become a YouTube Member here: https://www.youtube.com/channel/UCdWIQh9DGG6uhJk8eyIFl1w/join

- Support TOE on Patreon: https://patreon.com/curtjaimungal (early access to ad-free audio episodes!)

- Twitter: https://twitter.com/TOEwithCurt.

- Discord Invite: https://discord.com/invite/kBcnfNVwqs.

- Subreddit r/TheoriesOfEverything: https://reddit.com/r/theoriesofeverything.

#science #philosophy #multiverse #wisdom