Researchers at Tsinghua University in China have developed an all-analog photoelectronic chip that combines optical and electronic computing to achieve ultrafast, highly energy-efficient computer vision processing that surpasses digital processors on both counts.

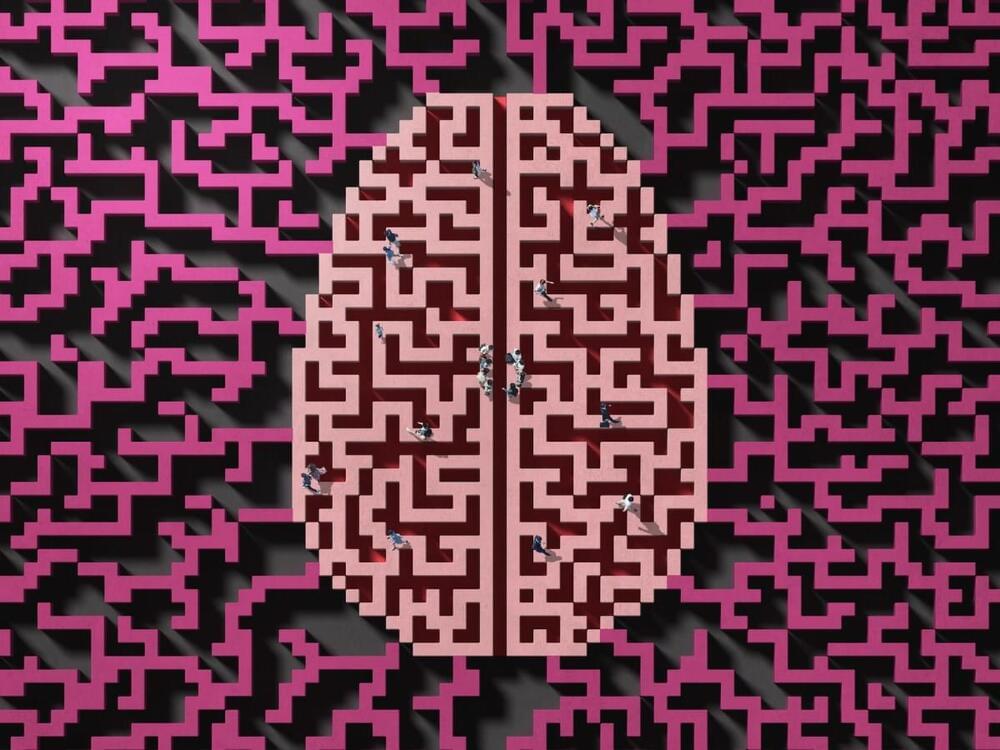

Computer vision is an ever-evolving field of artificial intelligence focused on enabling machines to interpret and understand visual information from the world, similar to how humans perceive and process images and videos.

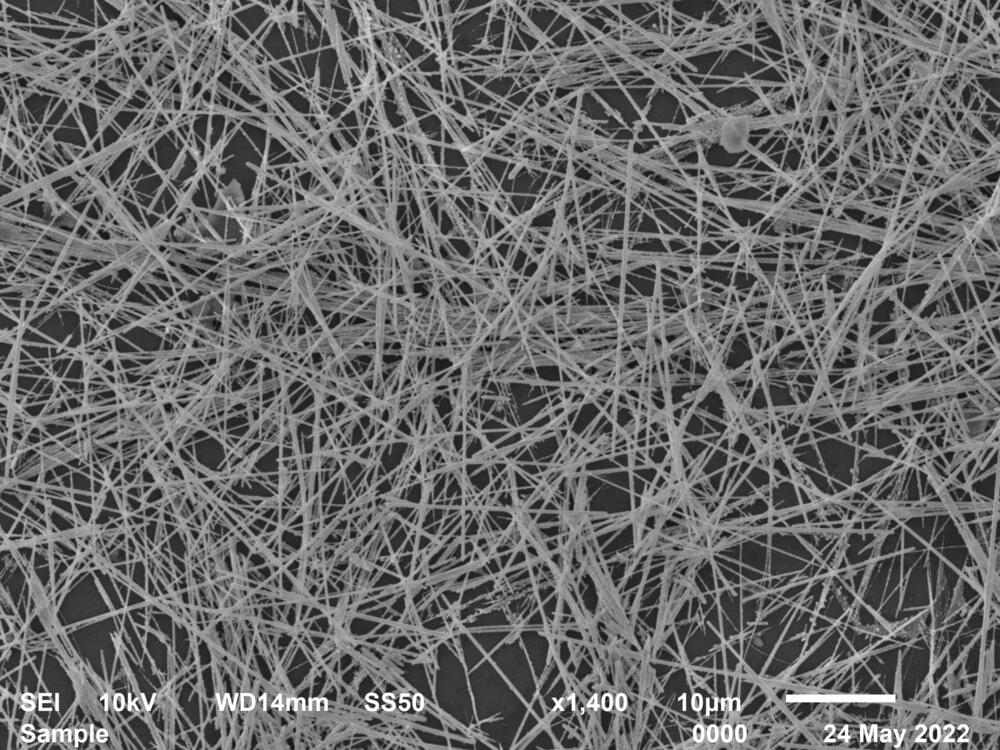

Computer vision involves tasks such as image recognition, object detection, and scene understanding. To perform them, systems convert analog signals from the environment into digital signals, which neural networks then process to make sense of the visual information. However, this analog-to-digital conversion consumes significant time and energy, limiting the speed and efficiency of practical neural network implementations.