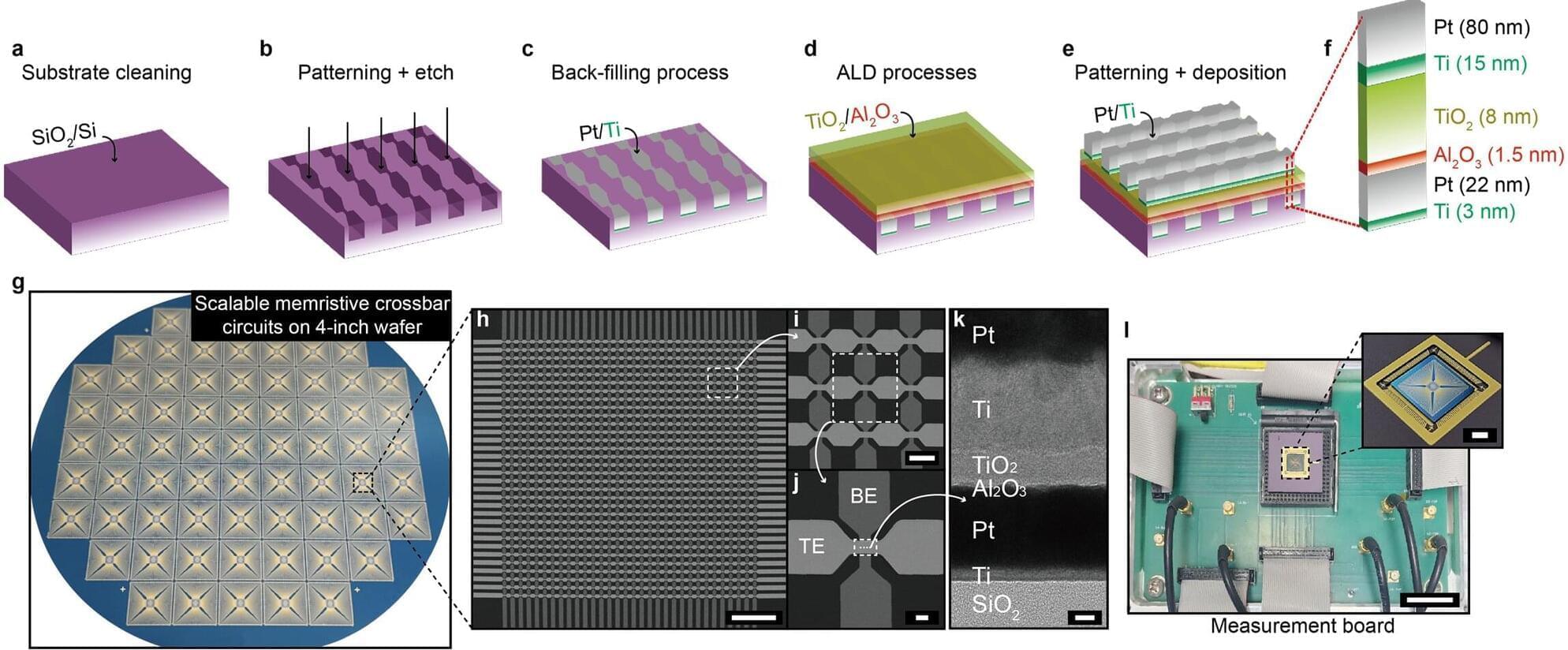

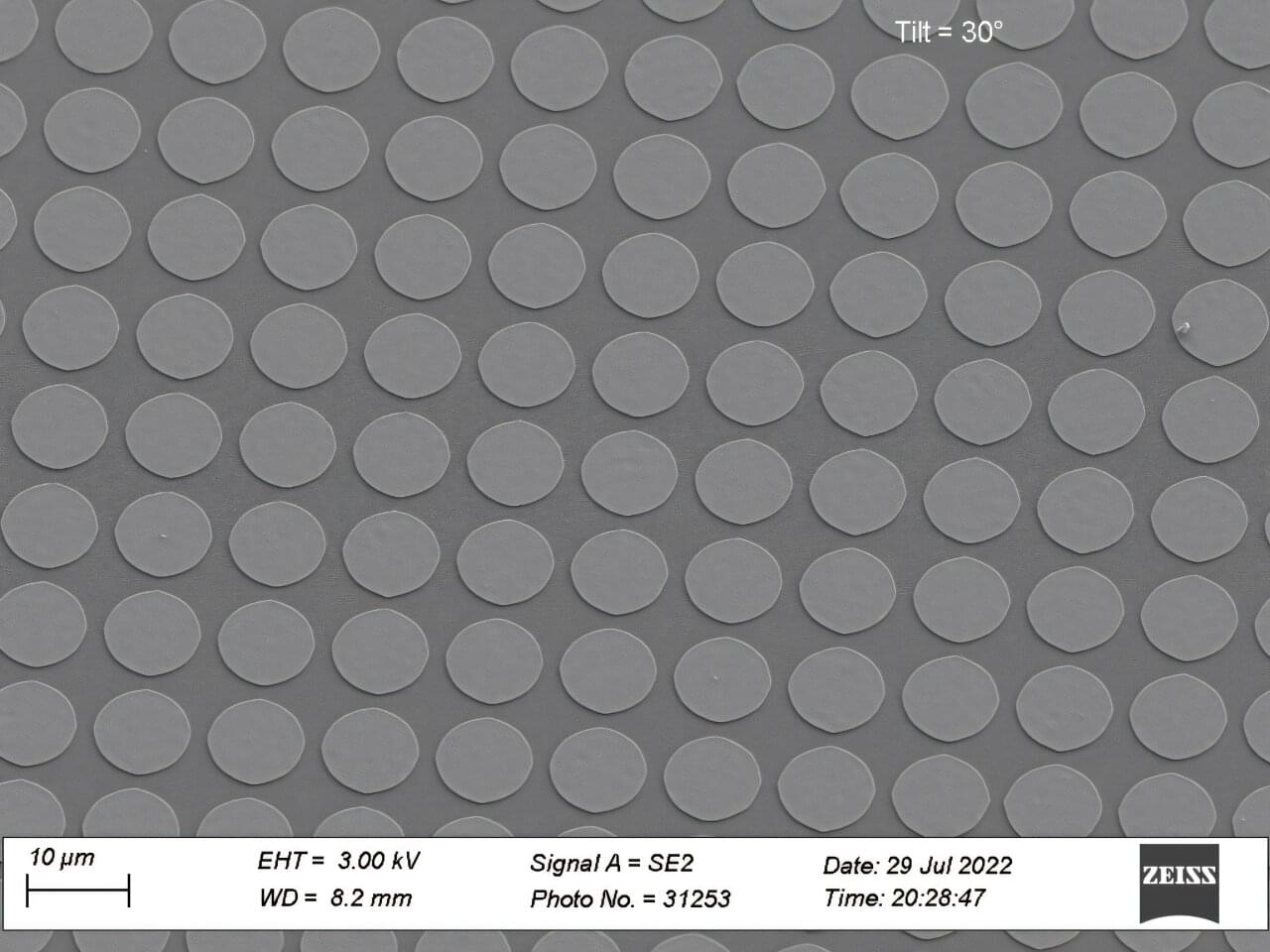

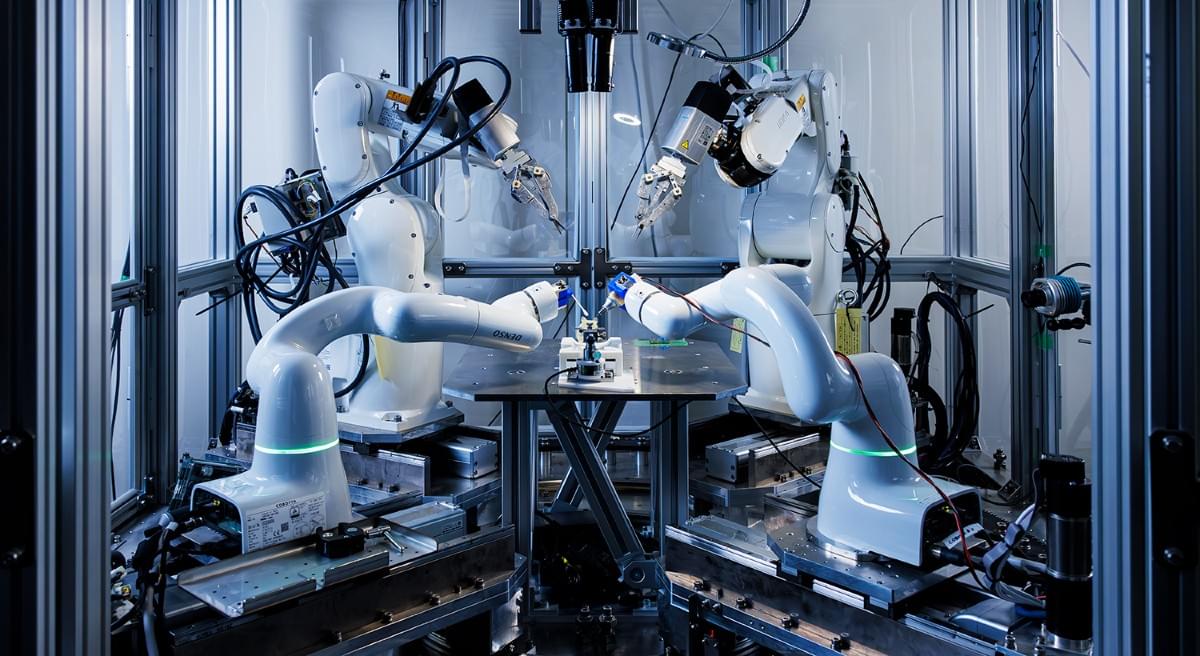

A research team led by Professor Sanghyeon Choi from the Department of Electrical Engineering and Computer Science at DGIST has successfully developed wafer-scale, mass-integrated memristors, a class of device gaining recognition as a next-generation semiconductor technology.

The study, published in the journal Nature Communications, proposes a new technological platform for implementing highly integrated AI semiconductors that replicate the human brain and overcome the limitations of conventional semiconductors.

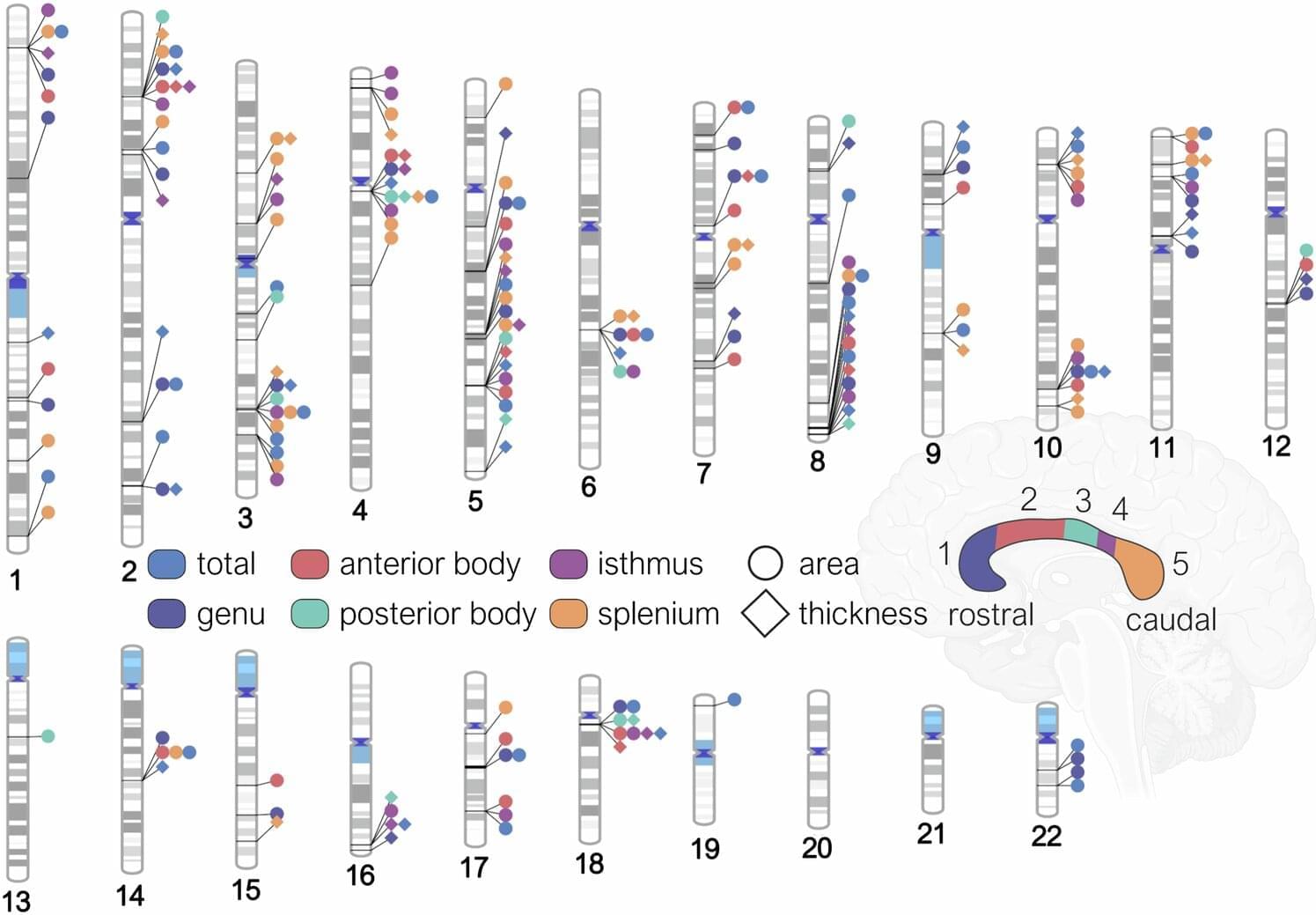

The human brain contains about 100 billion neurons and around 100 trillion synapses, allowing it to store and process enormous amounts of information within a compact space.