
Category: robotics/AI – Page 93

Microrobots overcome navigational limitations with the help of ‘artificial spacetimes’

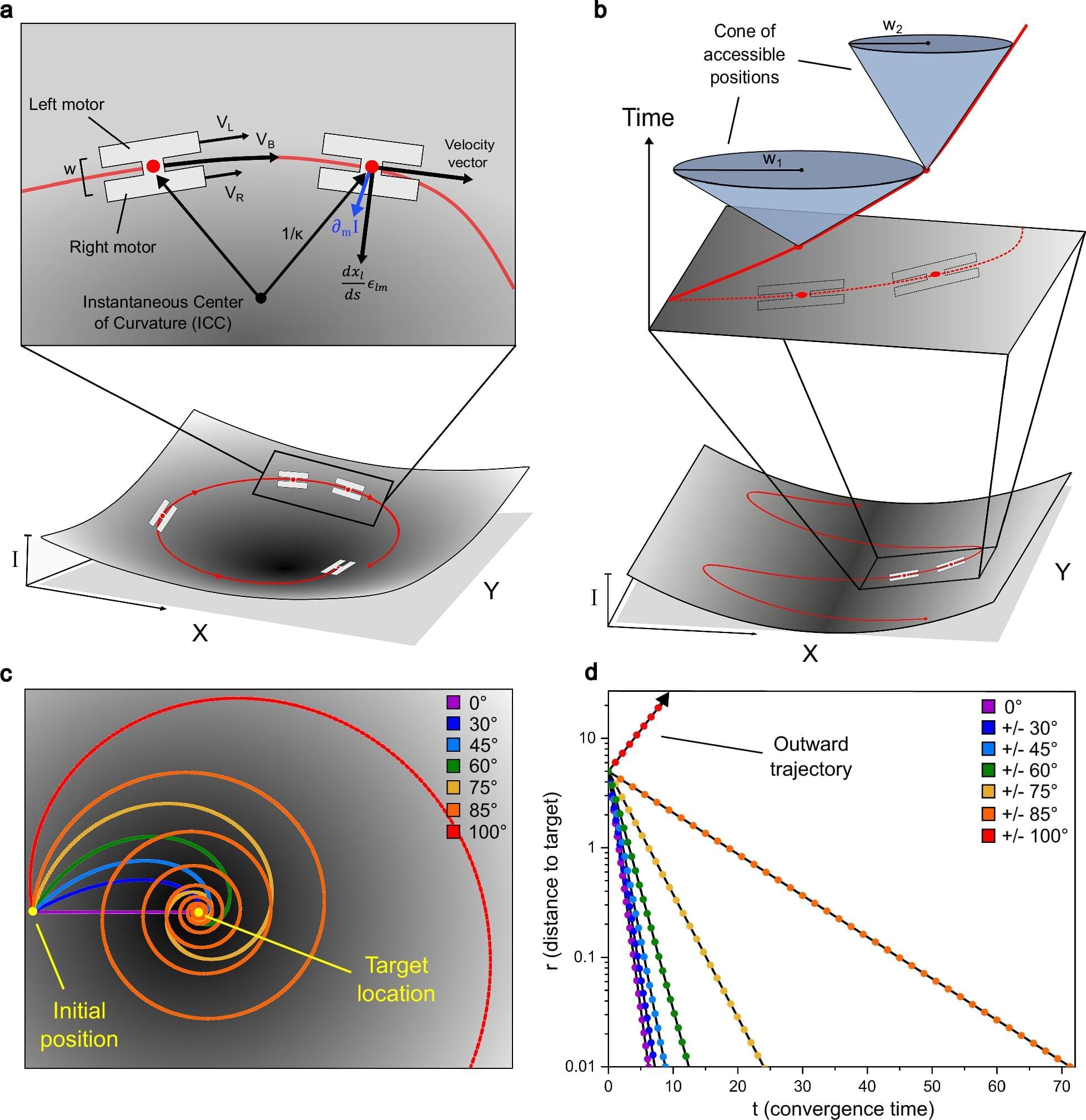

Microrobots (tiny robots less than a millimeter in size) are useful in a variety of applications that require tasks to be completed at scales far too small for other tools, such as targeted drug delivery or micro-manufacturing. However, the researchers and engineers designing these robots have run into limitations when it comes to navigation. A new study, published in Nature, details a novel solution to these limitations, and the results are promising.

The biggest problem when dealing with microrobots is the lack of space. Their tiny size limits the use of components needed for onboard computation, sensing and actuation, making traditional control methods hard to implement. As a result, microrobots can’t be as “smart” as their larger cousins.

Researchers have already tried to work around this limitation, and two methods in particular have been studied. One method of microrobot control uses external feedback from an auxiliary system, usually something like optical tweezers or electromagnetic fields. This has yielded precise and adaptable control of small numbers of microrobots, which is beneficial for complex, multi-step tasks or those requiring high accuracy, but scaling the method to control large numbers of independent microrobots has been less successful.
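The external-feedback approach is, at heart, a closed control loop: an auxiliary system observes the robot, a controller computes a correction, and the field or tweezers apply it. The toy sketch below illustrates only that loop, under loud assumptions: a single robot moving in 2D, a perfect position sensor, a simple proportional controller, and actuation that moves the robot by exactly the commanded amount. `observe`, `control`, `actuate`, and `steer` are hypothetical stand-ins, not part of any real microrobotics API and not the method of the Nature study.

```python
import math
from dataclasses import dataclass


@dataclass
class Robot:
    x: float
    y: float


def observe(robot):
    # Hypothetical stand-in for the auxiliary system (e.g. a camera over the workspace).
    # Assumes a perfect, noise-free position measurement.
    return robot.x, robot.y


def control(pos, target, gain=0.5, max_step=1.0):
    # Proportional controller: command points toward the target,
    # with its magnitude clipped to max_step (a hypothetical actuation limit).
    ux = gain * (target[0] - pos[0])
    uy = gain * (target[1] - pos[1])
    norm = math.hypot(ux, uy)
    if norm > max_step:
        ux, uy = ux * max_step / norm, uy * max_step / norm
    return ux, uy


def actuate(robot, u):
    # Hypothetical actuation: the external field displaces the robot
    # by exactly the commanded amount (no drift, no noise).
    robot.x += u[0]
    robot.y += u[1]


def steer(robot, target, tol=1e-3, max_iters=1000):
    # Repeat observe -> compute -> actuate until the robot is within tol of target.
    # Returns the number of control cycles used.
    for i in range(max_iters):
        pos = observe(robot)
        if math.hypot(pos[0] - target[0], pos[1] - target[1]) < tol:
            return i
        actuate(robot, control(pos, target))
    return max_iters


if __name__ == "__main__":
    r = Robot(0.0, 0.0)
    cycles = steer(r, (3.0, 4.0))
    print(f"reached ({r.x:.4f}, {r.y:.4f}) in {cycles} cycles")
```

In this toy model, steering many robots independently would require a separate observe-compute-actuate cycle, with separate actuation, for each one; the sketch does not attempt to model how real systems handle that.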

Lockheed Martin, Google team up on generative AI

Lockheed Martin is partnering with Google Public Sector to integrate Google’s generative AI technologies, including the Gemini models, into its AI Factory.

Google’s AI tools will be introduced within Lockheed Martin’s secure, on-premises, air-gapped environments, making them accessible to personnel throughout the company.

Google Search is now using AI to create interactive UI to answer your questions

In a move that could redefine the web, Google is testing AI-powered, UI-based answers in AI Mode.

Until now, Google AI Mode, an optional feature, has let you interact with a large language model using text or images.

When you use AI Mode, Google responds with AI-generated content, scraped from websites without their permission, along with a couple of links.

New therapeutic brain implants could defy the need for surgery

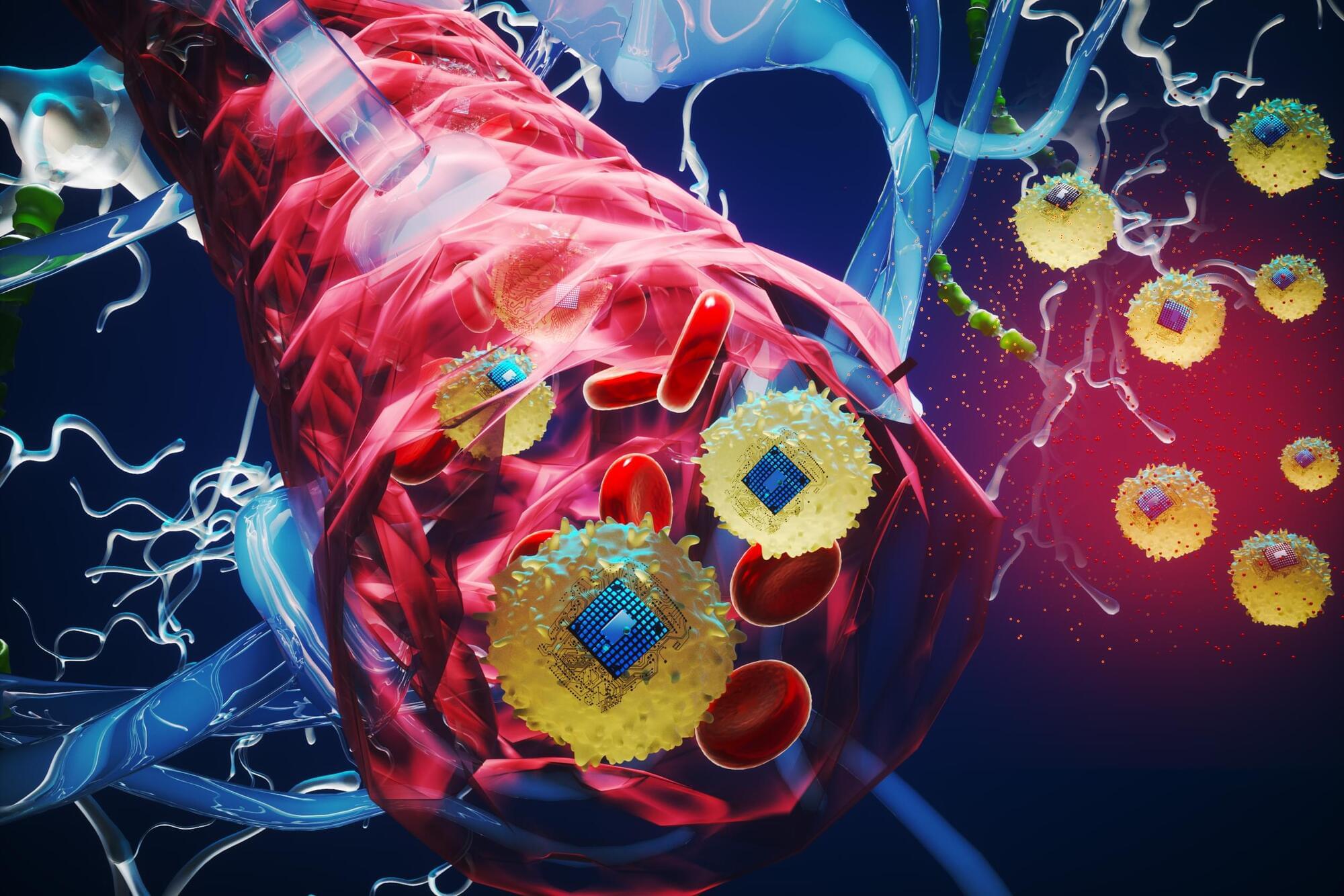

Microscopic bioelectronic devices could one day travel through the body’s circulatory system and autonomously self-implant in a target region of the brain. These “circulatronics” can be wirelessly powered to provide focused electrical stimulation to a precise region of the brain, which could be used to treat diseases like Alzheimer’s, multiple sclerosis, and cancer.

New ‘Transformer’ humanoid robot can launch a shapeshifting drone off its back — watch it in action

Developed at Caltech, the new humanoid robot can launch an M4 drone, a shapeshifting robot whose wheels can turn into rotors so that it can switch between different modes of motion.

Grigory Tikhomirov | DNA-Based Molecular Manufacturing for Biotech and Electronics

*This video was recorded at ‘Paths to Progress’ at LabWeek hosted by Protocol Labs & Foresight Institute.*

Protocol Labs and Foresight Institute are excited to invite you to apply to a 5-day mini-workshop series celebrating LabWeek, PL's decentralized conference to further public goods. The theme of the series, Paths to Progress, is aimed at (re)igniting long-overdue progress in longevity bio, molecular nanotechnology, neurotechnology, crypto & AI, and space through emerging decentralized, open, and technology-enabled funding mechanisms.

*This mini-workshop is focused on Paths to Progress in Molecular Nanotechnology*

Molecular manufacturing, in its most ambitious incarnation, would use programmable tools to bring molecules together into precisely bonded components and build larger structures from the ground up. This would enable general-purpose manufacturing of new materials and machines at a fraction of current waste and cost. We are currently nowhere near this ambitious goal. However, recent progress in sub-fields such as DNA nanotechnology, protein engineering, scanning tunneling microscopy (STM), and atomic force microscopy (AFM) provides possible building blocks for a v1 of molecular manufacturing: the molecular 3D printer. Let's explore the state of the art and what types of innovation mechanisms could bridge the valley of death. How might we update the original nanotech roadmap? Is a tech tree enough? How might we fund the highly interdisciplinary progress needed to succeed: a focused research organization (FRO) or a decentralized autonomous organization (DAO)?

*About The Foresight Institute*

The Foresight Institute is a research organization and non-profit that supports the beneficial development of high-impact technologies. Since our founding in 1986 on a vision of guiding powerful technologies, we have evolved into a many-armed organization that focuses on several fields of science and technology that are too ambitious for legacy institutions to support. From molecular nanotechnology to brain-computer interfaces, space exploration, cryptocommerce, and AI, Foresight gathers leading minds to advance research and accelerate progress toward flourishing futures.

*We are entirely funded by your donations. If you enjoy what we do please consider donating through our donation page:* https://foresight.org/donate/

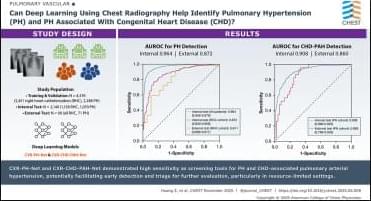

Deep Learning-Enhanced Noninvasive Detection of Pulmonary Hypertension and Subtypes via Chest Radiographs, Validated by Catheterization

The central clock drives metabolic rhythms in muscle stem cells

Sica et al. show that the circadian clock in the brain controls daily rhythms in muscle stem cells. These rhythms affect stem cell metabolism and repair capacity, even in the absence of a local clock. The findings reveal how central signals shape tissue-specific stem cell functions through systemic cues like feeding.