Elon Musk’s sometimes spicy rival to OpenAI’s ChatGPT is trained on X posts, and can speak with a sense of humor.

Juan Bernabé-Moreno is IBM’s director of research for Ireland and the United Kingdom. The Spanish computer scientist is also responsible for IBM’s climate and sustainability strategy, which is being developed by seven global laboratories using artificial intelligence (AI) and quantum computing. He believes quantum computing is better suited to understanding nature and matter than classical or traditional computers.

Question. Is artificial intelligence a threat to humanity?

Answer. Artificial intelligence can be used to cause harm, but it’s crucial to distinguish between the intentional, malicious use of AI and unintended behavior caused by a lack of data control or governance rigor.

Electronics that mimic the treelike branches forming the networks that neurons use to communicate with one another could lead to artificial intelligence that no longer requires the megawatts of power available in the cloud. Instead, AI could run on the watts that can be drawn from a smartphone battery, a new study suggests.

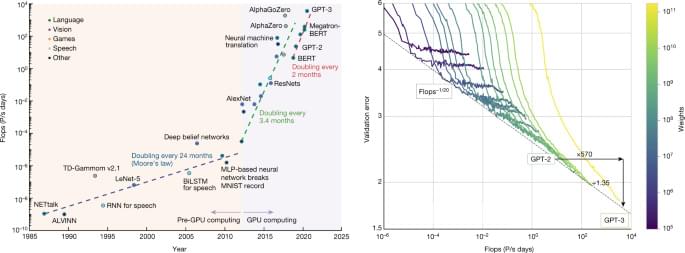

As the brain-imitating AI systems known as neural networks grow in size and power, they are becoming more expensive and energy-hungry. For instance, to train its state-of-the-art neural network GPT-3, OpenAI spent US $4.6 million to run 9,200 GPUs for two weeks. Generating the energy that GPT-3 consumed during training released as much carbon as 1,300 cars would have spewed from their tailpipes over the same time, says study author Kwabena Boahen, a neuromorphic engineer at Stanford University, in California.

Now Boahen proposes a way for AI systems to boost the amount of information conveyed in each signal they transmit. This could reduce both the energy and space they currently demand, he says.

Summary: A recent study highlights the cognitive benefits of Interlingual Respeaking (IRSP), where language professionals collaborate with speech recognition software to create live subtitles in another language. This process, which combines simultaneous translation with the addition of punctuation and content labels, was the focus of a 25-hour upskilling course involving 51 language professionals.

The course showed significant improvements in executive functioning and working memory. Researchers emphasize that such training not only enhances cognitive abilities but also equips language professionals for the rapidly evolving AI-driven language industry.

Deep learning AI models could be used to screen for autism and check on the severity of the condition, according to new research – and all the AI might need is a photo of the subject’s retina.

Previous studies have linked changes in retinal nerves to altered brain structures, and those alterations in turn to Autism Spectrum Disorder (ASD). The evidence suggests the eye really is a window to the brain, via the interconnectedness of the central nervous system.

“Individuals with ASD have structural retinal changes that potentially reflect brain alterations, including visual pathway abnormalities through embryonic and anatomic connections,” researchers write in their new paper.

A system that records the brain’s electrical activity through the scalp can turn thoughts into words with help from a large language model – but the results are far from perfect.