I don't know about sleep weapons; it's probably possible. More concerning to me: I read a paper 20+ years back about cell towers and cell phone frequencies as a possible tool for mind control, somehow connected to the frequencies of the human brain.

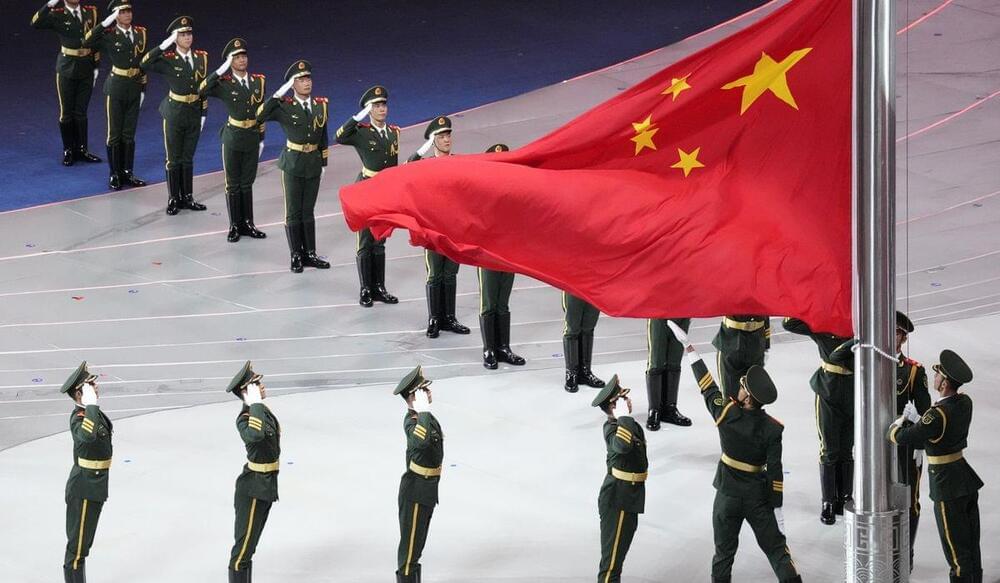

China’s military is developing advanced psychological warfare and brain-influencing weapons as part of a new warfighting strategy, according to a report on People’s Liberation Army cognitive warfare.

The report, “Warfare in the Cognitive Age: NeuroStrike and the PLA’s Advanced Psychological Weapons and Tactics,” was published earlier this month by The CCP Biothreats Initiative, a research group.

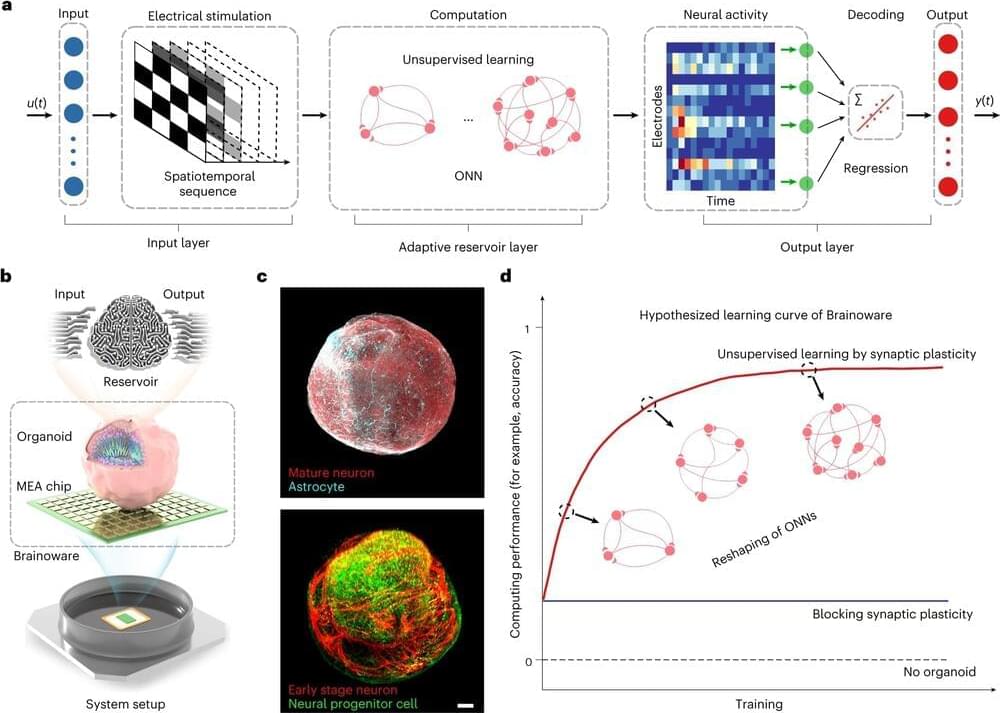

“The PLA is at the forefront of incorporating advanced technologies such as artificial intelligence, brain-computer interfaces and novel biological weapons into its military strategies,” the think tank’s analysts concluded.