One group, A.I. and Faith, convenes tech executives to discuss important questions about faith’s contributions to artificial intelligence. Its founder, David Brenner, explained, “The biggest questions in life are the questions that A.I. is posing, but it’s doing it mostly in isolation from the people who’ve been asking those questions for 4,000 years.” Questions such as “what is the purpose of life?” have long been tackled by religious philosophy and thought. And yet these questions are still being answered, and the answers programmed, by secular thinkers, and sometimes by those antagonistic toward religion. Technology creators, innovators, and corporations should build accessible coalitions of diverse thinkers so that religious thought informs technological development, including artificial intelligence.

Independent of new development, faith leaders have a critical role to play in moral accountability and in upholding human rights through the technology we already use in everyday life, including social media. The harms of religious illiteracy, misinformation, and persecution are largely perpetrated through existing technology: hate speech on Facebook, for example, quickly escalated to mass atrocities against the Rohingya Muslims in Myanmar. Individuals who have faith in the future must take an active role in combating misinformation, hate speech, and online bullying of any group.

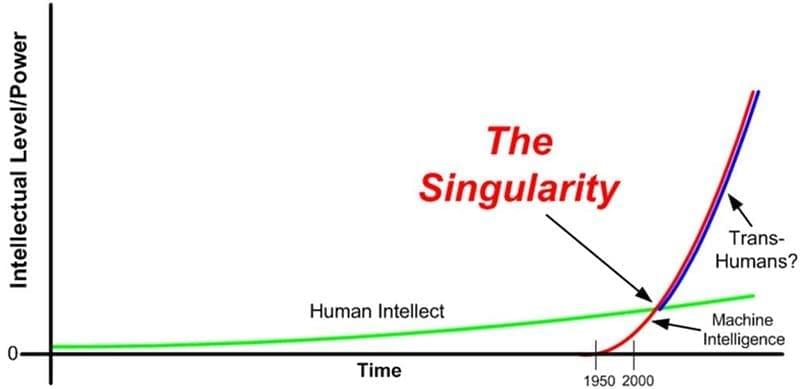

The future of artificial intelligence will require spiritual intelligence, or “the human capacity to ask questions about the ultimate meaning of life and the integrated relationship between us and the world in which we live.” Artificial intelligence becomes a threat to humanity when humans fail to protect freedom of conscience, thought, and religion and when we allow our spiritual intelligence to be superseded by the artificial.