The robot has eight adjustable water jets in its central and head regions. Its flexible firehose is steered by a control unit on a wheeled cart that trails behind.

The nozzles discharge water at a rate of 6.6 liters per second, at pressures of up to one megapascal. A conventional camera and a thermal imaging camera are mounted together at the tip of the hose, helping to identify and locate the fire. According to the team, this combination enhances the Dragon Firefighter’s firefighting capabilities.

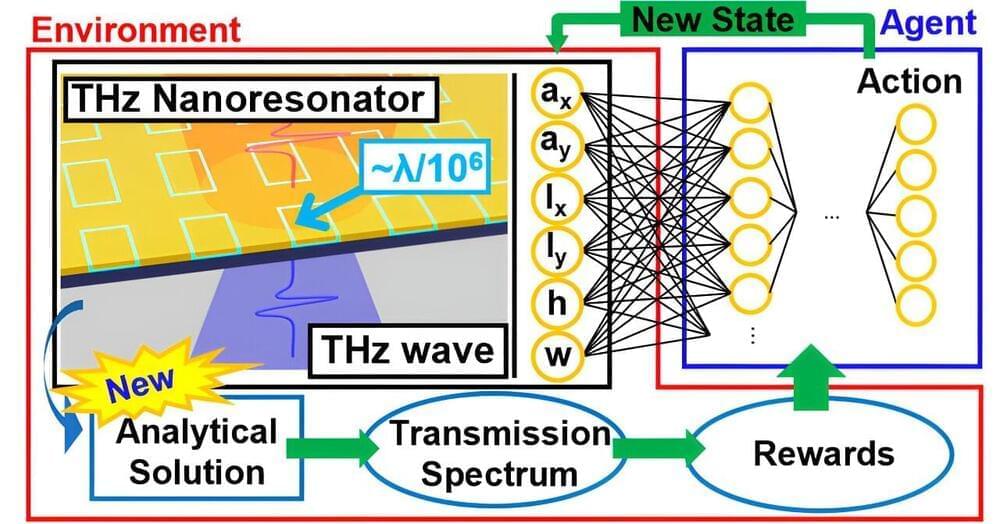

Learning process

The system was first tested at the opening ceremony of the World Robot Summit 2020 (WRS2020), held in September 2021 in Fukushima. Dragon Firefighter “successfully extinguished [49 min 0 s to 51 min 0 s] the ceremonial flame, consisting of fireballs lit by another robot, at a distance of four meters,” according to a statement.