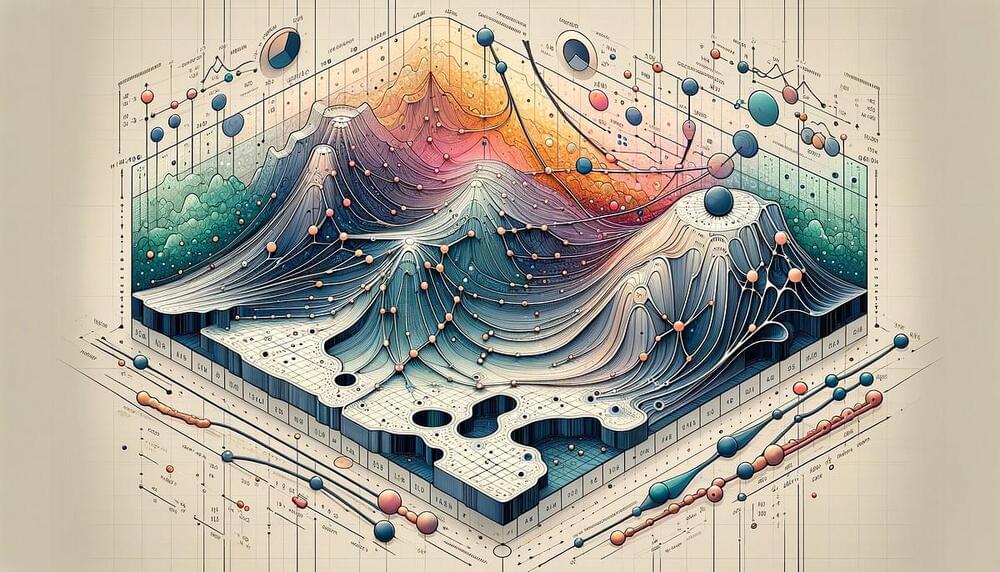

Deloitte’s Global Generative AI Innovation Leader Nitin Mittal and Tomorrow CEO Mike Walsh explore the Fifth Industrial Revolution, in which the catalyst for societal transformation is the augmentation and expansion of human intelligence.

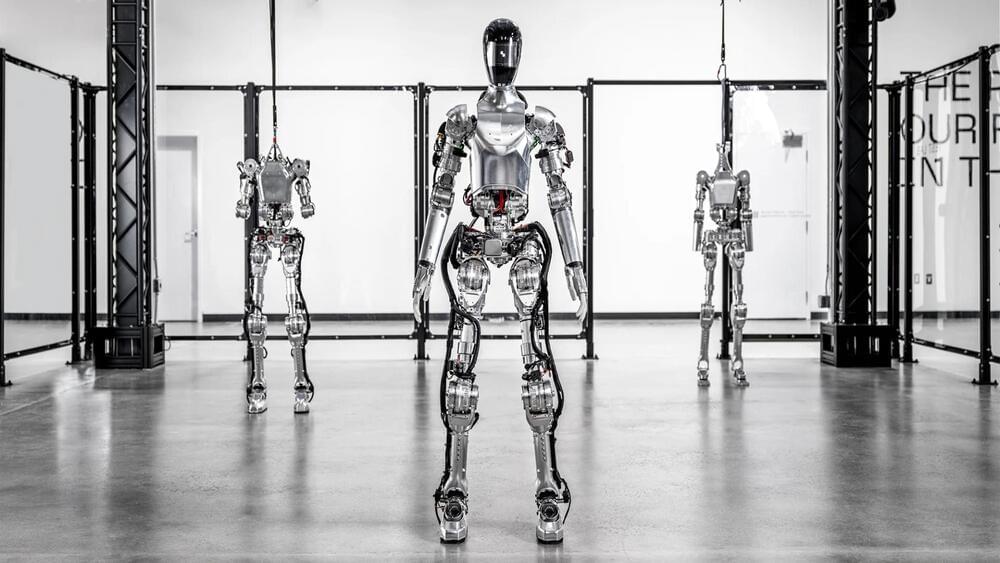

Given how recent the Fourth Industrial Revolution is, it might come as a surprise that we are on the verge of an entirely new one. Rapid progress in computation, connectivity, and artificial intelligence (AI), accelerated by the COVID-19 pandemic, has brought forward the timeline for transformation. While prior industrial revolutions were premised on gains in operational efficiency, the next revolution will be powered by minds, not just machines: its catalyst for societal transformation is the augmentation and expansion of human intelligence.