I think it’s finally time to change the N in FAANG to Nvidia, rather than Netflix.

Futuristic advancements in AI and healthcare stole the limelight at the tech extravaganza Consumer Electronics Show (CES) 2024. However, battery technology is the game-changer at the heart of these innovations, enabling greater power efficiency. Importantly, electric vehicles are where this technology is being applied most intensely. Today’s EVs can travel around 700km on a single charge, while researchers are aiming for a 1,000km battery range. Researchers are fervently exploring the use of silicon, known for its high storage capacity, as the anode material in lithium-ion batteries for EVs. However, despite its potential, bringing silicon into practical use remains a puzzle that researchers are still working hard to piece together.

Enter Professor Soojin Park, PhD candidate Minjun Je, and Dr. Hye Bin Son from the Department of Chemistry at Pohang University of Science and Technology (POSTECH). They have cracked the code, developing an affordable and stable next-generation high-energy-density Li-ion battery system using micro silicon particles and gel polymer electrolytes. The work was published online in Advanced Science on January 17.

Employing silicon as a battery material presents challenges: it expands by more than three times during charging and then contracts back to its original size while discharging, significantly impacting battery efficiency. Using nano-sized silicon (10⁻⁹ m) partially addresses the issue, but the production process is complex and very expensive. By contrast, micro-sized silicon (10⁻⁶ m) is far more practical in terms of cost and energy density. Yet the expansion issue becomes more pronounced with the larger silicon particles during battery operation, limiting their use as an anode material.

Tech giant Google has finally unveiled its much-hyped Gemini AI, a series of generative AI models it claims are its “largest and most capable” to date.

“This new era of models represents one of the biggest science and engineering efforts we’ve undertaken as a company,” said Google CEO Sundar Pichai.

Multimodal AI: Generative AIs are algorithms trained to create original content in response to user prompts. OpenAI’s first iteration of ChatGPT, for example, can understand and produce human-like text, while its DALL-E 2 system can generate images based on text prompts.

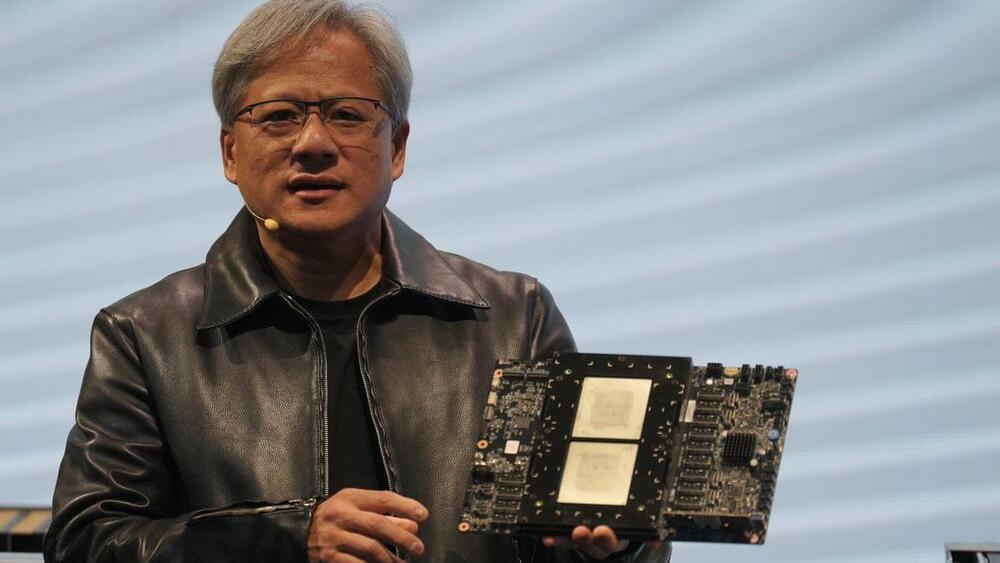

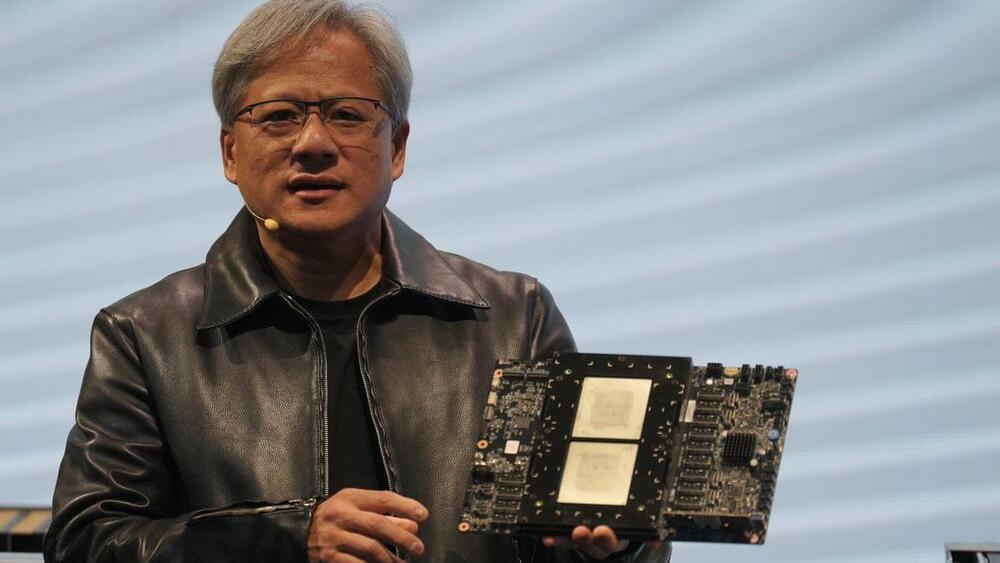

Speaker: Dr. Zhongrui Wang

Abstract: The rapid development in the field of artificial intelligence has relied principally on the advances in computing hardwar…

Summary: Researchers developed an innovative AI tool, DeepGO-SE, that excels in predicting the functions of unknown proteins, marking a significant advance in bioinformatics. Leveraging large language models and logical entailment, this tool can deduce molecular functions even for proteins without existing database matches, offering a groundbreaking approach to understanding cellular mechanisms.

Its precision has placed DeepGO-SE among the top algorithms in an international function prediction competition, demonstrating its potential in drug discovery, metabolic pathway analysis, and beyond. The team aims to apply this tool to explore proteins in extreme environments, opening new doors for biotechnological advancements.

This plenary speech was delivered by Prof. Daniele Ielmini (Politecnico di Milano) at the first edition of the Artificial Intelligence International Conference, held in Barcelona on November 21–23, 2018.

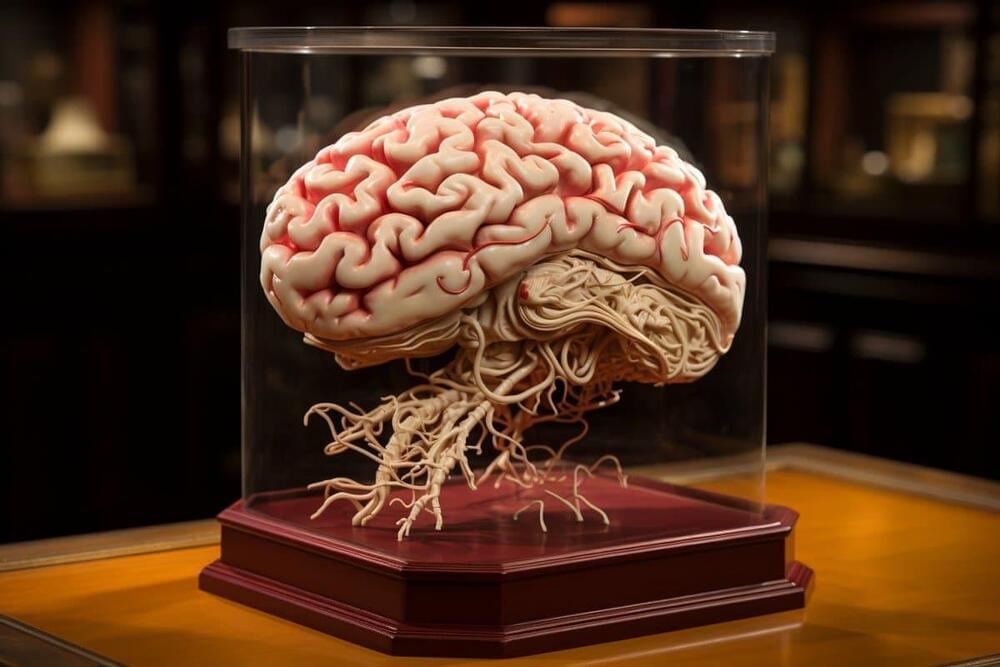

Summary: Researchers developed 20 novel recombinant rabies viral vectors that present unparalleled advantages for neural circuit mapping in aging and Alzheimer’s disease studies. These vectors are engineered to highlight microstructural changes in brain neurons through enhanced fluorescent proteins, offering insights into neural networks at both micro and macro scales.

The vectors’ unique ability to target specific neuron components and perform live imaging makes them potent tools for dissecting neural circuitry in healthy and diseased states. This innovation opens new pathways for targeted treatment strategies and will be shared with the neuroscience community through UCI’s Center for Neural Circuit Mapping.