Year 2021

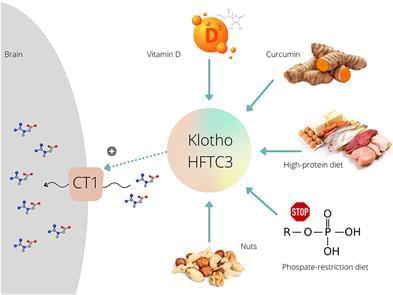

Creatine plays a pivotal role in cellular bioenergetics, acting as a temporal and spatial energy buffer in cells with high and fluctuating energy requirements (1). Disrupting the delicate creatine homeostasis can be detrimental to many energy-demanding tissues, including the brain. For instance, cerebral creatine hypometabolism accompanies various neurological conditions, including a number of developmental disorders (2, 3), neurodegenerative and cerebrovascular diseases (4, 5), and brain cancer (6). Reduced creatine availability in the brain has thus been recognized as an apposite therapeutic target, and supplying exogenous creatine to compensate for a disease-driven shortfall emerged as a first possible approach. However, early success in animal models of neurological diseases was not corroborated in human trials, with creatine supplementation proving largely disappointing in clinical studies of a number of symptomatic neurological disorders [for a detailed review, see (7)]. The meager delivery of creatine to the brain could be partly due to a low activity/density of the creatine transporter (CT1 or SLC6A8), a transmembrane sodium- and chloride-dependent protein that mediates creatine uptake into target cells (8). For that reason, the upregulation of CT1 function has been identified as an innovative course of action to facilitate creatine uptake, with several exotic agents and routes cataloged so far, including glucocorticoid-regulated kinases, mammalian target of rapamycin, ammonia, and Klotho protein (9).

Among other candidates, Klotho protein (Clotho; HFTC3) has been put forward as a possible stimulator of CT1 function that can enhance creatine delivery to target tissues. This membrane-bound pleiotropic enzyme, which also exists in a circulating form, participates in many metabolic pathways, including calcium-phosphate metabolism, nutrient sensing, and remyelination (10). Klotho is highly expressed in neuronal cells of the cerebral cortex, cerebellum, and spinal cord (11). The role of Klotho in modulating high-energy phosphate metabolism was revealed a few years ago when Amilaji et al. (12) found that the co-expression of Klotho protein increases the creatine-induced current in CT1-expressing cells. The authors reported that the current through CT1 was a function of the extracellular creatine level, and that the maximal creatine-induced current was higher in cells expressing CT1 together with Klotho than in cells expressing CT1 alone (29.5 vs. 20.2 nA).
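
To put the two reported maximal currents in proportion, the relative difference can be worked out directly from the values quoted above. This is a back-of-the-envelope illustration rather than a figure stated by the cited authors, and the symbols $I_{\max}^{\text{CT1+Klotho}}$ and $I_{\max}^{\text{CT1}}$ are labels introduced here only for convenience:

$$
\frac{I_{\max}^{\text{CT1+Klotho}} - I_{\max}^{\text{CT1}}}{I_{\max}^{\text{CT1}}}
= \frac{29.5\ \text{nA} - 20.2\ \text{nA}}{20.2\ \text{nA}}
= \frac{9.3}{20.2}
\approx 0.46
$$

That is, under these numbers, the maximal creatine-induced current was roughly 46% higher when Klotho was co-expressed with CT1.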