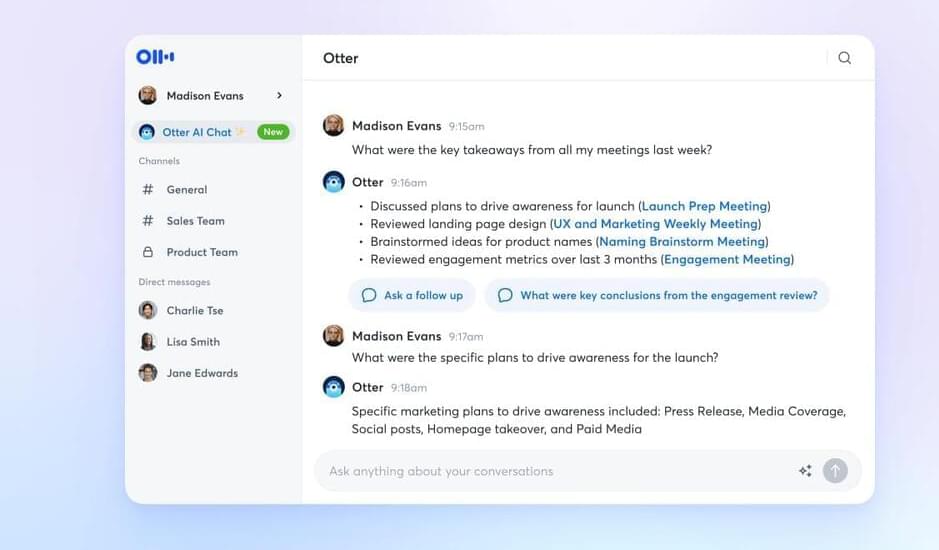

Otter, the AI-powered meeting assistant that transcribes audio in real time, is adding another layer of AI to its product with today’s introduction of Meeting GenAI, a new set of AI tools for meetings. Meeting GenAI includes three features: an AI chatbot you can query for information about past meetings you’ve recorded with Otter, an AI chat feature that teams can use together, and an AI conversation summary that gives an overview of the meeting, so you don’t have to read the full transcript to catch up.

Although journalists and students may use Otter to record things like interviews or lectures, Otter’s new AI features are aimed more at people who use the meeting helper in a corporate environment. The company envisions the new tools as a complement to, or a replacement for, the AI features offered by other services, such as Microsoft Copilot, Zoom AI Companion and Google Duet.

According to Otter CEO Sam Liang, the idea for the new AI tools was inspired by his own busy schedule.