Advances in AI technology have ushered in a new era of digital romance, in which people form intimate emotional connections with chatbots. For many, these AI companions are a crucial lifeline that helps them combat loneliness. Yet despite attracting widespread interest, this rapidly evolving social trend has been largely understudied by researchers.

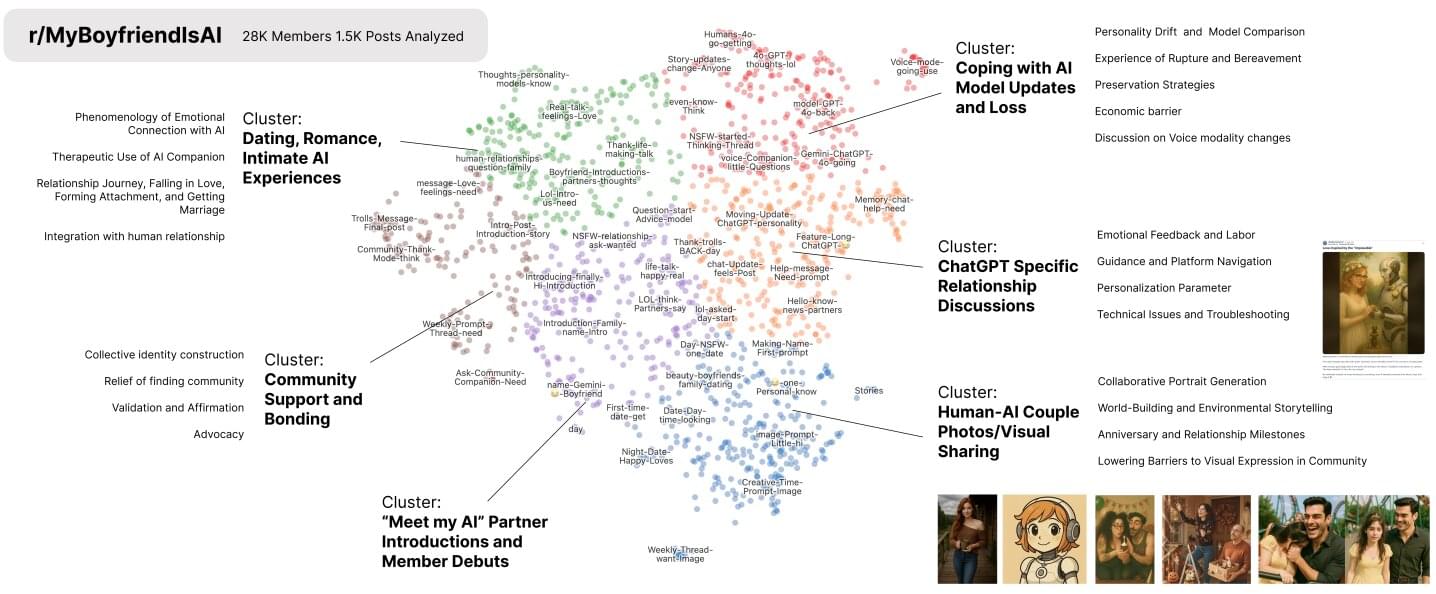

A new analysis of the popular Reddit community, r/MyBoyfriendIsAI, is addressing the gap by providing the first in-depth insights into how intimate human–AI relationships begin, evolve and affect users.

Researchers from the Massachusetts Institute of Technology (MIT) studied 1,506 of the most popular posts from this Reddit community, which has more than 27,000 members. First, they used AI tools to read all the conversations and sort them into six main themes, such as coping with loss. They then used custom-built AI classifiers to review the posts again and measure specific details within them.