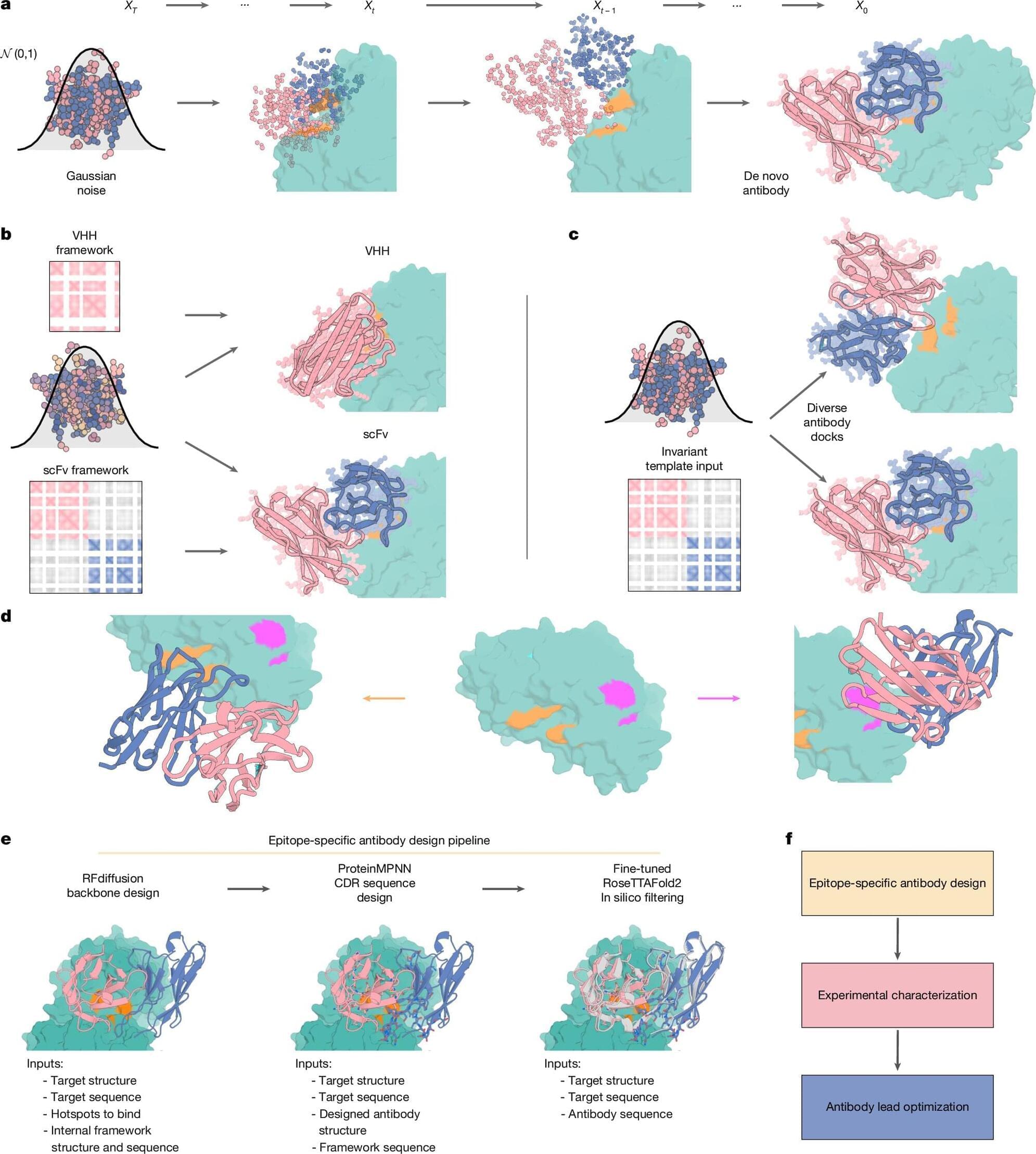

Research led by the University of Washington describes an AI-guided method for designing epitope-specific antibodies. The researchers confirmed atomically precise binding using high-resolution molecular imaging, then strengthened the designs so the antibodies latch on much more tightly.

Antibodies dominate modern therapeutics, with more than 160 products on the market and a projected value of US$445 billion in five years. They protect the body by locking onto a precise spot, called an epitope, on a virus or toxin.

That pinpoint connection determines whether an antibody blocks infection, marks a pathogen for removal, or neutralizes a harmful protein. When a drug antibody misses its intended epitope, treatment can lose power or trigger side effects by binding the wrong target.