I don’t know if this is true, but it definitely could be, as most civilizations out there are probably more advanced than ours on Earth.

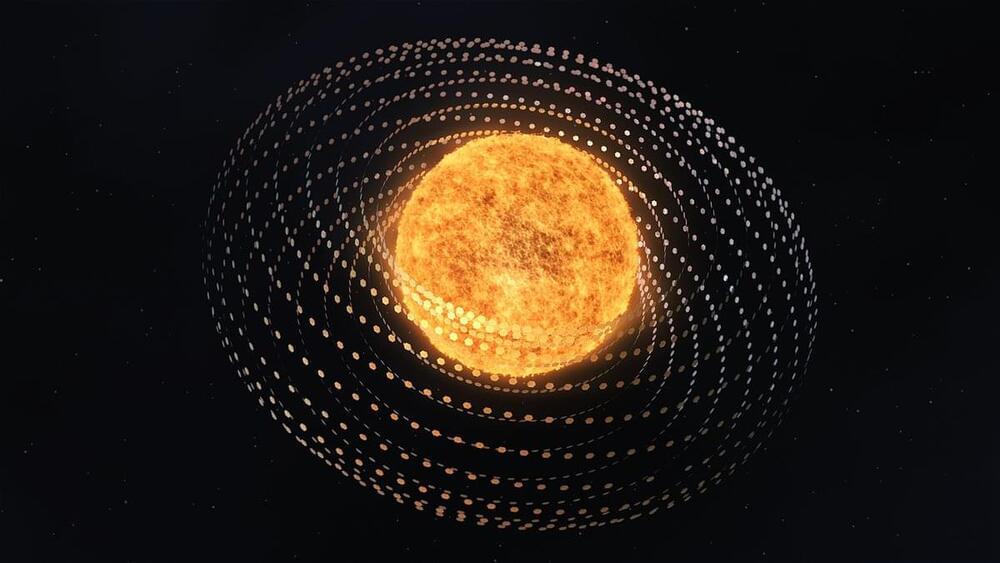

A survey of five million distant solar systems, aided by ‘neural network’ algorithms, has discovered 60 stars that appear to be surrounded by giant alien power plants.

Seven of the stars, so-called M-dwarf stars that range between 8 percent and 60 percent the size of our sun, were recorded giving off unexpectedly high infrared ‘heat signatures,’ according to the astronomers.

Natural, and better understood, outer space ‘phenomena,’ as they report in their new study, ‘cannot easily account for the observed infrared excess emission.’