They are becoming more capable, easier to program and better at explaining themselves.

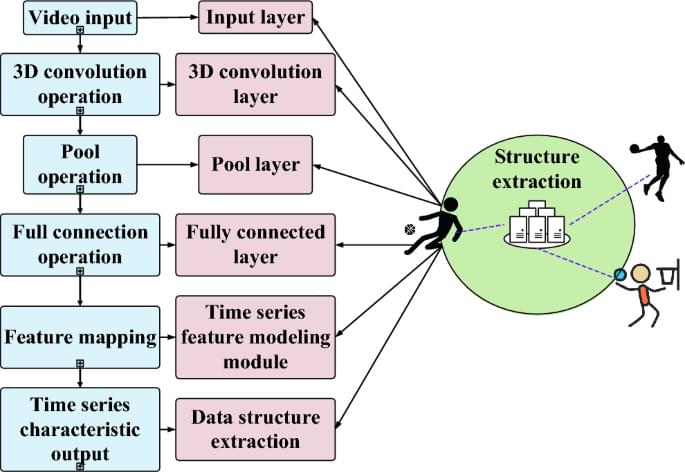

Wang, J., Zuo, L. & Cordente Martínez, C. Basketball technique action recognition using 3D convolutional neural networks. Sci Rep 14, 13156 (2024). https://doi.org/10.1038/s41598-024-63621-8

Brighter with Herbert.

China’s first AI hospital, developed by Tsinghua University, features robot doctors capable of treating 3,000 patients daily, vastly outpacing human capacity.

These AI doctors, trained in a simulated environment, can diagnose and treat 10,000 patients in days, a task humans would need two years to…

China debuts an AI hospital town featuring AI doctors caring for virtual patients to advance medical consultation and evolve doctor agents.

Despite years of work on AI systems, it wasn’t until Christmas 2022, when Craig Federighi played with Copilot, that the company truly got behind the idea.

The conventional wisdom is that Apple is far behind the rest of the technology industry in its use and deployment of AI. That has seemed nonsensical since Apple has had Siri for almost 15 years, and a head of AI since 2018.

However, a new report from the Wall Street Journal claims that, despite all these years of work on machine learning and despite having ex-Google AI chief John Giannandrea, Apple may indeed be substantially behind. Reportedly, Giannandrea and his team have struggled to fit in at Apple and to get AI implemented.

Virgin Galactic is launching the final commercial flight of its SpaceShipTwo vehicle, VSS Unity. This is the 17th flight of VSS Unity, after which the company plans to upgrade the vehicle.

The commercial crew on this mission is composed of a researcher affiliated with Axiom Space, two private Americans, and a private Italian. The Virgin Galactic crew on Unity will be Commander Nicola Pecile and pilot Jameel Janjua.

The ‘Galactic 07’ autonomous rack-mounted research payloads will include a Purdue University experiment designed to study propellant slosh in fuel tanks of maneuvering spacecraft, as well as a UC Berkeley payload testing a new type of 3D printing.

Expected Takeoff: 10:30 a.m. Eastern Time.
