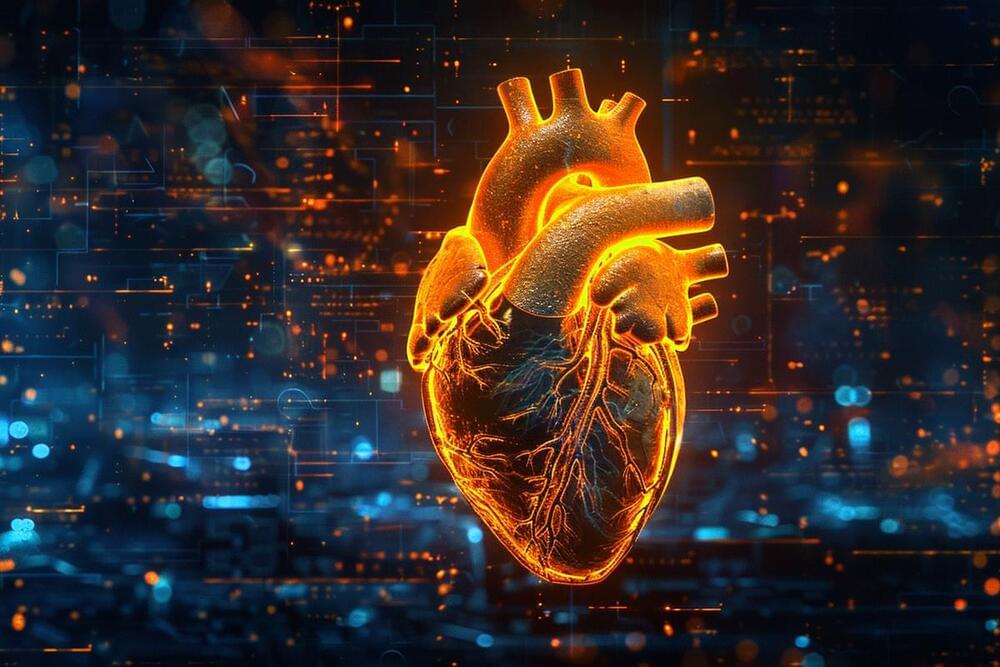

“The ORFAN study is an expanding global registry which will include long-term clinical and outcome data for 250,000 patients from around the world, and we are very pleased to publish these initial results,” said Keith Channon, MD, Professor of Cardiovascular Medicine at University of Oxford, Caristo Chief Medical Officer, and co-author of The Lancet publication.

“Coronary inflammation is a crucial piece of the puzzle in predicting heart attack risk. We are excited to discover that CaRi-Heart results performed exceptionally well in predicting patient cardiac events. This tool is well positioned to help clinicians identify high-risk patients with seemingly ‘normal’ CCTA scans.”

Ron Blankstein, MD, Professor of Medicine and Radiology at Harvard Medical School, Director of Cardiac Computed Tomography at Brigham and Women’s Hospital, and co-author of the publication, applauded The Lancet for publishing results from one of the largest studies in the field of CCTA.