The Transforming AI panel from GTC 2024 features the authors of “Attention Is All You Need,” the groundbreaking paper that introduced the transformer neural network architecture. Transformers have since come to dominate nearly every area of AI, revolutionizing the industry. The discussion covers the following topics:

▫️ The development and impact of the transformer model.
▫️ The evolution of computing and its democratization.
▫️ The future of AI technology and its potential applications.
▫️ The role of accelerated computing.
▫️ The potential for AI to revolutionize various industries.
▫️ The need for new data and learning techniques in AI development.

Feature panel host:
▫️ Jensen Huang, Founder and Chief Executive Officer, NVIDIA

Panelists:
▫️ Ashish Vaswani, Co-Founder and CEO, Essential AI
▫️ Noam Shazeer, Chief Executive Officer and Co-Founder, Character.AI
▫️ Jakob Uszkoreit, Co-Founder and Chief Executive Officer, Inceptive
▫️ Llion Jones, Co-Founder and Chief Technology Officer, Sakana AI
▫️ Aidan Gomez, Co-Founder and Chief Executive Officer, Cohere
▫️ Lukasz Kaiser, Member of Technical Staff, OpenAI
▫️ Illia Polosukhin, Co-Founder, NEAR Protocol

Explore more GTC 2024 sessions like this on NVIDIA On-Demand: https://nvda.ws/3U33qo
Read and subscribe to the NVIDIA Technical Blog: https://nvda.ws/3XHae9F

00:00 Introduction
11:00 Panelist Discussion


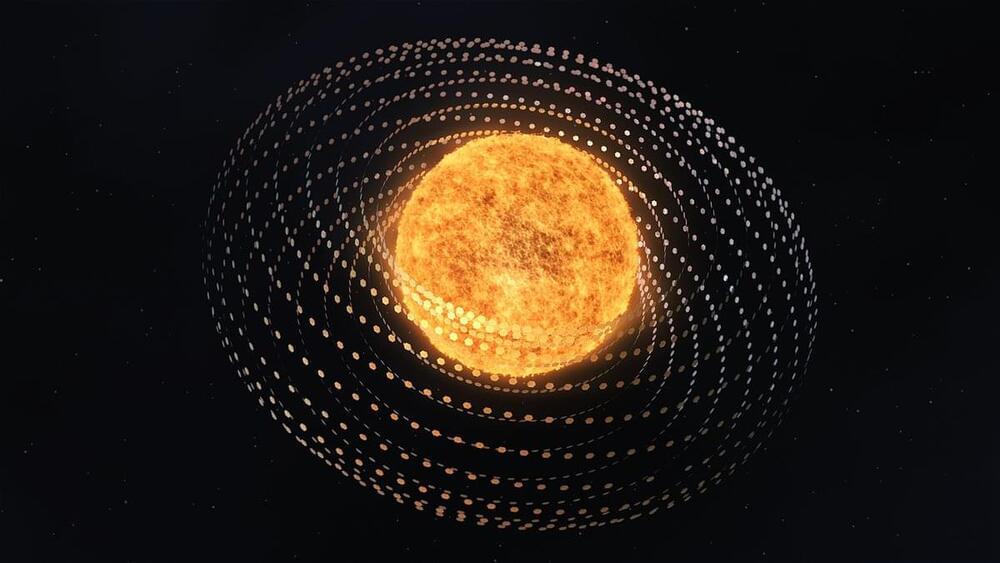

Scientists spot 60 stars appearing to show signs of alien power plants

I don’t know if this is true, but it definitely could be, as most civilizations are probably more advanced than Earth.

A survey of five million distant solar systems, aided by ‘neural network’ algorithms, has discovered 60 stars that appear to be surrounded by giant alien power plants.

Seven of the stars, so-called M-dwarf stars ranging from 8 percent to 60 percent of the size of our Sun, were recorded giving off unexpectedly high infrared ‘heat signatures,’ according to the astronomers.

Natural, better-understood astrophysical ‘phenomena,’ the astronomers report in their new study, ‘cannot easily account for the observed infrared excess emission.’

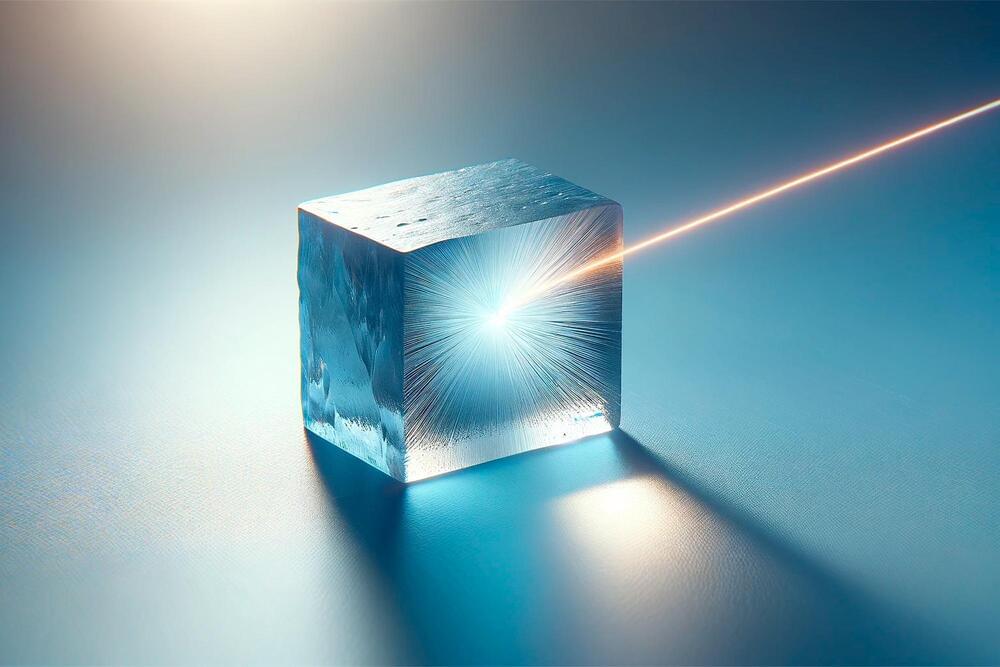

21 New Laser Materials Uncovered in Groundbreaking Global Study

Using distributed self-driving lab technology, the University of Toronto’s Acceleration Consortium rapidly identified 21 high-performing organic solid-state laser (OSL) materials, marking a significant advance in molecular optoelectronics and paving the way for future materials discovery. (Artist’s concept.) Credit: SciTechDaily.com

Organic solid-state lasers (OSLs) offer significant potential for a range of applications thanks to their flexibility, color tunability, and high efficiency. However, they are challenging to produce. Because identifying viable new materials could require more than 150,000 experiments, fully exploring this space could take many lifetimes. In fact, only 10–20 new OSL materials have been tested over the past few decades.

Researchers with the Acceleration Consortium, based at the University of Toronto, took up this challenge using self-driving lab (SDL) technology that, once set up, enabled them to synthesize and test more than 1,000 potential OSL materials and identify at least 21 top-performing OSL gain candidates within a matter of months.

NVIDIA CEO Jensen Huang Unveils AI Future: NIMs, Digital Humans & AI Factories

The AI Factory is revolutionizing the industry by creating a new commodity of extraordinary value through NIMs, which package language models, other pre-trained models, and digital humans for deployment in various applications.

Questions to inspire discussion.

What is the impact of ChatGPT on generative AI?

Combining proteomics and AI to enable ‘a new era in healthcare’

Understanding aging and age-related diseases requires analyzing a vast number of factors, including an individual’s genetics, immune system, epigenetics, environment and beyond. While AI has long been touted for its potential to shed light on these complexities of human biology and enable the next generation of healthcare, we’ve yet to see the emergence of tools that truly deliver on this promise.

Leveraging advanced plasma proteomics, US startup Alden Scientific has developed AI models capable of making the connections needed to accurately assess an individual’s state of health and risk of disease. The company’s tool assesses more than 200 different conditions, including leading causes of morbidity and mortality such as Alzheimer’s, heart disease, diabetes and stroke. Significantly, its models also enable an individual to understand how an intervention affects these risks.

With a host of top Silicon Valley investors among its early adopters, Alden is now using its platform to conduct an IRB-approved health study designed to provide a “longitudinal understanding of the interplay between environmental, biological, and medical data.”

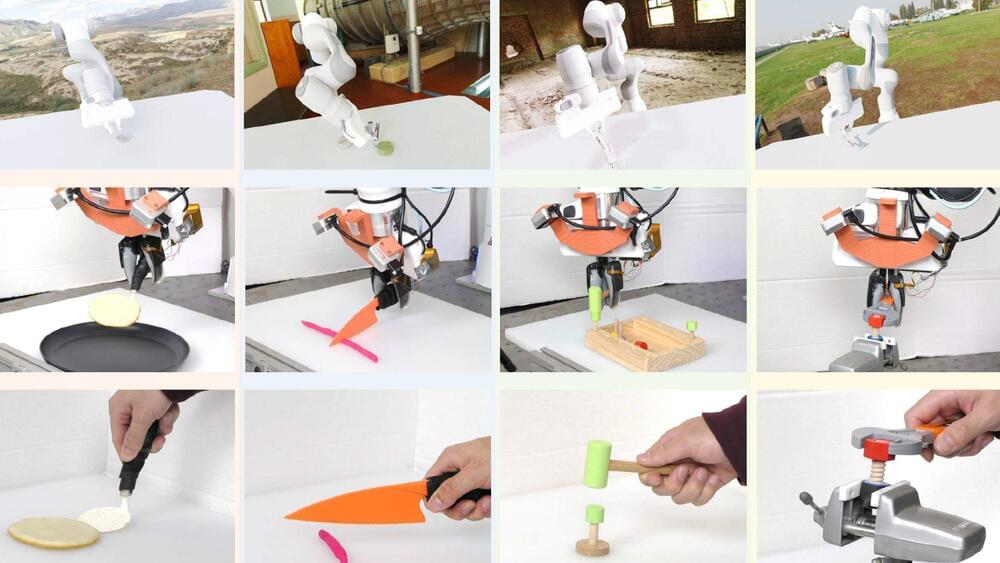

MIT: New AI power teaches robots how to hammer, screw nuts, cook toast

MIT researchers use AI to empower robots for versatile tool use in simulations and real-world settings.

MIT researchers have used artificial intelligence (AI) models to combine training data from multiple sources, helping robots learn more effectively.

The technique employs diffusion models, a type of generative AI, to integrate multiple data sources across various domains, modalities, and tasks.

The training strategy allowed a robot to execute various tool-use activities and adapt to new tasks it was not exposed to during training, both in simulations and real-world tests.

Introducing Aurora: The first large-scale AI foundation model of the atmosphere

When Storm Ciarán battered northwestern Europe in November 2023, it left a trail of destruction. The low-pressure system associated with Storm Ciarán set new records for England, marking it as an exceptionally rare meteorological event. The storm’s intensity caught many off guard, exposing the limitations of current weather-prediction models and highlighting the need for more accurate forecasting in the face of climate change. As communities grappled with the aftermath, the urgent question arose: How can we better anticipate and prepare for such extreme weather events?

A recent study by Charlton-Perez et al. (2024) underscored the challenges faced by even the most advanced AI weather-prediction models in capturing the rapid intensification and peak wind speeds of Storm Ciarán. To help address those challenges, a team of Microsoft researchers developed Aurora, a cutting-edge AI foundation model that can extract valuable insights from vast amounts of atmospheric data. Aurora presents a new approach to weather forecasting that could transform our ability to predict and mitigate the impacts of extreme events—including being able to anticipate the dramatic escalation of an event like Storm Ciarán.

Aurora’s effectiveness lies in its training on more than a million hours of diverse weather and climate simulations, which enables it to develop a comprehensive understanding of atmospheric dynamics. This allows the model to excel at a wide range of prediction tasks, even in data-sparse regions or extreme weather scenarios. By operating at a high spatial resolution of 0.1° (roughly 11 km at the equator), Aurora captures intricate details of atmospheric processes, providing more accurate operational forecasts than ever before—and at a fraction of the computational cost of traditional numerical weather-prediction systems. We estimate that the computational speed-up that Aurora can bring over the state-of-the-art numerical forecasting system Integrated Forecasting System (IFS) is ~5,000x.
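The “0.1° (roughly 11 km at the equator)” figure can be checked with a few lines of arithmetic. The sketch below is purely illustrative and is not part of Aurora: it assumes a spherical Earth with the WGS-84 equatorial radius, and the function names are hypothetical. It converts an angular grid spacing into kilometers and shows why the figure is quoted “at the equator”: on a sphere, the east-west width of a grid cell shrinks with the cosine of latitude.

```python
import math

# Assumption: spherical Earth using the WGS-84 equatorial radius, in km.
EQUATORIAL_RADIUS_KM = 6378.137


def degrees_to_km_at_equator(degrees: float) -> float:
    """Arc length (km) along the equator spanned by `degrees` of longitude."""
    circumference_km = 2 * math.pi * EQUATORIAL_RADIUS_KM  # about 40,075 km
    return circumference_km * degrees / 360.0


def longitude_spacing_km(degrees: float, latitude_deg: float) -> float:
    """East-west width (km) of a `degrees`-wide grid cell at a given latitude.

    Meridians converge toward the poles, so on a sphere the width scales
    with the cosine of the latitude.
    """
    return degrees_to_km_at_equator(degrees) * math.cos(math.radians(latitude_deg))


if __name__ == "__main__":
    print(f"0.1 deg at the equator: {degrees_to_km_at_equator(0.1):.1f} km")
    print(f"0.1 deg at 60 deg N:    {longitude_spacing_km(0.1, 60):.1f} km")
```

Running it prints about 11.1 km at the equator and about 5.6 km at 60°N, so a 0.1° grid is finest in the east-west direction at high latitudes. The spherical model is a simplification; an ellipsoidal model would shift these values only slightly.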

The Future of Relationships: Could Women Be Replaced by AI Sex Robots?

Rapid advances in technology, particularly the development of AI-capable sex robots, could lead to real-life partners being replaced and could harm meaningful romantic relationships.

Questions to inspire discussion.

How long have sex robots been around?

Sex robots have been around for about 10 years. Despite their initially hilariously bad appearance, there is a market for them, and people are buying them.