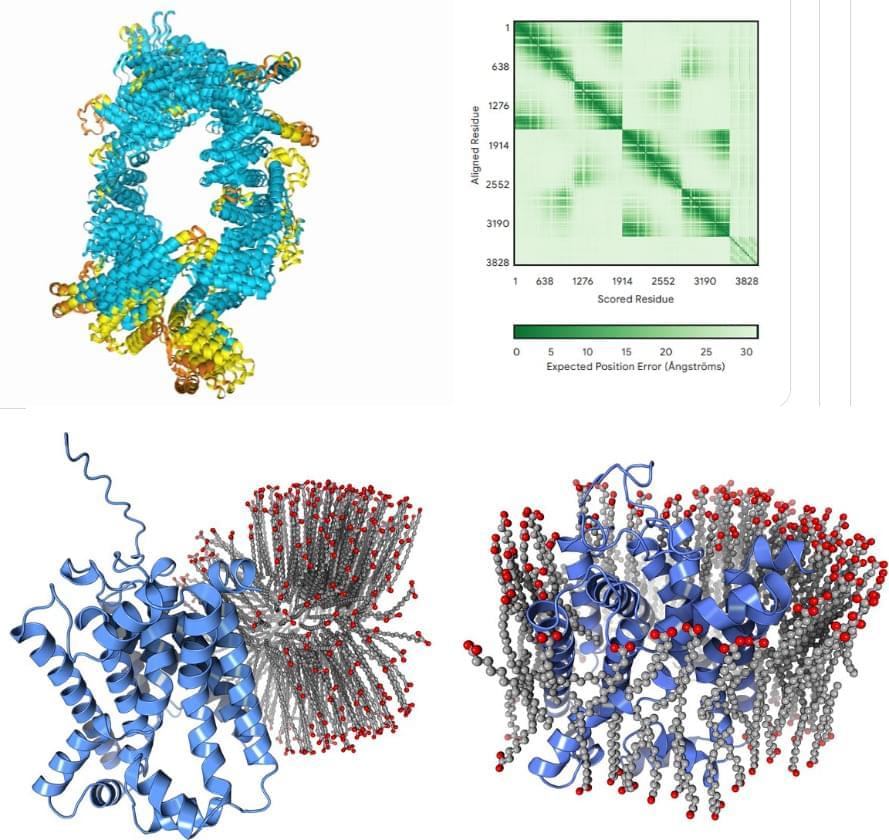

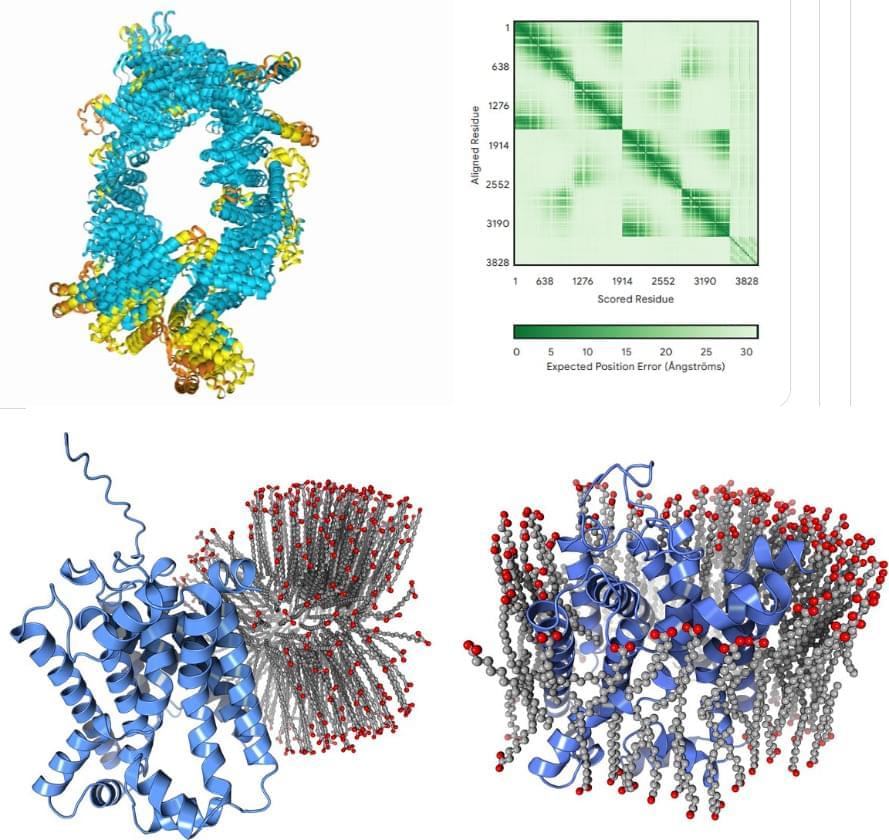

Observations three weeks after DeepMind released its most advanced model to date for biomolecular structure prediction.

Basically confirmed what's been said here. AI will take over filmmaking by 2029/2030.

Actor and entrepreneur Ashton Kutcher lauded OpenAI’s generative AI video tool Sora at a recent event. “I’ve been playing around with Sora, this latest thing that OpenAI launched that generates video,” Kutcher said. “I have a beta version of it, and it’s pretty amazing. Like, it’s pretty good.”

He explained that users specify the shot, scene, or trailer they desire. “It invents that thing. You haven’t shot any footage, the people in it don’t exist,” he said.

According to Kutcher, Sora can create realistic 10–15 second videos, though it still makes minor mistakes and lacks a full understanding of physics. However, he said the technology has come a long way in just one year, with significant leaps in quality and realism.

Chinese state-owned automaker Dongfeng Motor is partnering with robotics firm UBTech to introduce the latter’s humanoid robot into its manufacturing process.

The industrial version of UBTech’s Walker S humanoid robot will be used on Dongfeng Motor’s production line to carry out various manufacturing duties.

According to reports, the robot's duties will include safety belt inspection, door lock testing, body quality checks, oil filling, and label application. The robot will integrate with traditional automated machinery to handle complex scenarios in unmanned manufacturing.

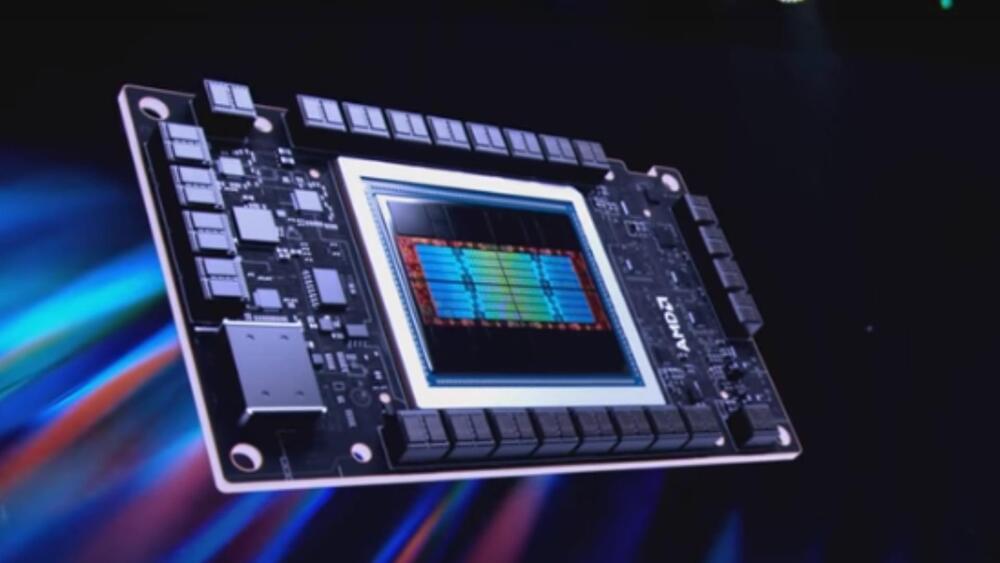

Advanced Micro Devices (AMD) has introduced its newest artificial intelligence (AI) processor, the MI325X. This advanced version of the MI300 series is expected to be available in the fourth quarter.

At the Computex technology trade show in Taipei, AMD also announced that it will develop AI chips over the next two years to challenge Nvidia’s dominance.

Nvidia currently holds almost 80% of the AI semiconductor market.

Nvidia CEO Jensen Huang has unveiled plans to build a next-generation AI platform called Rubin — named after astronomer Vera Rubin.

Huang made the announcement in an address ahead of the Computex technology convention in Taipei, which starts on June 4.

According to the company’s blog, Huang spoke to nearly 6,500 industry executives, reporters, entrepreneurs, gamers, inventors, and AI fans who had congregated at the glass-domed National Taiwan University Sports Center in Taipei.