We’ve reached a new milestone in the uncanny valley, folks: AIs are now Rickrolling humans.

In a now-viral post on X-formerly-Twitter, Flo Crivello, the CEO of the AI assistant firm Lindy, explained how this bizarre memetic situation featuring Rick Astley’s 1987 hit “Never Gonna Give You Up” came to pass.

Known as “Lindys,” the company’s AI assistants are intended to help customers with various tasks. Part of a Lindy’s job is to teach clients how to use the platform, and it was during this task that the AI helper provided a link to a video tutorial that wasn’t supposed to exist.

“If pursued, we might see by the end of the decade advances in AI as drastic as the difference between the rudimentary text generation of GPT-2 in 2019 and the sophisticated problem-solving abilities of GPT-4 in 2023,” Epoch wrote in a recent research report assessing how likely this scenario is.

But modern AI already sucks in a significant amount of power, tens of thousands of advanced chips, and trillions of online examples. Meanwhile, the industry has endured chip shortages, and studies suggest it may run out of quality training data. Assuming companies continue to invest in AI scaling: Is growth at this rate even technically possible?

In its report, Epoch looked at four of the biggest constraints to AI scaling: power, chips, data, and latency. TL;DR: Maintaining growth is technically possible, but not certain. Here’s why.

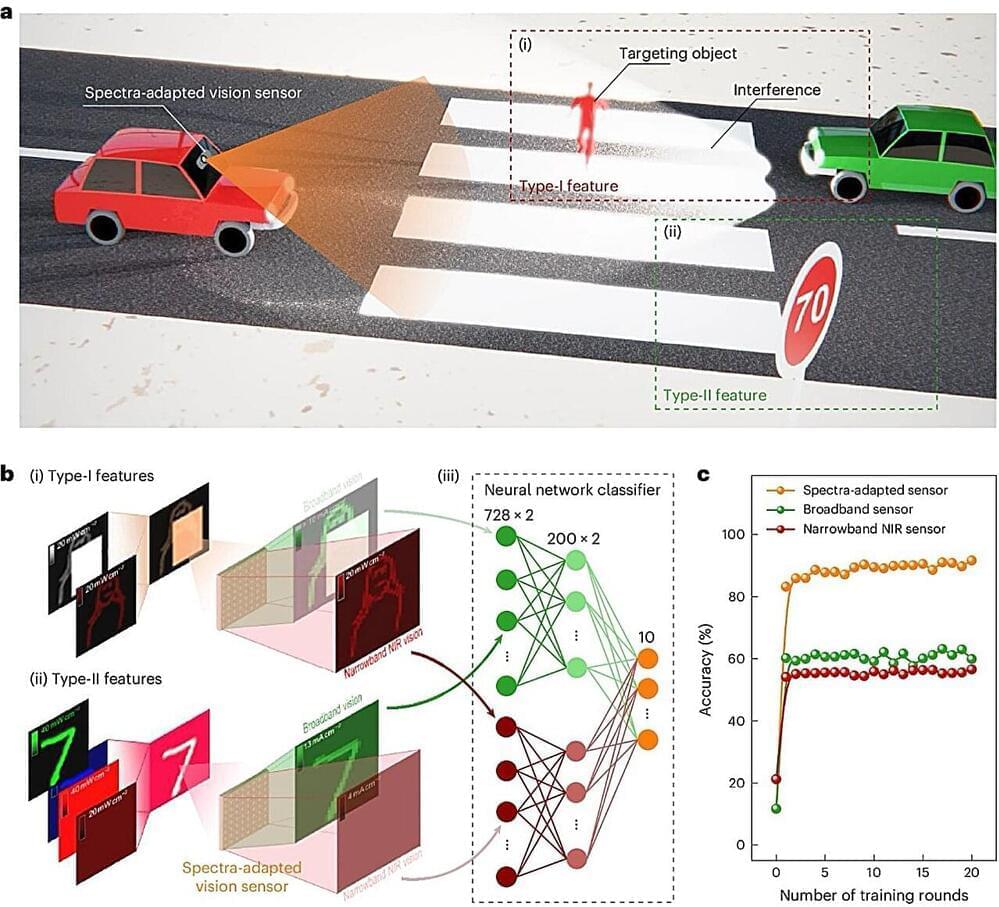

The ability to detect objects in settings with unfavorable lighting, for example at night, in shadowed locations or in foggy conditions, could greatly improve the reliability of autonomous vehicles and mobile robotic systems. Most widely employed computer vision methods, however, have been found to perform poorly under such lighting.

Researchers at the Hong Kong Polytechnic University recently introduced a new bio-inspired vision sensor that can adapt to the spectral features of the environments it captures, thus successfully detecting objects in a wider range of lighting conditions. This newly developed sensor, introduced in a paper published in Nature Electronics, is based on an array of photodiodes arranged back-to-back.

“In a previous paper in Nature Electronics, we presented a simple in-sensor light intensity adaptation approach to improve the recognition accuracy of machine vision systems,” Bangsen Ouyang, co-author of the paper, told Tech Xplore.

Image: Prof Thomas Hartung.

Over just a few decades, computers shrank from massive installations to slick devices that fit in our pockets. But this dizzying trend might end soon, because we simply can’t produce small enough components. To keep driving computing forward, scientists are looking for alternative approaches. An article published in Frontiers in Science presents a revolutionary strategy called organoid intelligence.

The standard model of the universe relies on just six numbers. Using a new approach powered by artificial intelligence, researchers at the Flatiron Institute and their colleagues extracted information hidden in the distribution of galaxies to estimate the values of five of these so-called cosmological parameters with incredible precision.