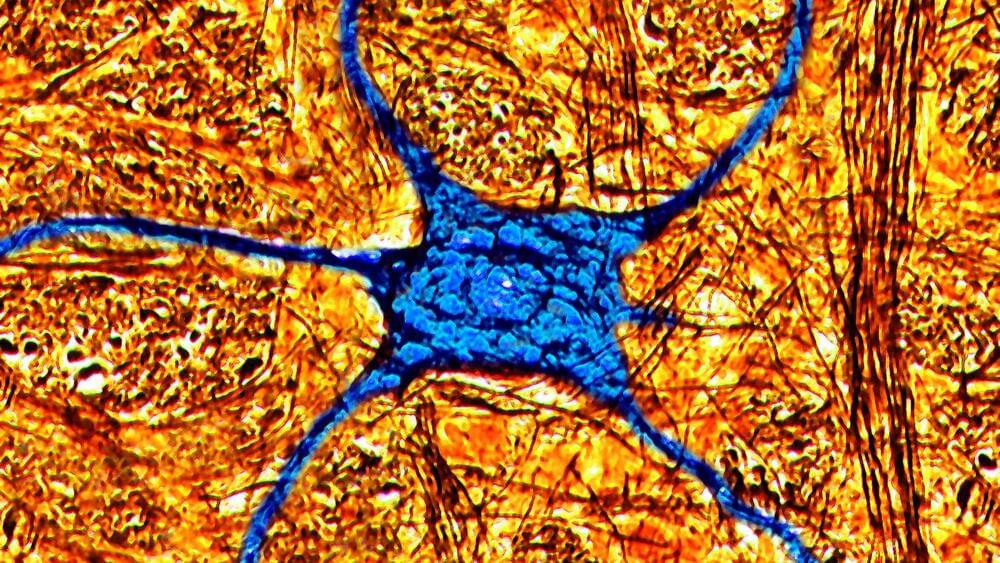

As artificial intelligence (AI) becomes increasingly ubiquitous in business and governance, its substantial environmental impact, from significant increases in energy and water usage to heightened carbon emissions, cannot be ignored. By 2030, AI’s power demand is expected to rise by 160%. However, adopting more sustainable practices, such as using foundation models, optimizing data processing locations, investing in energy-efficient processors, and leveraging open-source collaborations, can help mitigate these effects. These strategies not only reduce AI’s environmental footprint but also improve operational efficiency and cost-effectiveness, helping companies balance innovation with sustainability.

How Companies Can Mitigate AI’s Growing Environmental Footprint

By Christina Shim

Practical steps for reducing AI’s surging demand for water and energy.