From AI-generated video advancements to new AI-powered AR glasses, here are the five biggest announcements.


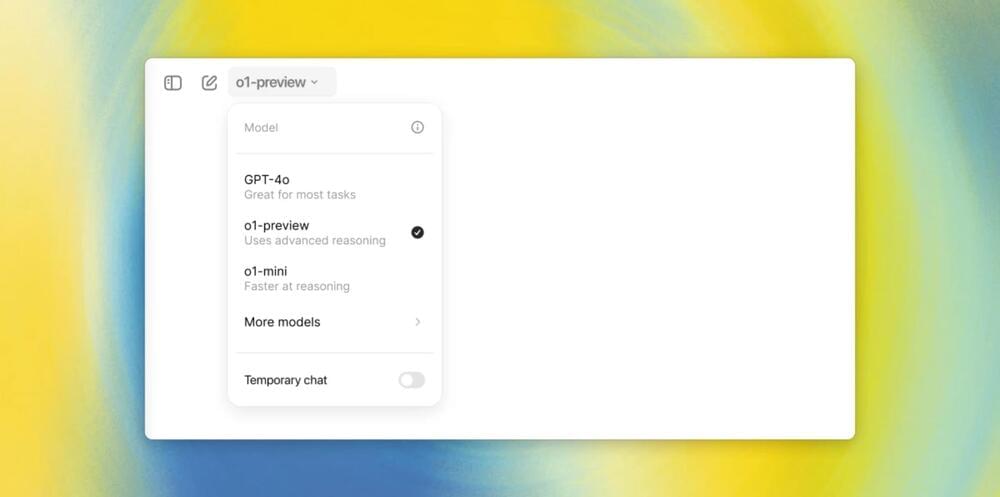

OpenAI releases new o1 AI, its first model capable of reasoning

To expand its GPT capabilities, OpenAI released its long-anticipated o1 model, along with a smaller, cheaper o1-mini version. According to the company, the models, previously known as Strawberry, can “reason through complex tasks and solve harder problems than previous models in science, coding, and math.”

Although the release is still a preview, OpenAI says it is the first model in this series to reach ChatGPT and its API, with more to come.

The company says these models have been trained to “spend more time thinking through problems before they respond, much like a person would. Through training, they learn to refine their thinking process, try different strategies, and recognize their mistakes.”

Only $20K! Elon Musk CONFIRMS All Tasks Tesla Bot 2.0 Optimus Gen 3 Can Do! Next Gen Homemaker


Is AI Now Thinking More Like Humans?

In today’s fast-paced world, speed is celebrated. Instant messaging outpaces thoughtful letters, and rapid-fire tweets replace reflective essays. We’ve become conditioned to believe that faster is better. But what if the next great leap in artificial intelligence challenges that notion? What if slowing down is the key to making AI think more like us—and in doing so, accelerating progress?

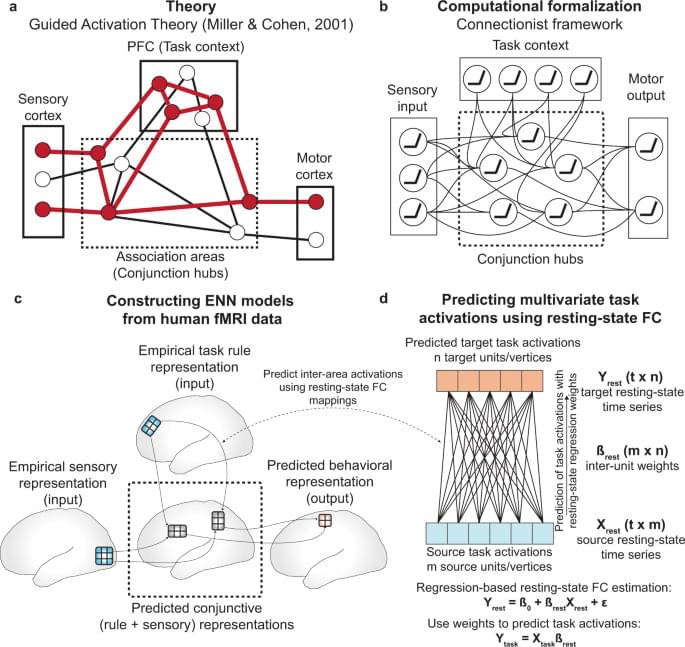

OpenAI’s new o1 model, which relies on a hidden chain of thought, offers an interesting glimpse into this future. Unlike earlier models that produce an answer directly, o1 takes a more human-like approach: before responding, it generates an internal chain of reasoning that the user does not see, mimicking the kind of reflective thought humans use when tackling complex problems. This evolution marks a shift in how AI operates, and it may also offer a new lens on how our own brains work.

This move toward AI that thinks more like humans is more than a technical accomplishment; it also connects to fascinating ideas about how we experience reality. In his book The User Illusion, Tor Nørretranders argues that only a tiny fraction of the sensory input we receive ever reaches conscious awareness. By his account, our brains process vast amounts of information, up to a million times more than we are consciously aware of. Our minds act as functional filters, allowing only the most relevant information to “bubble up” into conscious experience.