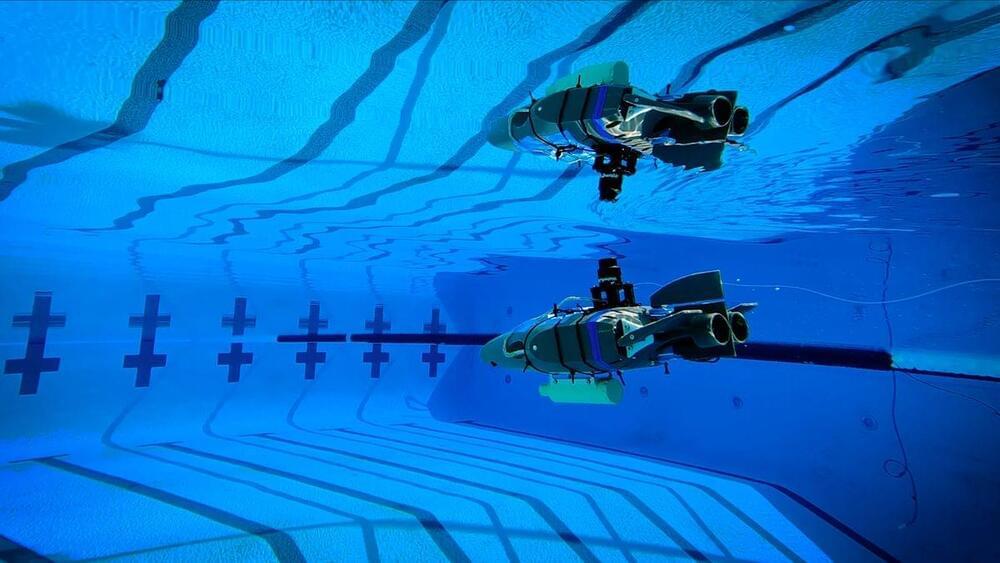

Designed to one day search for evidence of life in the briny ocean beneath the icy shell of Jupiter’s moon Europa, these robots could play a key role in detecting chemical and temperature signals that might indicate alien life. That is the view of the scientists at NASA’s Jet Propulsion Laboratory (JPL) who designed and tested them.

“People might ask, why is NASA developing an underwater robot for space exploration?” said Ethan Schaler, the project’s principal investigator at JPL. “It’s because there are places we want to go in the solar system to look for life, and we think life needs water.”