“The challenge is applying agentic AI in the enterprise setting or in innovation-driven industries, like materials science R&D or pharma, where there is higher uncertainty and risk,” said Connell. “These more complex environments require a very nuanced understanding by the agent in order to make trustworthy, reliable decisions.”

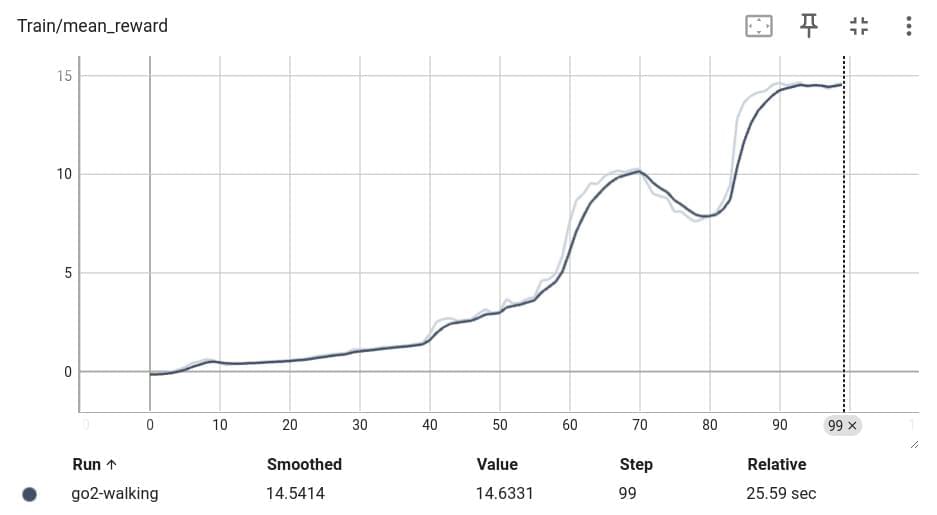

As with analytical and generative AI, data, particularly real-time data, is at the core of agentic AI success. It’s important “to have an understanding of how agentic AI will be used and the data that is powering the agent, as well as a system for testing,” said Connell. “To build AI agents, you need clean and, for some applications, labeled data that accurately represents the problem domain, along with sufficient volume to train and validate your models.”