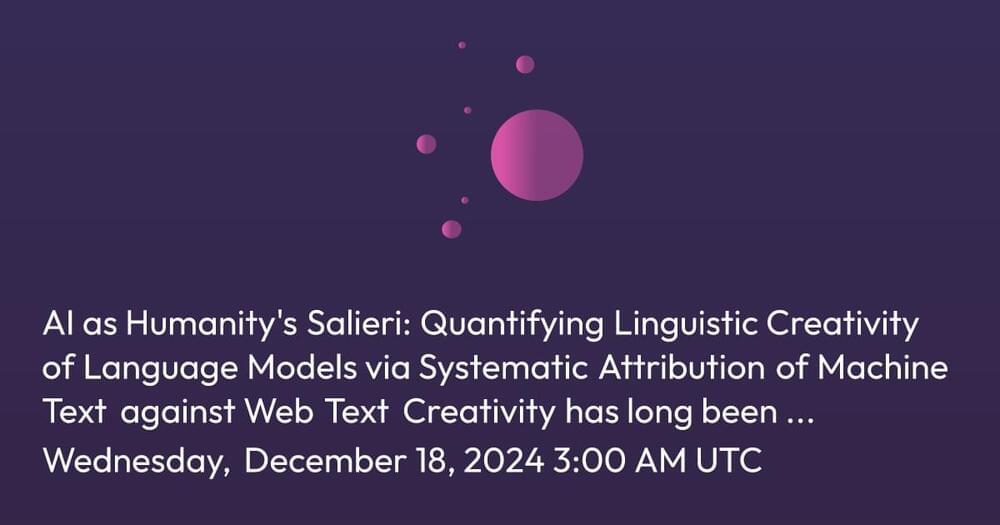

Today I have the pleasure of speaking with a visionary thinker and innovator who’s making waves in artificial intelligence and the future of human health. Dr. Ben Goertzel is the founder and CEO of SingularityNET, a decentralized AI platform that aims to democratize access to advanced artificial intelligence. He’s also the mind behind OpenCog, an open-source project dedicated to developing artificial general intelligence, and he’s a key figure at Hanson Robotics, where he helped create the well-known humanoid robot Sophia.

Beyond AI, Dr. Goertzel is deeply involved in exploring how technology can extend human longevity, contributing to initiatives like Rejuve, which aims to leverage AI and blockchain to advance life-extension research. With a career spanning cognitive science, AI development, and innovative health tech, Dr. Goertzel is shaping the future in ways that will affect all of us. Please join me in welcoming Dr. Ben Goertzel!

PRODUCTION CREDITS

⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺⎺

Host, Writer — @emmettshort.

Executive Producer — Keith Comito