In 2014, a team of Googlers (many of whom were former educators) launched Google Classroom as a “mission control” for teachers. With a central place to bring Google’s collaboration tools together, and a constant feedback loop with schools through the Google for Education Pilot Program, Classroom has evolved from a simple assignment distribution tool to a destination for everything a school needs to deliver real learning impact.


Frontiers: The integration of ChatGPT, an advanced AI-powered chatbot, into educational settings has caused mixed reactions among educators

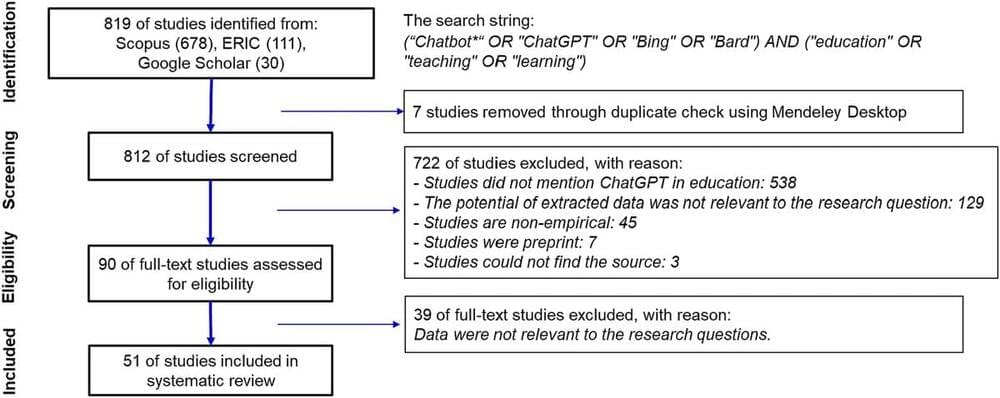

Introduction: The integration of ChatGPT, an advanced AI-powered chatbot, into educational settings has caused mixed reactions among educators. Therefore, we conducted a systematic review to explore the strengths and weaknesses of using ChatGPT and to discuss the opportunities and threats of using ChatGPT in teaching and learning.

Methods: Following the PRISMA flowchart guidelines, 51 articles were selected from 819 studies collected from the Scopus, ERIC, and Google Scholar databases for the period 2022–2023.

Results: The synthesis of data extracted from the 51 included articles revealed 32 topics: 13 strengths, 10 weaknesses, 5 opportunities, and 4 threats of using ChatGPT in teaching and learning. We used Biggs’s Presage-Process-Product (3P) model of teaching and learning to categorize the topics into the three components of the 3P model.

How to Prompt ChatGPT to Teach You Anything

ChatGPT, Gemini, and Apple Intelligence can all be great teaching tools for teaching yourself nearly anything. Even college coursework can be worked through quickly with an AI like GPT-4, because it can do more advanced processing than even humans can in almost any subject. One way to think of it is that GPT-4 is like having a Neuralink without needing a physical device implanted in the brain. In essence, AI can augment us to become godlike, simply because we can farm out hard mental labor to AI instead of having to use our own brains.

I created a prompt chain that enables you to learn any complex concept from ChatGPT.
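The post does not reproduce the chain itself, so the following is only a hypothetical illustration of what a prompt chain for learning a concept might look like: a fixed, ordered sequence of prompt templates, each filled in with the concept, so that every step builds on the answer to the previous one. The step wording and the `build_prompt_chain` helper are assumptions, not the author's prompts, and no chatbot API is called.

```python
from string import Template

# Hypothetical step templates; NOT the author's actual prompt chain.
LEARNING_STEPS = [
    Template("Explain $concept to me as if I were a complete beginner."),
    Template("List the key terms I need to understand $concept, with a one-line definition each."),
    Template("Give me a concrete, worked example that uses $concept."),
    Template("Ask me three questions to check my understanding of $concept, then wait for my answers."),
    Template("Based on my answers, point out my misconceptions about $concept and suggest what to study next."),
]


def build_prompt_chain(concept: str) -> list[str]:
    """Fill each step template with the concept, preserving the step order."""
    cleaned = concept.strip()
    if not cleaned:
        raise ValueError("concept must be a non-empty string")
    return [step.substitute(concept=cleaned) for step in LEARNING_STEPS]


if __name__ == "__main__":
    for number, prompt in enumerate(build_prompt_chain("backpropagation"), start=1):
        print(f"Step {number}: {prompt}")
```

In this sketch, each prompt would be pasted into the same chat session in order, so the model keeps its earlier explanations as context for the later steps.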

Neural networks unlock potential of high-entropy carbonitrides in extreme environments

The melting point is one of the most important material properties, and it informs the potential applications of materials in various fields. Experimental measurement of the melting point is complex and expensive, but computational methods could help achieve an equally accurate result more quickly and easily.

A research group from Skoltech conducted a study to calculate the maximum melting point of a high-entropy carbonitride, a compound of titanium, zirconium, tantalum, hafnium, and niobium with carbon and nitrogen.

The results, published in the journal Scientific Reports, indicate that high-entropy carbonitrides are promising materials for protective coatings on equipment operating under extreme conditions such as high temperature, thermal shock, and chemical corrosion.

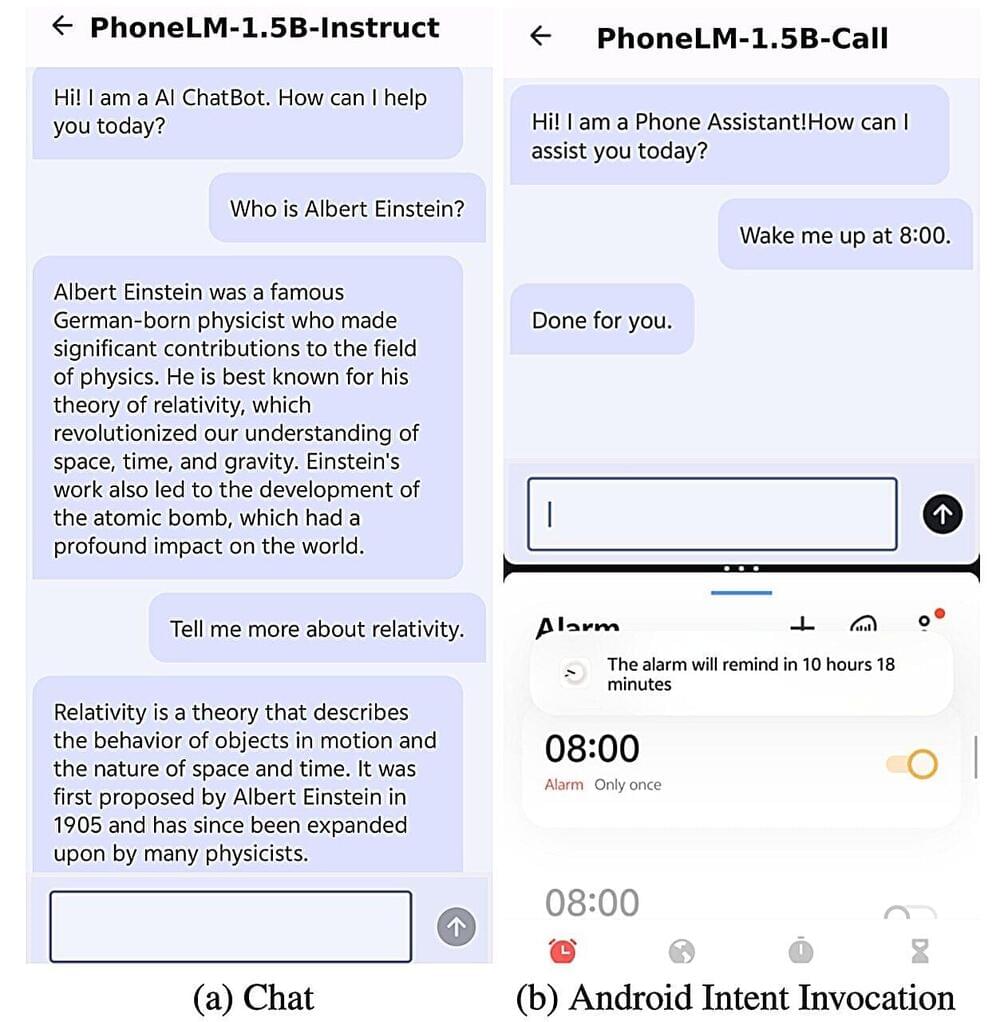

Shrinking AI for Personal Devices: An efficient small language model that could perform better on smartphones

Large language models (LLMs), such as OpenAI’s renowned conversational platform ChatGPT, have recently become increasingly widespread, with many internet users relying on them to find information quickly and produce texts for various purposes. Yet most of these models perform significantly better on computers than on smartphones, due to the high computational demands associated with their size and data processing capabilities.

To tackle this challenge, computer scientists have also been developing small language models (SLMs), which have a similar architecture but are smaller. These models could be easier to deploy directly on smartphones, allowing users to consult ChatGPT-like platforms more easily in their daily lives.
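A rough way to see why size matters on a phone is to estimate how much memory the model weights alone occupy: the number of parameters times the bytes stored per parameter. The sketch below makes that estimate; the model sizes and precisions in the usage are illustrative assumptions, not figures for any particular model, and the estimate ignores activations, the attention cache, and runtime overhead.

```python
def weight_memory_gib(num_params: int, bits_per_param: int) -> float:
    """Approximate memory needed for model weights only, in GiB (2**30 bytes)."""
    if num_params <= 0 or bits_per_param <= 0:
        raise ValueError("num_params and bits_per_param must be positive")
    total_bytes = num_params * bits_per_param / 8  # 8 bits per byte
    return total_bytes / 2**30


if __name__ == "__main__":
    # Illustrative sizes only: a "large" 70B-parameter model vs. a "small" 1B-parameter model,
    # each stored at 16-bit and at 4-bit (quantized) precision.
    for name, params in [("70B model", 70_000_000_000), ("1B model", 1_000_000_000)]:
        for bits in (16, 4):
            print(f"{name} at {bits}-bit: {weight_memory_gib(params, bits):.1f} GiB")
```

Under these assumptions, a 70-billion-parameter model stored at 16 bits needs roughly 130 GiB just for its weights, far more than a typical smartphone has, while a 1-billion-parameter model at 4 bits needs about 0.5 GiB.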

Researchers at Beijing University of Posts and Telecommunications (BUPT) recently introduced PhoneLM, a new SLM architecture for smartphones that could be both efficient and high-performing. Their proposed architecture, presented in a paper published on the arXiv preprint server, was designed to attain near-optimal runtime efficiency before it undergoes pre-training on text data.
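The article does not describe PhoneLM's search procedure in detail. As a loose, hypothetical illustration of the general idea of choosing an architecture for runtime efficiency before any pre-training happens, the sketch below enumerates a few decoder configurations, keeps those close to a target parameter budget, and picks the one with the lowest latency under a toy cost model. The parameter formula is a common rough approximation for a decoder-only transformer, and `toy_latency_ms` with its constants is a made-up stand-in for measuring latency on a real phone; neither is taken from the PhoneLM paper.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Config:
    layers: int
    hidden: int
    ffn_mult: int  # feed-forward width as a multiple of the hidden size
    vocab: int = 32_000  # hypothetical vocabulary size


def approx_params(cfg: Config) -> int:
    """Rough estimate: 4*h^2 (Q, K, V, O projections) + 2*h*ffn (MLP) per layer,
    plus one tied embedding matrix. Ignores biases and normalization weights."""
    per_layer = 4 * cfg.hidden**2 + 2 * cfg.hidden * (cfg.ffn_mult * cfg.hidden)
    return cfg.layers * per_layer + cfg.vocab * cfg.hidden


def toy_latency_ms(cfg: Config) -> float:
    """Hypothetical cost model: fixed overhead per layer plus a cost proportional to size.
    A real search would replace this with measurements on the target device."""
    per_layer_overhead_ms = 0.8  # hypothetical per-layer launch/synchronization cost
    ms_per_million_params = 0.02  # hypothetical compute/memory-bandwidth cost
    return cfg.layers * per_layer_overhead_ms + approx_params(cfg) / 1e6 * ms_per_million_params


def pick_config(candidates: list[Config], target_params: int, tolerance: float = 0.1) -> Config:
    """Among candidates within `tolerance` of the parameter target, return the fastest one."""
    feasible = [c for c in candidates if abs(approx_params(c) - target_params) <= tolerance * target_params]
    if not feasible:
        raise ValueError("no candidate is close enough to the parameter target")
    return min(feasible, key=toy_latency_ms)


if __name__ == "__main__":
    grid = [Config(layers, hidden, mult) for layers, hidden, mult in product((12, 18, 24), (1024, 1536, 2048), (3, 4))]
    best = pick_config(grid, target_params=550_000_000)
    print(best, f"{approx_params(best) / 1e6:.0f}M params, {toy_latency_ms(best):.1f} ms (toy model)")
```

Because the search only needs parameter counts and latency estimates, it can run before any training data is touched; with this particular toy cost model, the per-layer overhead tends to favor shallower, wider configurations at a fixed size.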

10 Things They’re NOT Telling You About The Coming AI (Must Watch!)

Have you ever wondered what hidden truths are being kept from you about the coming AI revolution? The rapid advancement of artificial intelligence is creating waves of change, but are there crucial aspects that the mainstream isn’t revealing? In this eye-opening video, we dive deep into the 10 things they’re not telling you about the future of AI. From secretive developments to potential societal impacts, this video will reveal the truth behind the hype and what you need to know to stay ahead.

We’ll explore the unspoken implications of AI advancements and how they might affect various industries and everyday life. Discover the hidden agendas and overlooked details that could shape the future of technology and its integration into our daily routines. Whether you’re an AI enthusiast, a tech professional, or simply curious about the future, this video provides essential insights you won’t want to miss.

The coming AI era is not just about technological advancements but also about the underlying narratives that influence public perception and policy decisions. Stay informed and prepared by understanding the full picture of what AI could mean for you and the world at large.

What are the hidden truths about the future of AI? How will the coming AI revolution affect us? What are the secrets behind artificial intelligence advancements? What are the unspoken implications of AI technology? This video will address all these questions. Make sure to watch until the end to get the complete story.


OpenAI announces first partnership with a university

OpenAI on Thursday announced its first partnership with a higher education institution. Starting in February, Arizona State University will have full access to ChatGPT Enterprise and plans to use it for coursework, tutoring, research and more.

The partnership has been in the works for at least six months, since ASU Chief Information Officer Lev Gonick first visited OpenAI’s headquarters, Gonick told CNBC in an interview. Before that visit, the university’s faculty and staff had already been using ChatGPT and other artificial intelligence tools.

ChatGPT Enterprise, which debuted in August, is ChatGPT’s business tier and includes access to GPT-4 with no usage caps, performance that’s up to two times faster than previous versions, and API credits.