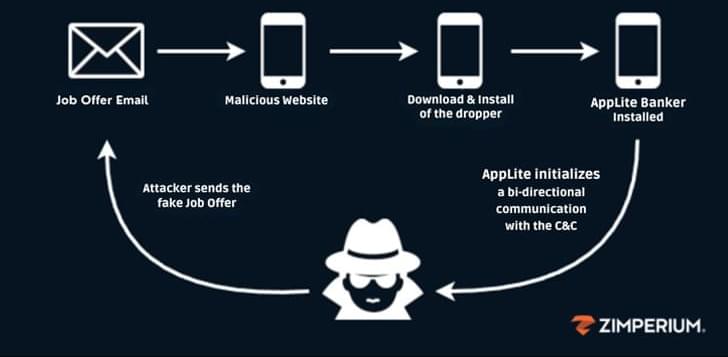

Updated Antidot banking trojan targets Android users via fake job offers, stealing credentials and taking remote control.

Researchers at Lawrence Livermore National Laboratory (LLNL) have developed a new approach that combines generative artificial intelligence (AI) and first-principles simulations to predict three-dimensional (3D) atomic structures of highly complex materials.

This research highlights LLNL’s efforts in advancing machine learning for materials science research and supporting the Lab’s mission to develop innovative technological solutions for energy and sustainability.

The study, recently published in Machine Learning: Science and Technology, represents a potential leap forward in the application of AI for materials characterization and inverse design.

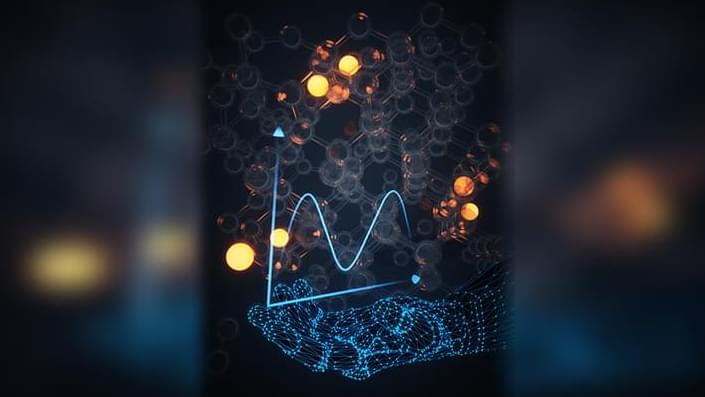

Initially, a variant of the LSTM known as AWD-LSTM was pre-trained (unsupervised pre-training) on a language-modelling task using Wikipedia articles. In the next step, the output layer was turned into a classifier and fine-tuned on various datasets, such as IMDb and Yelp reviews. When the model was tested on unseen data, it achieved state-of-the-art results. The paper further claimed that fine-tuning this pre-trained model (transfer learning) on only 100 labelled rows could match the results of a model trained from scratch on 10,000 rows. The one thing to keep in mind is that they did not use a Transformer in their architecture. This was because the two ideas (Transformers and transfer learning) were researched in parallel, so researchers on each side had no idea what the other was working on. The Transformer paper came out in 2017 and the ULMFiT paper (transfer learning) in early 2018.
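The two-step recipe above (pre-train a language model, then swap its output layer for a classifier and fine-tune) can be sketched in plain Python. This is a toy under loud assumptions. The hand-made `PRETRAINED_EMBEDDINGS` table stands in for what AWD-LSTM would learn from Wikipedia. The "encoder" is a simple average of word vectors rather than an LSTM. Only the new logistic-regression head is trained. ULMFiT itself fine-tunes the whole network (with techniques such as discriminative learning rates and gradual unfreezing), so treat this as an illustration of the head-swap idea, not the paper's method.

```python
import math

# Hypothetical stand-in for pretrained knowledge: in ULMFiT this would come from
# language-model pretraining on Wikipedia; here the vectors are hand-made.
PRETRAINED_EMBEDDINGS = {
    "great": [1.0, 0.2],
    "love": [0.9, 0.1],
    "awful": [-1.0, 0.3],
    "boring": [-0.8, 0.1],
    "movie": [0.0, 1.0],
    "plot": [0.0, 0.8],
}


def encode(text):
    """Frozen 'encoder': average the pretrained vectors of the known words."""
    vecs = [PRETRAINED_EMBEDDINGS[w] for w in text.lower().split() if w in PRETRAINED_EMBEDDINGS]
    if not vecs:
        return [0.0, 0.0]
    return [sum(v[i] for v in vecs) / len(vecs) for i in range(len(vecs[0]))]


class ClassifierHead:
    """New output layer: logistic regression replacing the LM's next-word head."""

    def __init__(self, dim):
        self.w = [0.0] * dim
        self.b = 0.0

    def predict_proba(self, features):
        z = sum(wi * xi for wi, xi in zip(self.w, features)) + self.b
        return 1.0 / (1.0 + math.exp(-z))

    def fit(self, examples, epochs=200, lr=0.5):
        # Gradient descent on log-loss; the encoder's vectors are never updated.
        for _ in range(epochs):
            for text, label in examples:
                x = encode(text)
                err = self.predict_proba(x) - label
                self.w = [wi - lr * err * xi for wi, xi in zip(self.w, x)]
                self.b -= lr * err
        return self


# A tiny labelled set plays the role of the small fine-tuning dataset.
labeled = [("great movie", 1), ("love the plot", 1), ("awful movie", 0), ("boring plot", 0)]
head = ClassifierHead(dim=2).fit(labeled)
print(head.predict_proba(encode("love this great film")))
```

Because the sentiment signal already lives in the pretrained vectors, four labelled examples are enough for the head to separate positive from negative text. That is the intuition behind the small-data results claimed for transfer learning.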

So, architecture-wise, we had a state-of-the-art architecture (the Transformer), and training-wise, we had the elegant concept of transfer learning. LLMs were the outcome of combining these two ideas.

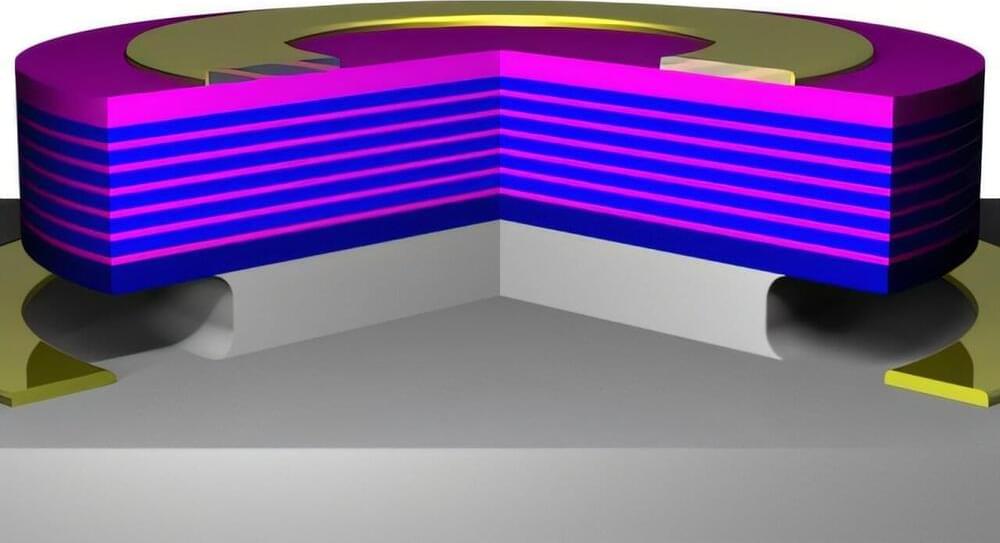

Scientists have developed the first electrically pumped continuous-wave semiconductor laser composed exclusively of elements from the fourth group of the periodic table—the “silicon group.”

Built from stacked ultrathin layers of silicon germanium-tin and germanium-tin, this new laser is the first of its kind grown directly on a silicon wafer, opening up new possibilities for on-chip integrated photonics. The findings have been published in Nature Communications. The team includes researchers from Forschungszentrum Jülich (FZJ), the University of Stuttgart, and the Leibniz Institute for High Performance Microelectronics (IHP), together with their French partner CEA-Leti.

The rapid growth of artificial intelligence and the Internet of Things is driving demand for increasingly powerful, energy-efficient hardware. Optical data transmission, which can carry vast amounts of data with minimal energy loss, is already the preferred method for distances above 1 meter and is proving advantageous even over shorter distances. This development points towards future microchips featuring low-cost photonic integrated circuits (PICs), offering significant cost savings and improved performance.

There are contexts where human cognitive and emotional intelligence takes precedence over AI, which plays a supporting role in decision-making without overriding human judgment. Here, AI “protects” human cognitive processes from things like bias, heuristic thinking, or decision-making that activates the brain’s reward system and leads to incoherent or skewed results. In this human-first mode, artificial integrity can, for instance, assist judicial processes by analyzing previous cases and their outcomes without substituting for a judge’s moral and ethical reasoning. For this to work well, the AI system would also have to show how it arrives at its conclusions and recommendations, taking into account cultural contexts or values that vary across regions and legal systems.

4 – Fusion Mode:

Artificial integrity in this mode is a synergy between human intelligence and AI capabilities, combining the best of both worlds. Autonomous vehicles operating in Fusion Mode would have AI managing the vehicle’s operations, such as speed, navigation, and obstacle avoidance, while human oversight, potentially through emerging technologies like Brain-Computer Interfaces (BCIs), would offer real-time input on complex ethical dilemmas. For instance, in unavoidable crash situations, a BCI could enable direct communication between the human brain and the AI, allowing ethical decisions to be made in real time and blending the AI’s precision with human moral reasoning. These kinds of advanced human-machine integration will require artificial integrity at its highest level of maturity: it would ensure not only technical excellence but also ethical robustness, guarding against any exploitation or manipulation of neural data while prioritizing human safety and autonomy.

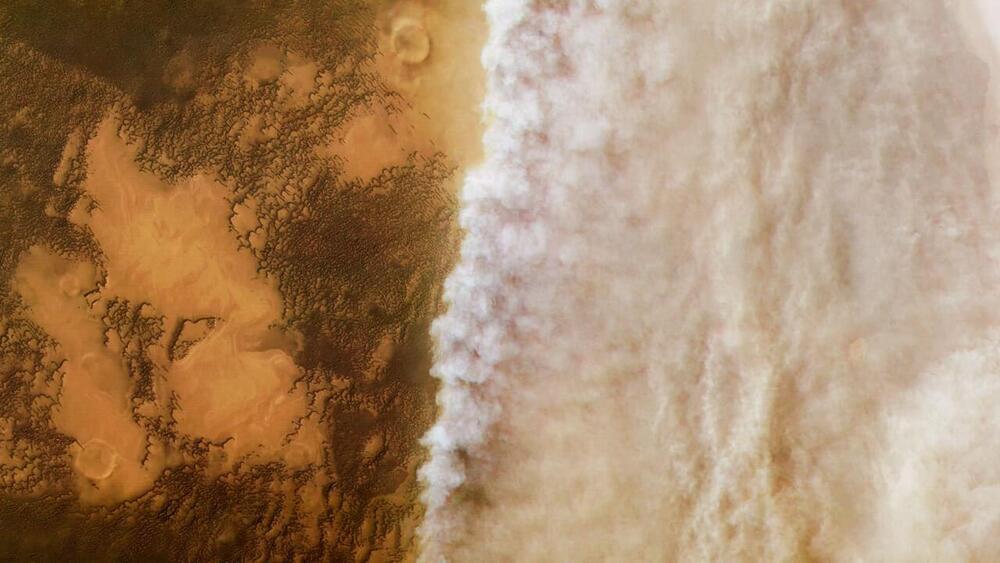

What processes are responsible for dust storms on Mars? That is the question a study presented today at the American Geophysical Union 2024 Fall Meeting hopes to address. In it, a pair of researchers from the University of Colorado Boulder (CU Boulder) investigated the causes of the massive dust storms on Mars, which periodically grow large enough to engulf the entire planet. The findings could help researchers predict Martian dust storms, which in turn could help current and future robotic missions, as well as future human crews on the Red Planet, survive these events.

“Dust storms have a significant effect on rovers and landers on Mars, not to mention what will happen during future crewed missions to Mars,” said Heshani Pieris, a PhD candidate in planetary science at CU Boulder and lead author of the study. “This dust is very light and sticks to everything.”

For the study, the researchers examined 15 (Earth) years of data from NASA’s Mars Reconnaissance Orbiter (MRO) to determine which processes kickstart dust storms. After analyzing the orbiter’s Martian surface-temperature data, the researchers found that 68 percent of large dust storms on Mars resulted from spikes in surface temperature during periods of increased sunlight through Mars’ thin atmosphere.

A year later, Smith got a myoelectric arm, a type of prosthetic controlled by electrical signals from the muscles of his residual limb. But he hardly used it because it was “very, very slow” and had a limited range of movements. He could open and close the hand, but not do much else. He tried other robotic arms over the years, but they had similar problems.

“They’re just not super functional,” he says. “There’s a massive delay between executing a function and then having the prosthetic actually do it. In my day-to-day life, it just became faster to figure out other ways to do things.”
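The open/close-only behavior described above is typical of conventional myoelectric control. Below is a minimal sketch of one classic scheme, two-site direct control. Electrodes over two muscles are read, the RMS amplitude of a short window of samples is computed for each site, and whichever site exceeds a threshold decides whether the hand opens or closes. The threshold value, the window contents, and the `hand_command` function are illustrative assumptions. They are not details of Smith's devices or of Phantom Neuro's system.

```python
import math

# Hypothetical activation threshold; real systems calibrate this per user and per site.
ACTIVATION_THRESHOLD = 0.3


def rms(window):
    """Root-mean-square amplitude of one window of EMG samples."""
    return math.sqrt(sum(s * s for s in window) / len(window))


def hand_command(extensor_window, flexor_window, threshold=ACTIVATION_THRESHOLD):
    """Two-site direct control: one muscle site opens the hand, the other closes it."""
    open_level = rms(extensor_window)
    close_level = rms(flexor_window)
    if max(open_level, close_level) < threshold:
        return "hold"  # neither muscle is contracting strongly enough
    return "open" if open_level >= close_level else "close"


print(hand_command([0.5, -0.6, 0.55], [0.05, -0.02, 0.04]))
```

Each decision waits for a full window of samples. Longer windows therefore smooth out noise but add latency, which is one possible contributor to the kind of delay Smith describes.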

Recently, he’s been trying out a new system by Austin-based startup Phantom Neuro that has the potential to provide more lifelike control of prosthetic limbs. The company is building a thin, flexible muscle implant to allow amputees a wider, more natural range of movement just by thinking about the gestures they want to make.

MovieNet mimics the brain to analyze dynamic scenes with exceptional accuracy.

By simulating how the human brain processes a moving world, Scripps researchers have achieved a breakthrough in AI: whereas many current models recognize only still images, MovieNet can analyze scenes that change over time.

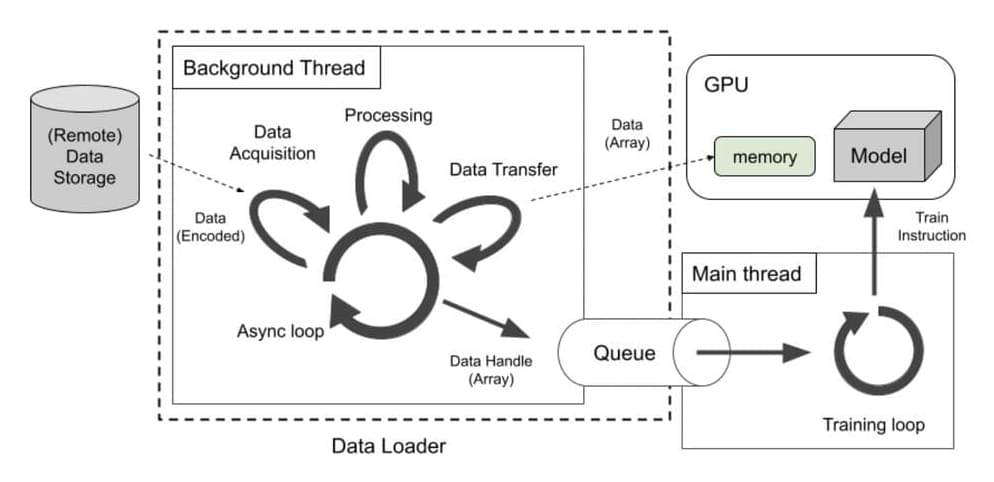

Training AI models today isn’t just about designing better architectures—it’s also about managing data efficiently. Modern models require vast datasets and need those datasets delivered quickly to GPUs and other accelerators. The problem? Traditional data loading systems often lag behind, slowing everything down. These older systems rely heavily on process-based methods that struggle to keep up with the demand, leading to GPU downtime, longer training sessions, and higher costs. This becomes even more frustrating when you’re trying to scale up or work with a mix of data types.

To tackle these issues, Meta AI has developed SPDL (Scalable and Performant Data Loading), a tool designed to improve how data is delivered during AI training. SPDL uses thread-based loading, which is a departure from the traditional process-based approach, to speed things up. It handles data from all sorts of sources—whether you’re pulling from the cloud or a local storage system—and integrates it seamlessly into your training workflow.
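SPDL's own API is not described here, so the following is only a generic Python illustration of thread-based loading, not SPDL code. A pool of threads fetches samples concurrently while a small number of upcoming batches is prefetched ahead of the consumer. `load_sample` is a hypothetical stand-in for a cloud or local-disk read. Threads suit this kind of I/O-bound work because Python releases the GIL while a thread waits on I/O (simulated here with `time.sleep`). They also avoid copying loaded data between separate worker processes.

```python
import time
from collections import deque
from concurrent.futures import ThreadPoolExecutor


def load_sample(index):
    """Hypothetical I/O-bound loader standing in for a cloud or local-disk read."""
    time.sleep(0.01)  # simulated latency; the GIL is released while a thread sleeps
    return {"index": index, "data": [index] * 4}


def threaded_batches(indices, batch_size, num_threads=8, prefetch=2):
    """Yield batches in order while up to `prefetch` later batches load in the background."""
    batches = [indices[i:i + batch_size] for i in range(0, len(indices), batch_size)]
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        pending = deque()  # futures for batches submitted but not yet yielded
        for batch in batches:
            pending.append([pool.submit(load_sample, i) for i in batch])
            if len(pending) > prefetch:
                # Block only on the oldest batch; newer ones keep loading meanwhile.
                yield [f.result() for f in pending.popleft()]
        while pending:
            yield [f.result() for f in pending.popleft()]


for batch in threaded_batches(list(range(10)), batch_size=4):
    print([sample["index"] for sample in batch])
```

Run as-is, this prints `[0, 1, 2, 3]`, `[4, 5, 6, 7]` and `[8, 9]`. Batch order is preserved even though the individual reads overlap in time.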

SPDL was built with scalability in mind. It works across distributed systems, so whether you’re training on a single GPU or a large cluster, SPDL has you covered. It’s also designed to work well with PyTorch, one of the most widely used AI frameworks, making it easier for teams to adopt. And since it’s open-source, anyone can take advantage of it or even contribute to its improvement.
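Training on a cluster usually means each worker (rank) must read a different part of the dataset. One common technique, similar in spirit to PyTorch's `DistributedSampler`, is to shuffle the indices with a seed shared by every rank and then give each rank every `world_size`-th index. The sketch below shows that idea in plain Python. `shard_indices` and its parameters are hypothetical and say nothing about how SPDL partitions data internally.

```python
import math
import random


def shard_indices(num_samples, rank, world_size, seed=0, epoch=0):
    """Return the dataset indices that worker `rank` (0-based) should load this epoch."""
    if num_samples <= 0 or not 0 <= rank < world_size:
        raise ValueError("need num_samples > 0 and 0 <= rank < world_size")
    # Every rank uses the same seed, so all ranks compute the same permutation.
    rng = random.Random(seed + epoch)
    indices = list(range(num_samples))
    rng.shuffle(indices)
    # Pad by wrapping around so every rank receives the same number of samples.
    total = math.ceil(num_samples / world_size) * world_size
    padded = [indices[i % num_samples] for i in range(total)]
    # Strided slicing gives each rank a disjoint set of positions in the padded list.
    return padded[rank:total:world_size]


shards = [shard_indices(10, rank, world_size=4) for rank in range(4)]
print(shards)
```

Changing `epoch` reshuffles the data each pass. Equal shard sizes keep ranks in lockstep, at the cost of a few duplicated samples when the dataset size is not a multiple of `world_size`.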