Surging emissions, battlefield algorithms, Trump’s chip war, and other predictions.

If you’re setting out to build an underwater robot that’s speedy, maneuverable and versatile, why not just copy what already works in the natural world? That’s exactly what China’s Beatbot has done, with its bio-inspired Amphibious RoboTurtle.

Unveiled in prototype form last week at CES, the autonomous robot is designed for applications including ecological research, environmental monitoring, and disaster response.

As such, it can be equipped with hardware such as a water sampling unit, GPS module, ultrasonic sensors, and AI-enabled cameras. The cameras reportedly allow it to perceive and react to changes in its environment, and to autonomously track and follow marine animals.

In today’s AI news, Macquarie will invest up to $5 billion in data centers being built by artificial-intelligence infrastructure company Applied Digital, adding to the Australian bank’s substantial AI-related investments.

And, President Joe Biden will issue an executive order on Tuesday to provide federal support to address massive energy needs for fast-growing advanced artificial intelligence data centers, the White House said.

The order calls for leasing federal sites owned by Defense and Energy departments to host gigawatt-scale AI data centers and new clean power facilities — to address enormous power needs on a short time frame.

Then, Microsoft is creating a new engineering group focused on artificial intelligence. Led by former Meta engineering chief Jay Parikh, the new CoreAI – Platform and Tools division will combine Microsoft’s Dev Div and AI platform teams to build an AI platform and tools.

In videos, Snowflake CEO Sridhar Ramaswamy announces a new “upskill” initiative on AI as the company works to address a global skills shortage. He joins Caroline Hyde on “Bloomberg Technology” to discuss the company’s investment in educating people on AI skills.

Then, Eleven Labs’ Louis Jordan demonstrates a conversational AI voice agent that can be integrated with Stripe to promote purchases, issue refunds, apply coupons and credits, and handle many other payment-related transactions with customers. Louis remarks that this is the future of in-app customer service.

And, did you know the U.S. nurse labor market is over $600 billion annually, but the dedicated software market for nurses is almost zero? In this episode, a16z General Partners Alex Rampell, David Haber, and Angela Strange discuss how AI is revolutionizing labor by automating tasks traditionally done by humans.

A tiny cooling device can automatically reset malfunctioning components of a quantum computer. Its performance suggests that manipulating heat could also enable other autonomous quantum devices.

Quantum computers aren’t yet fully practical because they make too many errors. In fact, if qubits – key components of this type of computer – accidentally heat up and become too energetic, they can end up in an erroneous state before the calculation even begins. One way to “reset” the qubits to their correct states is to cool them down.

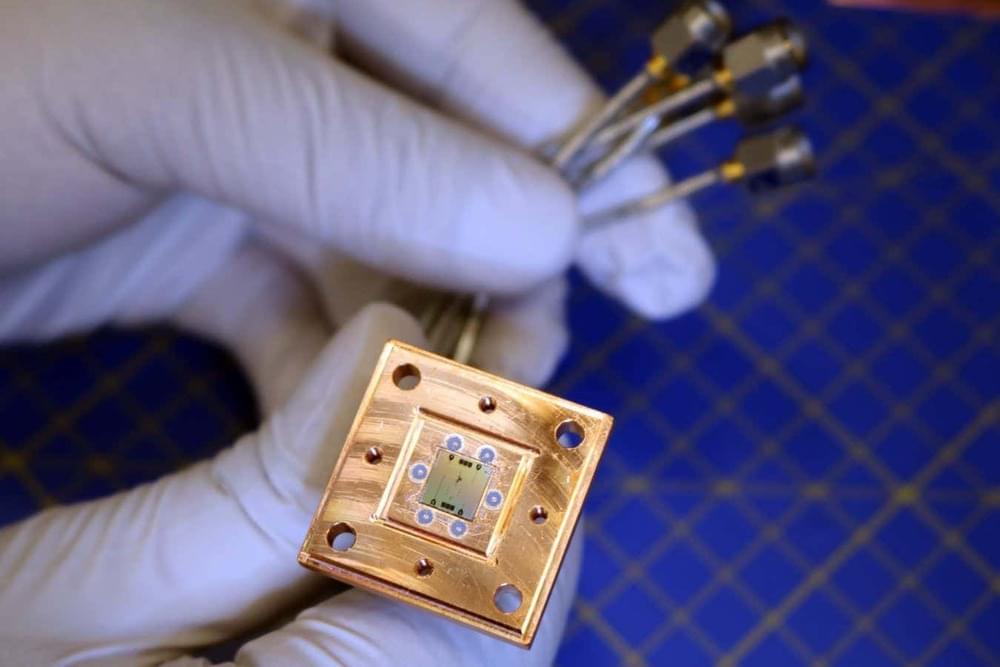

Image: Chalmers University of Technology, Lovisa Håkansson.

A tiny quantum “refrigerator” can ensure that a quantum computer’s calculations start off error-free – without requiring oversight or even new hardware.

Adobe has added numerous features to its Firefly GenAI suite since its introduction in 2023. The latest update enables companies to adjust images in bulk, by the thousands if necessary, according to Adobe. Known as Firefly Bulk Create, this tool aims to accelerate advertising and messaging campaigns by making image alterations more efficient. While some critics worry that this technology might erode human artistry in advertising, Adobe’s press release promotes the new tools as a means to cut through tedious work.

Increasingly, AI systems are interconnected, which is generating new complexities and risks. Managing these ecosystems effectively requires comprehensive training, technological infrastructures and processes designed to foster collaboration, and robust governance frameworks. Examples from healthcare, financial services, and the legal profession illustrate the challenges and ways to overcome them.

A Guide to Managing Interconnected AI Systems, by I. Glenn Cohen, Theodoros Evgeniou, and Martin Husovec.

The risks and complexities of these ecosystems require specific training, infrastructure, and governance.

The once shiny, exciting use cases for quantum technology may turn out to be pretty mundane if a small but courageous band of researchers proves their theories correct. After all, using quantum computers to find new drug treatments, navigate the world without global positioning systems, and optimize complex portfolios may seem downright boring compared to using them to explore the myriad questions that surround the hard problems of consciousness. Questions like: what the heck even is consciousness, and does it have a connection to quantum mechanics? And can quantum computing help make robots conscious, and should we make them conscious?

Tough questions, for sure, but here we’ll introduce a few researchers and entrepreneurs who are heading in that direction right now and leaning into what might turn out to be the ultimate quantum computing use case of all time: consciousness.

Hartmut Neven, a physicist and computational neuroscientist leading Google’s Quantum Artificial Intelligence Lab, believes quantum computing could help explore consciousness. Speaking to New Scientist, Neven outlined experiments and theories suggesting consciousness might emerge from quantum phenomena, such as entanglement and superposition, within the human brain. He proposes leveraging quantum computers to test these ideas, potentially expanding our understanding of how the mind interacts with the physical world.