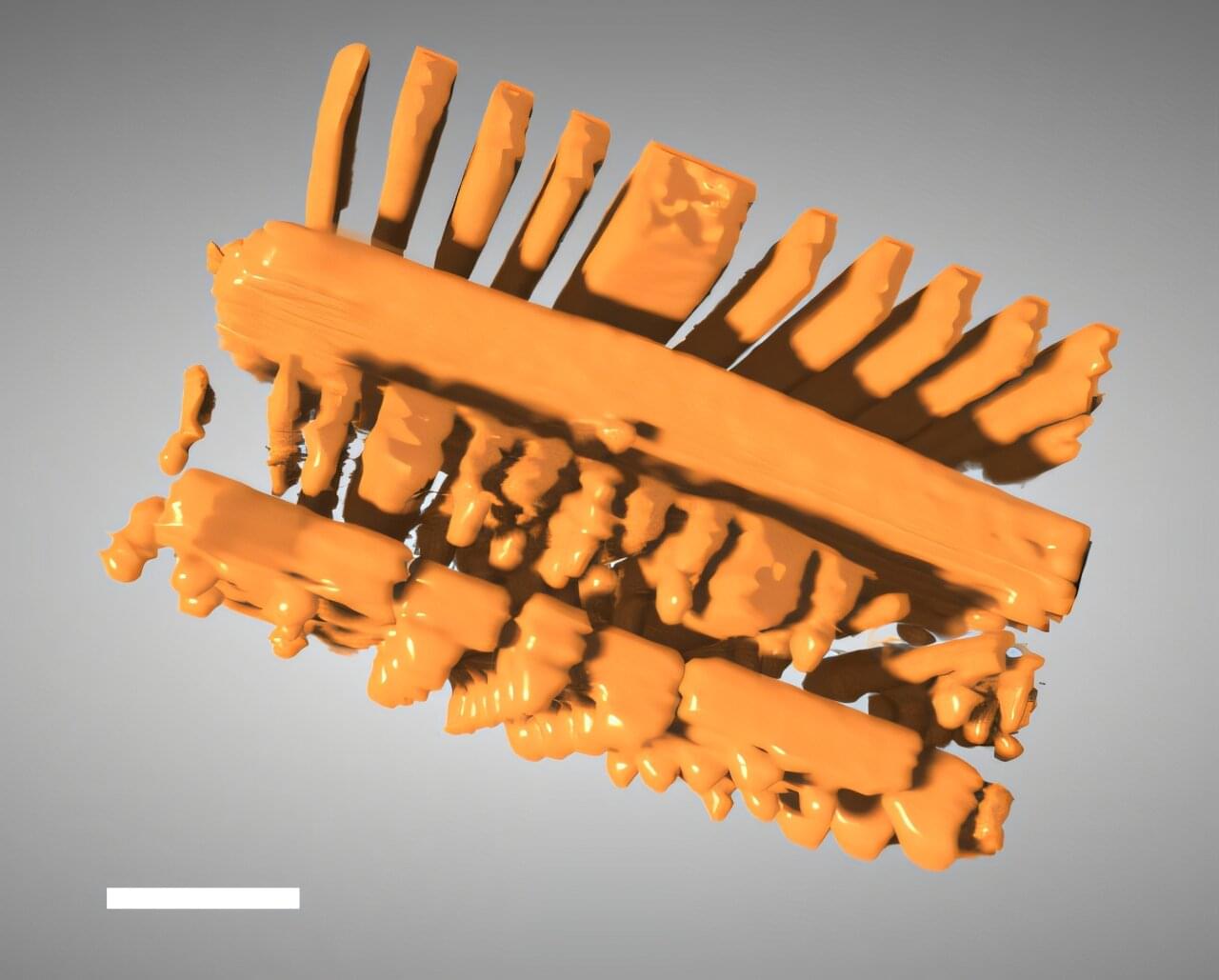

X-ray tomography is a powerful tool that lets scientists and engineers peer inside objects in 3D, from computer chips to advanced battery materials, without damaging or cutting into them. It’s the same basic method behind medical CT scans.

Scientists or technicians capture a series of X-ray images as an object is rotated, and advanced software then mathematically reconstructs the object’s 3D internal structure from those images. But imaging fine details on the nanoscale, like features on a microchip, requires a much higher spatial resolution than a typical medical CT scan provides: about 10,000 times higher.
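To make the rotate-and-reconstruct idea concrete, here is a minimal Python sketch of unfiltered back-projection on a single 2D slice. It is an illustrative assumption, not the software used at HXN (the article does not say which algorithm is used there), and the function names `sweep_angles`, `project`, and `back_project` are hypothetical. `project` simulates one parallel-beam X-ray image by summing pixel values along each ray at a given rotation angle; `back_project` smears every image back along its rays and averages over all angles. Practical reconstruction codes usually filter the projections first (filtered back-projection) or use iterative methods to remove the blur this simple version leaves behind.

```python
import math

# Hypothetical helper names for illustration only; not from any real beamline software.

def sweep_angles(count):
    """Evenly spaced rotation angles (radians) over half a turn, as in parallel-beam tomography."""
    return [math.pi * k / count for k in range(count)]

def detector_bin(x, y, angle, n):
    """Detector bin hit by the ray through pixel (x, y) at this rotation angle."""
    c = (n - 1) / 2  # the object rotates about the grid center
    t = (x - c) * math.cos(angle) + (y - c) * math.sin(angle)
    return math.floor(t + c + 0.5)  # round half up (avoids Python's banker's rounding)

def project(image, angle):
    """Simulate one X-ray image of a 2D slice: sum pixel values along parallel rays."""
    n = len(image)
    bins = [0.0] * n
    for y in range(n):
        for x in range(n):
            b = detector_bin(x, y, angle, n)
            if 0 <= b < n:  # rays that miss the detector are dropped
                bins[b] += image[y][x]
    return bins

def back_project(sinogram, angles, n):
    """Smear each projection back along its rays and average over all angles."""
    recon = [[0.0] * n for _ in range(n)]
    for bins, angle in zip(sinogram, angles):
        for y in range(n):
            for x in range(n):
                b = detector_bin(x, y, angle, n)
                if 0 <= b < n:
                    recon[y][x] += bins[b] / len(angles)
    return recon

if __name__ == "__main__":
    n = 21
    image = [[0.0] * n for _ in range(n)]
    image[5][14] = 1.0  # a single dense feature inside the slice
    angles = sweep_angles(36)
    sinogram = [project(image, a) for a in angles]  # one row per rotation step
    recon = back_project(sinogram, angles, n)
    peak = max((recon[y][x], y, x) for y in range(n) for x in range(n))
    print(peak[1:])  # prints (5, 14)
```

Reconstructing a single bright pixel this way recovers its position as the brightest point in the slice, surrounded by faint streaks; a full 3D volume would be built by repeating the process for each horizontal slice of the object.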

The Hard X-ray Nanoprobe (HXN) beamline at the National Synchrotron Light Source II (NSLS-II), a U.S. Department of Energy (DOE) Office of Science user facility at DOE’s Brookhaven National Laboratory, can achieve that kind of resolution using X-rays more than a billion times brighter than those used in traditional CT scans.