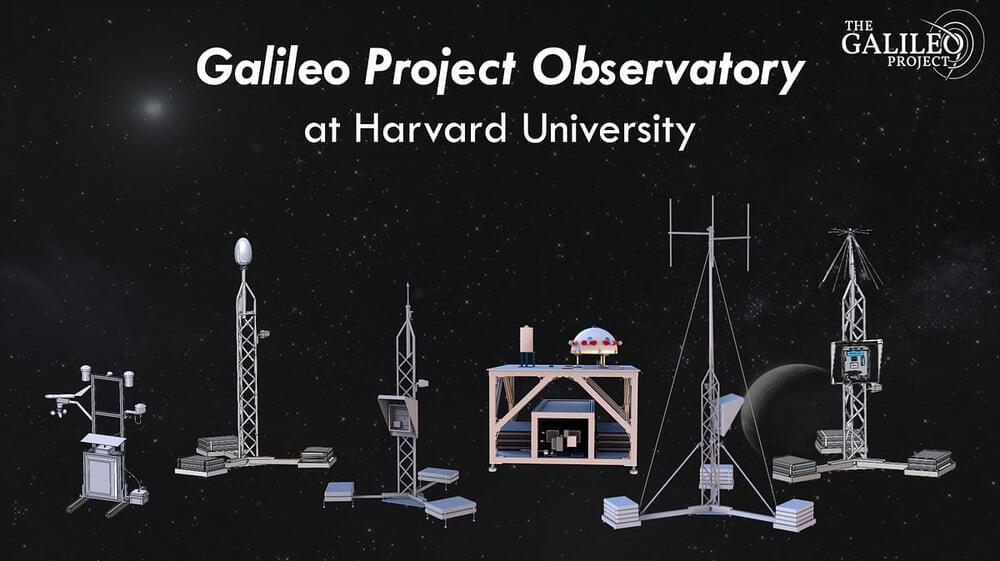

Over the past few months, staff of the House Committee on Oversight and Accountability asked me multiple times whether I would be available to testify before the U.S. Congress on Unidentified Anomalous Phenomena (UAPs). I therefore cleared my calendar for November 13, 2024 and prepared the following written statement. In the end, I was not called to testify, so I am posting my intended statement below. This week, the Galileo Project under my leadership is about to release unprecedented results from the commissioning data of its unique Observatory at Harvard University. Half a million objects were monitored in the sky, and their appearance was analyzed by state-of-the-art machine learning algorithms. Are any of them UAPs, and if so, what are their flight characteristics? Unfortunately, the chairs of the congressional hearing chose not to hear about these scientific results, nor about the scientific findings from our ocean expedition to the site of the first reported meteor from interstellar space.

Stay tuned for the first extensive paper on the commissioning data from the first Galileo Project Observatory, to be posted publicly in the coming days. Here is my public statement.