Researchers from the University of California, Santa Barbara (UCSB) designed a “material-like” collective of programmable micro-robots, which can behave like a fluid or bond together to create new solid structures. The technology could lead to the development of a new sub-field of robotics.

The UCSB scientists set out to design simple robots that could work together, much like an ant colony or other collective groups. The study, recently published in Science, describes micro-robotic units that can switch between a "fluidizing" state and a more rigid, "solid" one, depending on the rotational state of the robots.

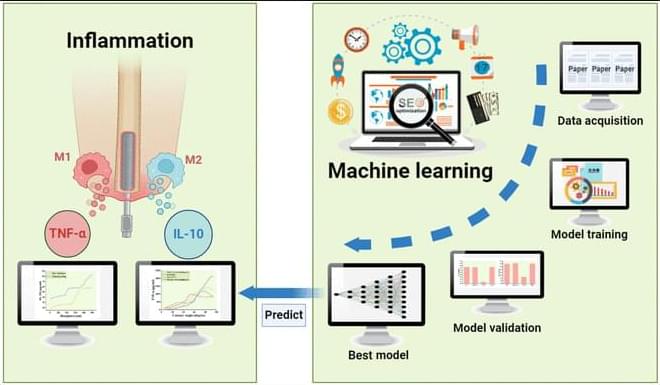

The idea is ripped straight from science fiction, with concepts like the T-1000 from Terminator 2: Judgment Day. The researchers say they turned this fictional vision into reality after studying embryonic morphogenesis, the biological process through which cells in a developing embryo change shape and rearrange to form tissues.