The MUSK model combines clinical notes and images to predict prognosis and immunotherapy response.

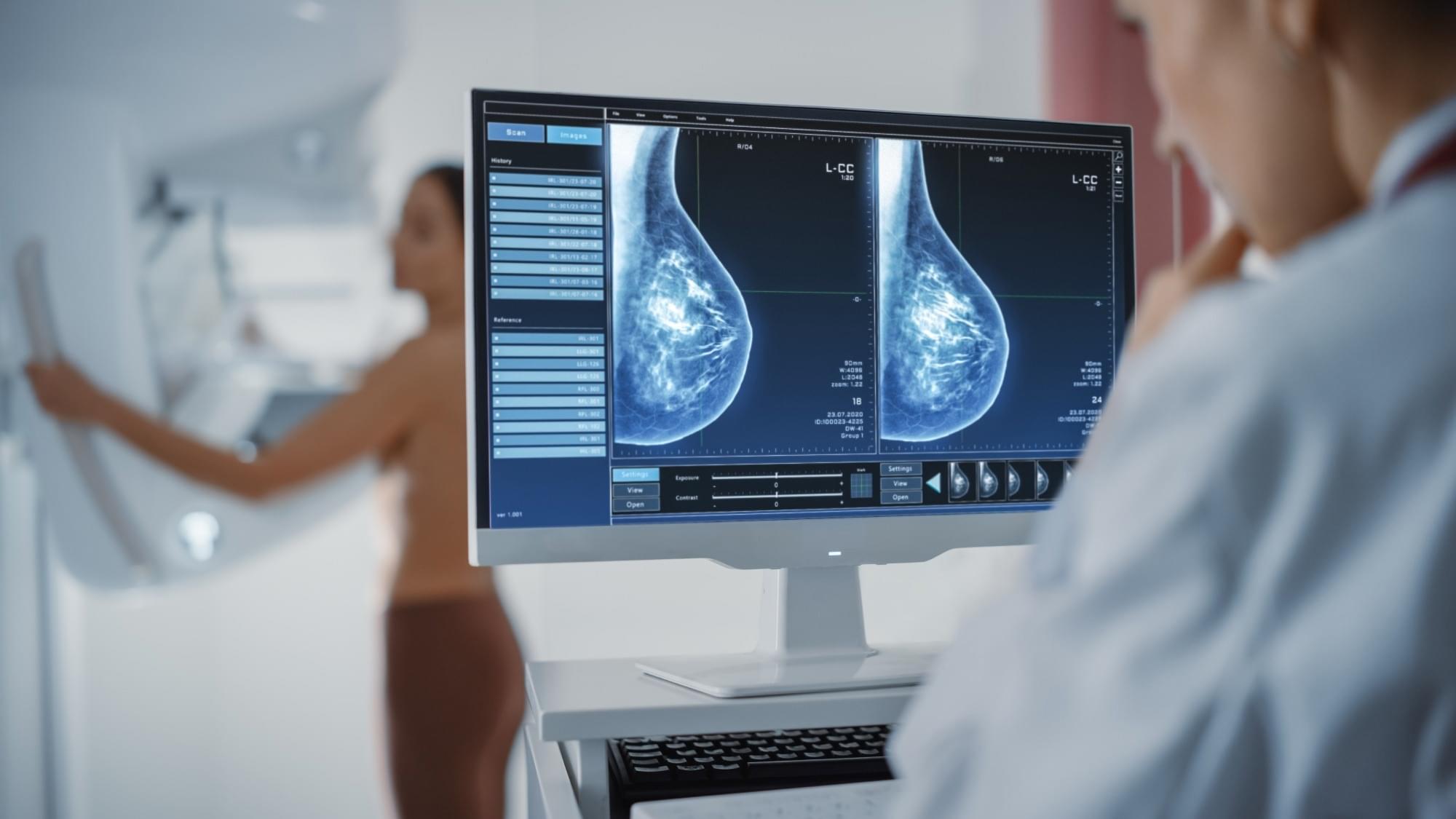

A few prospective studies have suggested that AI use in mammography screening increases cancer detection. However, cancer detection supported with AI should not predominately identify indolent cancers or occur at the expense of more false positives; instead, AI usage should increase the detection of clinically relevant cancers.

About the study

In the present study, researchers assessed the performance of cancer screening measures in the MASAI trial. The trial was designed to compare AI-supported mammography screening with standard double-reading.

The concept of computational consciousness and its potential impact on humanity is a topic of ongoing debate and speculation. While Artificial Intelligence (AI) has made significant advancements in recent years, we have not yet achieved a true computational consciousness capable of replicating the complexities of the human mind.

AI technologies are becoming increasingly sophisticated, performing tasks that were once exclusive to human intelligence. However, fundamental differences remain between AI and human consciousness. Human cognition is not purely computational; it encompasses emotions, subjective experiences, self-awareness, and other dimensions that machines have yet to replicate.

The rise of advanced AI systems will undoubtedly transform society, reshaping how we work, communicate, and interact with the digital world. AI enhances human capabilities, offering powerful tools for solving complex problems across diverse fields, from scientific research to healthcare. However, the ethical implications and potential risks associated with AI development must be carefully considered. Responsible AI deployment, emphasizing fairness, transparency, and accountability, is crucial.

In this evolving landscape, ETER9 introduces an avant-garde and experimental approach to AI-driven social networking. It redefines digital presence by allowing users to engage with AI entities known as ‘noids’ — autonomous digital counterparts designed to extend human presence beyond time and availability. Unlike traditional virtual assistants, noids act as independent extensions of their users, continuously learning from interactions to replicate communication styles and behaviors. These AI-driven entities engage with others, generate content, and maintain a user’s online presence, ensuring a persistent digital identity.

ETER9’s noids are not passive simulations; they dynamically evolve, fostering meaningful interactions and expanding the boundaries of virtual existence. Through advanced machine learning algorithms, they analyze user input, adapt to personal preferences, and refine their responses over time, creating an AI representation that closely mirrors its human counterpart. This unique integration of AI and social networking enables users to sustain an active online presence, even when they are not physically engaged.

The advent of autonomous digital counterparts in platforms like ETER9 raises profound questions about identity and authenticity in the digital age. While noids do not possess true consciousness, they provide a novel way for individuals to explore their own thoughts, behaviors, and social interactions. Acting as digital mirrors, they offer insights that encourage self-reflection and deeper understanding of one’s digital footprint.

As this frontier advances, it is essential to approach the development and interaction with digital counterparts thoughtfully. Issues such as privacy, data security, and ethical AI usage must be at the forefront. ETER9 is committed to ensuring user privacy and maintaining high ethical standards in the creation and functionality of its noids.

ETER9’s vision represents a paradigm shift in human-AI relationships. By bridging the gap between physical and virtual existence, it provides new avenues for creativity, collaboration, and self-expression. As we continue to explore the potential of AI-driven digital counterparts, it is crucial to embrace these innovations with mindful intent, recognizing that while AI can enhance and extend our digital presence, it is our humanity that remains the core of our existence.

As ETER9 pushes the boundaries of AI and virtual presence, one question lingers:

— Could these autonomous digital counterparts unlock deeper insights into human consciousness and the nature of our identity in the digital era?

© 2025 __Ӈ__

In December, the company gave access to developers and trusted testers, as well as wrapping some features into Google products, but this is a “general release,” according to Google.

The suite of models includes 2.0 Flash, which is billed as a “workhorse model, optimal for high-volume, high-frequency tasks at scale,” as well as 2.0 Pro Experimental for coding performance, and 2.0 Flash-Lite, which the company calls its “most cost-efficient model yet.”

Gemini Flash costs developers 10 cents per million tokens for text, image and video inputs, while Flash-Lite, its more cost-effective version, costs 0.75 of a cent for the same. Tokens refer to each individual unit of data that the model processes.

Figure AI CEO Brett Adcock promised to deliver “something no one has ever seen on a humanoid” in the next 30 days.

“We found that to solve embodied AI at scale in the real world, you have to vertically integrate robot AI.”

“We can’t outsource AI for the same reason we can’t outsource our hardware.”

Figure AI, a robotics company working to bring a general-purpose humanoid robot into commercial and residential use, announced Tuesday on X that it is exiting a deal with OpenAI. The Bay Area-based outfit has instead opted to focus on in-house AI owing to a “major breakthrough.” In conversation with TechCrunch afterward, founder and CEO Brett Adcock was tightlipped in terms of specifics, but he promised to deliver “something no one has ever seen on a humanoid” in the next 30 days.

OpenAI has been a longtime investor in Figure. The two companies announced a deal last year that aimed to “develop next generation AI models for humanoid robots.” At the same time, Figure announced a $675 million raise, valuing the company at $2.6 billion. Figure has so far raised a total of $1.5 billion from investors.

The news is a surprise, given the role that OpenAI plays in the cultural zeitgeist. Mere association with the company comes with a rapid profile boost. In August, the two companies announced that the Figure 2 humanoid would use OpenAI models for natural language communication.

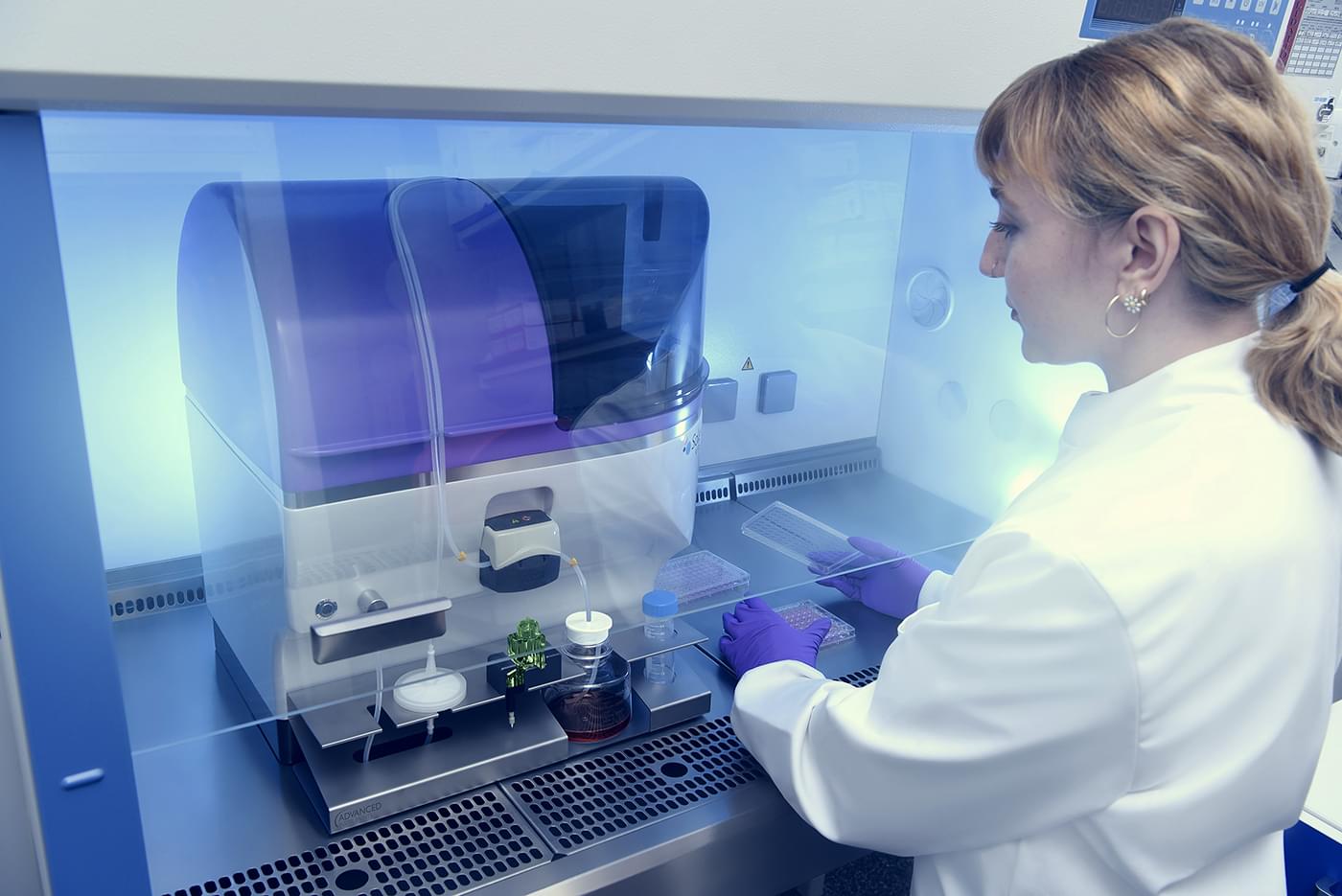

The rise of antimicrobial resistance has rendered many treatments ineffective, posing serious public health challenges. Intracellular infections are particularly difficult to treat since conventional antibiotics fail to neutralize pathogens hidden within human cells. However, designing molecules that penetrate human cells while retaining antimicrobial activity has historically been a major challenge. Here, we introduce APEXDUO, a multimodal artificial intelligence (AI) model for generating peptides with both cell-penetrating and antimicrobial properties. From a library of 50 million AI-generated compounds, we selected and characterized several candidates. Our lead, Turingcin, penetrated mammalian cells and eradicated intracellular Staphylococcus aureus. In mouse models of skin abscess and peritonitis, Turingcin reduced bacterial loads by up to two orders of magnitude. In sum, APEXDUO generated multimodal antibiotics, opening new avenues for molecular design.

CFN provides consulting services to Invaio Sciences and is a member of the Scientific Advisory Boards of Nowture S.L., Peptidus, European Biotech Venture Builder and Phare Bio. CFN is also a member of the Advisory Board for the Peptide Drug Hunting Consortium (PDHC). The de la Fuente Lab has received research funding or in-kind donations from United Therapeutics, Strata Manufacturing PJSC, and Procter & Gamble, none of which were used in support of this work. An invention disclosure associated with this work has been filed. All other authors declare no competing interests.