Using common kitchen ingredients such as citric acid and sodium bicarbonate, scientists have created an edible pneumatic battery and valve system to power soft robots.

Soft, biodegradable robots are used in fields such as environmental monitoring and targeted drug delivery, and are designed to disappear completely after performing their tasks. Their main drawback is that they rely on conventional batteries, such as lithium cells, which are toxic and non-biodegradable. Until now, no one had successfully developed a system that provides repeated, self-sustained motion using only edible materials.

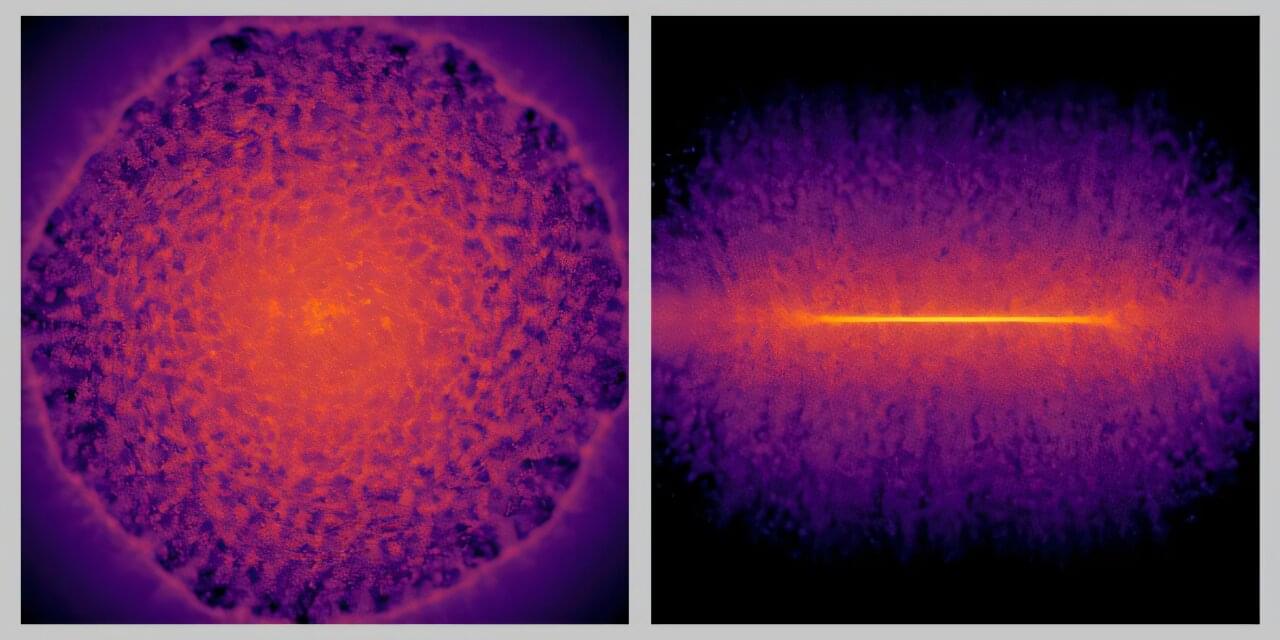

In a new paper published in the journal Advanced Science, researchers from Dario Floreano’s Laboratory of Intelligent Systems at EPFL in Switzerland describe how they developed a fully edible power source (battery), a valve system (controller), and an actuator (the robot’s muscle).