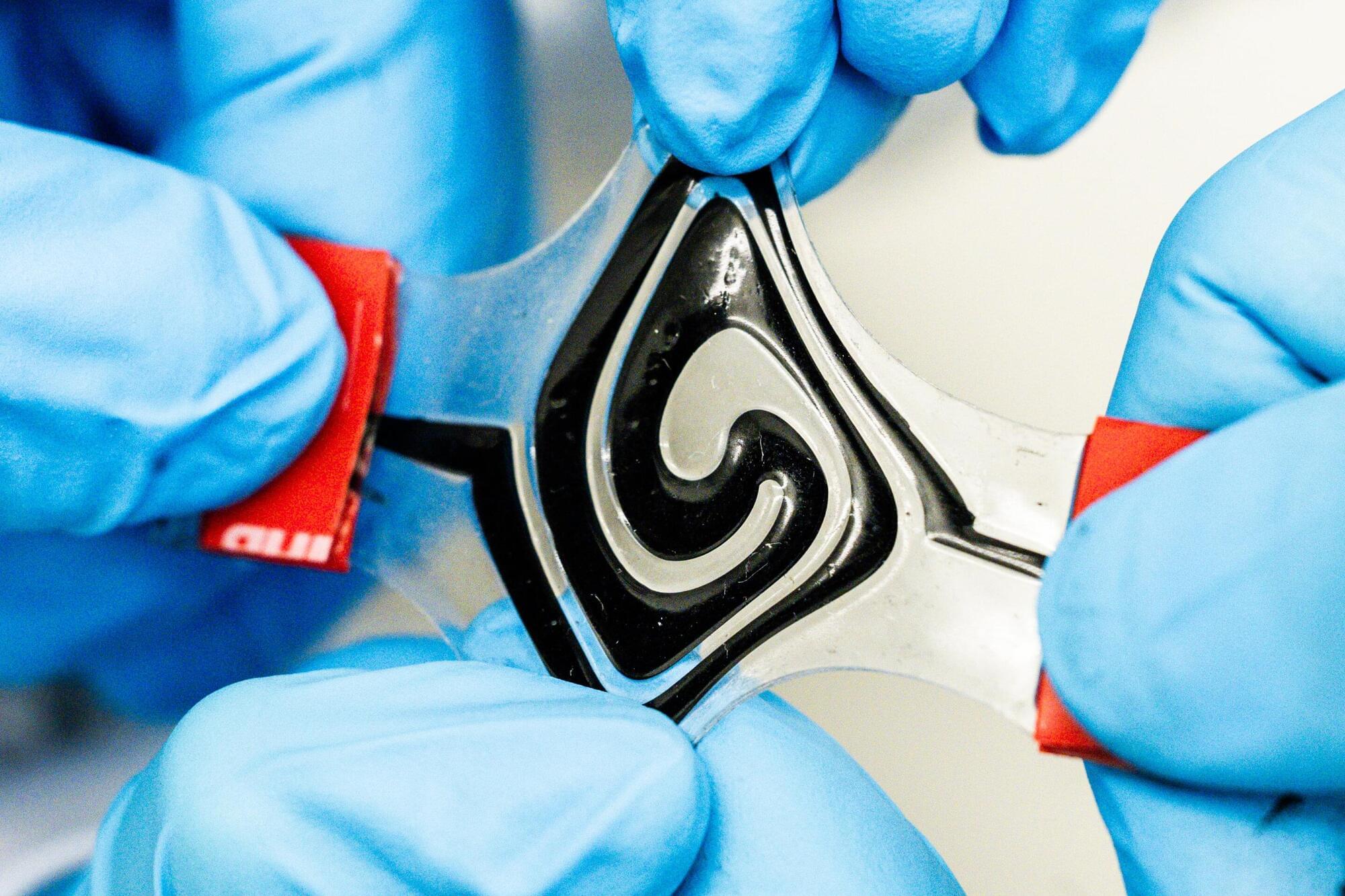

Using electrodes in a fluid form, researchers at Linköping University have developed a battery that can take any shape. This soft and conformable battery can be integrated into future technology in a completely new way. Their study has been published in the journal Science Advances.

“The texture is a bit like toothpaste. The material can, for instance, be used in a 3D printer to shape the battery as you please. This opens up for a new type of technology,” says Aiman Rahmanudin, assistant professor at Linköping University.

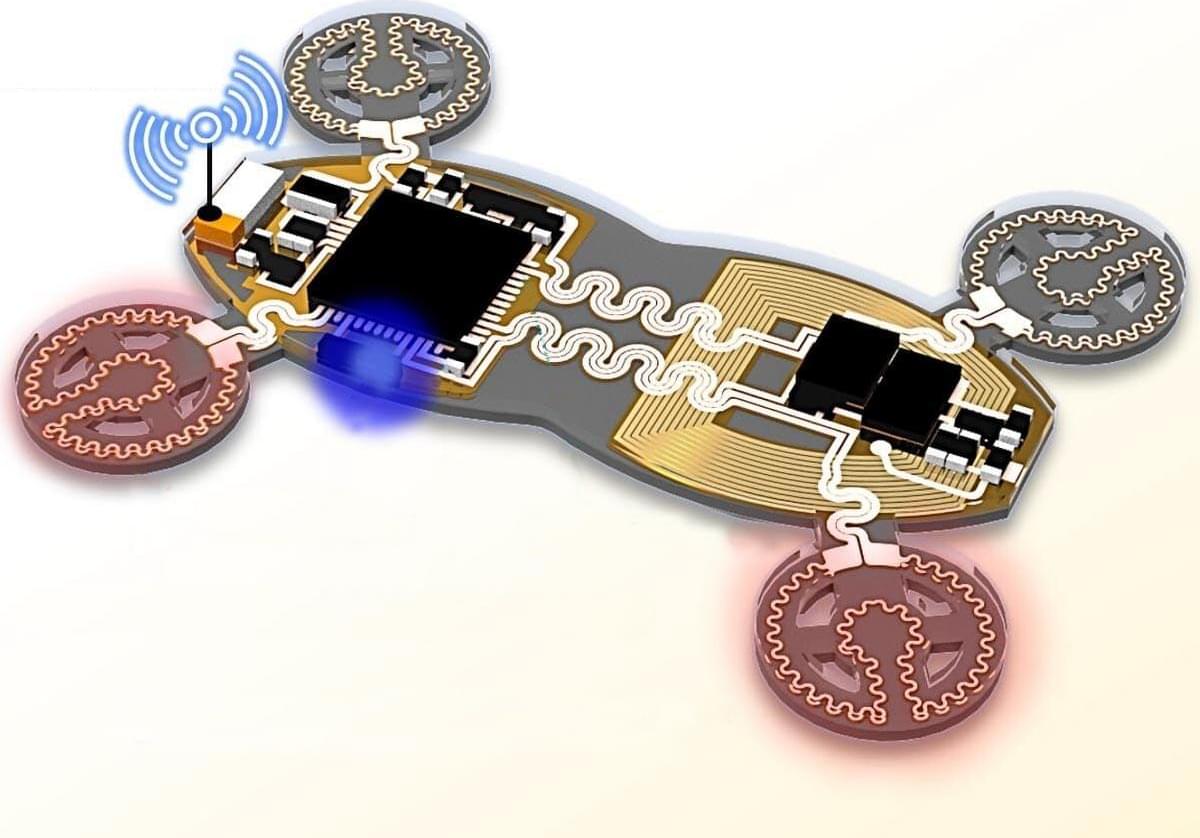

It is estimated that more than a trillion gadgets will be connected to the Internet in 10 years’ time. In addition to traditional technology such as mobile phones, smartwatches and computers, these could include wearable medical devices such as insulin pumps, pacemakers, hearing aids and various health monitoring sensors and, in the long term, soft robotics, e-textiles and connected nerve implants.