Firefly’s Blue Ghost Mission 1 set a new benchmark for commercial lunar exploration, lasting longer than any previous private mission and delivering 10 NASA instruments to the Moon. The mission achieved several firsts, including the deepest robotic thermal probe on another planetary body and the

Category: robotics/AI

Boston Dynamics Atlas AI Robot’s New Shocking Capabilities and Unitree G1 Humanoid’s Latest Record

The world-famous Atlas humanoid robot by Boston Dynamics is showing off its new look and capabilities.

According to the Massachusetts-based robotics leader, Atlas achieved its smooth movements and human-like walking gait with reinforcement learning and motion capture. Boston Dynamics released new demo footage as the company shared its progress at Nvidia’s GTC 2025 conference.

The AI-powered humanoid robot became a pop culture icon in the 2010s. Viral videos showcasing its increasingly agile and human-like capabilities amazed and horrified millions of people. Some viewers even worried that the robot would come to resent humanity for the harsh treatment it received from its creators.

Since becoming fully electric in 2024, Atlas has been more low-key, demonstrating industrial tasks rather than parkour.

Now it’s learning at an accelerated rate, and it’s showing off: it has a new look, a more powerful brain, and it’s doing stunts again. But the humanoid robotics market has changed, with dozens of firms, mostly from China and the United States, announcing new capabilities nearly every day.

Can Atlas come out ahead as it competes with Tesla, Figure AI, Agility Robotics and a growing array of Chinese firms racing to mass deploy their humanoids?

A marine robot that can swim, crawl and glide untethered in the deepest parts of the ocean

A team of mechanical engineers at Beihang University, working with a deep-sea diving specialist from the Chinese Academy of Sciences and a mechanic from Zhejiang University, all in China, has designed, built, and tested a marine robot that can swim, crawl, and glide untethered in the deepest parts of the ocean.

In their paper published in the journal Science Robotics, the group describes the factors that went into their design and how well their robot performed when tested.

Over the past several decades, underwater robots have become important tools for studying the world’s oceans and the creatures that live in them. More recently, researchers have noted that most such craft, especially those sent to the deepest parts of the sea, are cumbersome and not very agile.

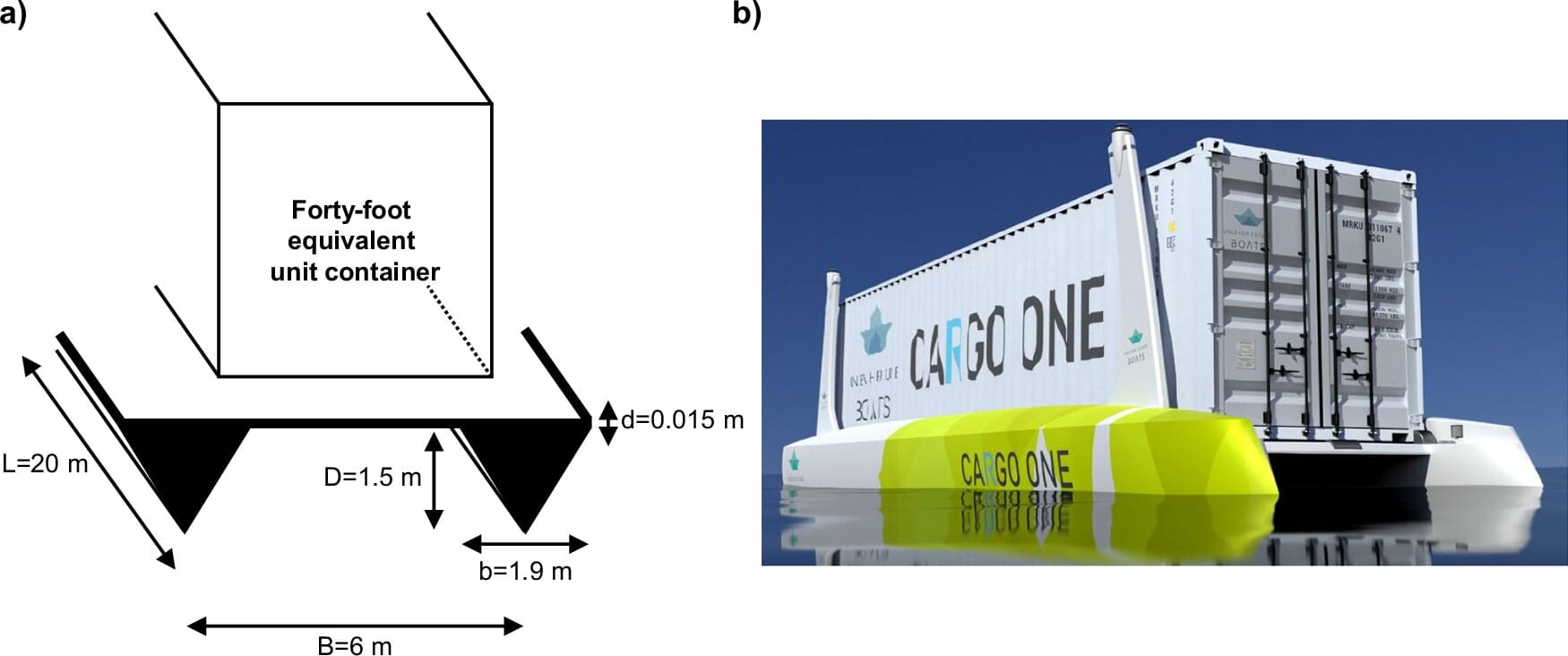

Hydrogen-powered boats offer climate-friendly alternative to road transport

Cargo transport is responsible for an enormous carbon footprint. Between 2010 and 2018, the transport sector generated about 14% of global greenhouse gas emissions. To address this problem, experts are looking for alternative, climate-friendly solutions—not only for road transport, but also for shipping, a sector in which powering cargo ships with batteries has proved especially difficult.

One promising but under-researched solution involves small, autonomous, hydrogen-powered boats that can partially replace long-haul trucking. A research team led by business chemist Prof Stephan von Delft from the University of Münster has now examined this missing link in a new study published in Communications Engineering.

The team has mathematically modeled such a boat for the first time and carried out a life-cycle and cost analysis. “Our calculations show in which scenarios hydrogen-powered boats are not only more sustainable but also more economical compared to established transport solutions,” explains von Delft. “They are therefore relevant for policymakers and industry.”

From robot swarms to human societies, good decisions rely on the right mix of perspectives

When groups make decisions—whether it’s humans aligning on a shared idea, robots coordinating tasks, or fish deciding where to swim—not everyone contributes equally. Some individuals have more reliable information, whereas others are more connected and have higher social influence.

A new study by researchers at the Cluster of Excellence Science of Intelligence shows that a combination of uncertainty and heterogeneity plays a crucial role in how groups reach consensus.

The findings, published in Scientific Reports by Vito Mengers, Mohsen Raoufi, Oliver Brock, Heiko Hamann, and Pawel Romanczuk, show that groups make faster and more accurate decisions when individuals factor in not only the opinions of their neighbors but also their confidence about these opinions and how connected those others are within the group.
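The idea of weighting neighbors by confidence and connectivity can be illustrated with a minimal sketch. It assumes a simple DeGroot-style averaging model, not the authors’ actual model: each agent repeatedly replaces its estimate with a weighted mean of its own and its neighbors’ estimates. The weighting rule `(1 / variance) * degree`, the six-agent network, and all numbers are hypothetical choices made for illustration.

```python
def consensus(estimates, variances, neighbors, rounds=200,
              use_confidence=True, use_connectivity=True):
    """Synchronous weighted averaging over each agent's closed neighborhood.

    Hypothetical weighting: agent j counts with weight
    (1 / variances[j]) * len(neighbors[j]); either factor can be switched off.
    """
    n = len(estimates)
    weights = []
    for j in range(n):
        w = 1.0
        if use_confidence:
            w /= variances[j]          # more certain agents count more
        if use_connectivity:
            w *= len(neighbors[j])     # better-connected agents count more
        weights.append(w)

    x = list(estimates)
    for _ in range(rounds):
        new_x = []
        for i in range(n):
            group = [i] + neighbors[i]  # the agent itself plus its neighbors
            total = sum(weights[j] for j in group)
            new_x.append(sum(weights[j] * x[j] for j in group) / total)
        x = new_x
    return x


# Illustrative network: ring 0-1-2-3-4-5-0 plus a chord 1-4.
NEIGHBORS = [[1, 5], [0, 2, 4], [1, 3], [2, 4], [1, 3, 5], [0, 4]]
TRUTH = 10.0
# Agents 0 and 3 are well informed (low variance); the others share a bias.
ESTIMATES = [10.0, 12.0, 12.0, 10.0, 12.0, 12.0]
VARIANCES = [0.1, 4.0, 4.0, 0.1, 4.0, 4.0]

naive = consensus(ESTIMATES, VARIANCES, NEIGHBORS,
                  use_confidence=False, use_connectivity=False)
weighted = consensus(ESTIMATES, VARIANCES, NEIGHBORS)
print(f"equal weights:     {naive[0]:.2f} (error {abs(naive[0] - TRUTH):.2f})")
print(f"confidence*degree: {weighted[0]:.2f} (error {abs(weighted[0] - TRUTH):.2f})")
```

In this toy setup both groups reach agreement, but the equal-weight group settles near 11.4, pulled toward the four poorly informed agents, while the weighted group settles near 10.12, close to the two well-informed ones. How confidence and connectivity should be combined is a modeling choice; the product used here is only one option.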

The way we train AI is fundamentally flawed

To understand exactly what’s going on, we need to back up a bit. Roughly put, building a machine-learning model involves training it on a large number of examples and then testing it on a bunch of similar examples that it has not yet seen. When the model passes the test, you’re done.

What the Google researchers point out is that this bar is too low. The training process can produce many different models that all pass the test but (and this is the crucial part) these models will differ in small, arbitrary ways, depending on things like the random values assigned to a neural network’s weights before training starts, the way training data is selected or represented, the number of training runs, and so on. These small, often random, differences are typically overlooked if they don’t affect how a model does on the test. But it turns out they can lead to huge variation in performance in the real world.

In other words, the process used to build most machine-learning models today cannot tell which models will work in the real world and which ones won’t.
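A deliberately contrived toy can make this concrete. It is not the Google researchers’ experiment. A logistic regression is trained on data where a second feature, `x2`, is an exact copy of the real signal `x1`. The training and test data cannot tell "rely on `x1`" apart from "rely on `x2`", so the random initialization alone decides the split. A shifted test set in which `x2` flips sign then exposes the difference. The data generator, seeds, and hyperparameters are all illustrative assumptions.

```python
import math
import random


def make_data(n, rng, shifted=False):
    """Hypothetical binary task: x1 carries the real signal. x2 is an exact
    copy of x1 in training/test data but flips sign under distribution shift."""
    data = []
    for _ in range(n):
        y = rng.randint(0, 1)
        x1 = (2 * y - 1) + rng.gauss(0, 0.5)
        x2 = -x1 if shifted else x1
        data.append((x1, x2, y))
    return data


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(data, seed, epochs=200, lr=0.1):
    """Full-batch gradient descent on logistic loss; only the initial
    weights depend on `seed`."""
    init = random.Random(seed)
    w1, w2, b = init.uniform(-2, 2), init.uniform(-2, 2), 0.0
    n = len(data)
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for x1, x2, y in data:
            err = sigmoid(w1 * x1 + w2 * x2 + b) - y
            g1 += err * x1
            g2 += err * x2
            gb += err
        # Because x1 == x2 in training, g1 == g2: the data never constrain
        # w1 - w2, so it stays (up to rounding) at its random initial value.
        w1 -= lr * g1 / n
        w2 -= lr * g2 / n
        b -= lr * gb / n
    return w1, w2, b


def accuracy(model, data):
    w1, w2, b = model
    hits = sum((w1 * x1 + w2 * x2 + b > 0) == (y == 1) for x1, x2, y in data)
    return hits / len(data)


data_rng = random.Random(0)
train_set = make_data(500, data_rng)
test_set = make_data(500, data_rng)
shifted_set = make_data(500, data_rng, shifted=True)

for seed in range(5):
    model = train(train_set, seed)
    print(f"seed={seed}  test acc={accuracy(model, test_set):.3f}  "
          f"shifted acc={accuracy(model, shifted_set):.3f}")
```

Every seed passes the ordinary test with similar accuracy. On the shifted data, accuracy depends almost entirely on the sign of `w1 - w2` at initialization: it ranges from near-perfect to near-zero. This is the "passes the test, fails in the world" gap in its simplest form.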

Exposing Mark Rober’s Tesla Crash Story

Mark Rober’s Tesla crash story and video on self-driving cars face significant scrutiny for authenticity, bias, and misleading claims, raising doubts about his testing methods and the reliability of the technology he promotes.

Questions to inspire discussion.

Tesla Autopilot and Testing

🚗 Q: What was the main criticism of Mark Rober’s Tesla crash video? A: The video was criticized for not using Full Self-Driving mode, even though the thumbnail showed it and it can be activated the same way as Autopilot.

🔍 Q: How did Mark Rober respond to the criticism about not using Full Self-Driving mode? A: Rober called it a distinction without a difference and said he was confident the results would be the same if he reran the experiment in Full Self-Driving mode.

🛑 Q: What might have caused Autopilot to disengage during the test?