Mitochondrial dysfunction is associated with various chronic diseases and cancers, including neurodegenerative diseases and metabolic syndrome. For scientists, gently extracting a single mitochondrion from a living cell, without causing damage and without the guidance of fluorescent markers, has long been a challenge akin to threading a needle in a storm.

A team led by Prof. Richard Gu Hongri, Assistant Professor in the Division of Integrative Systems and Design of the Academy of Interdisciplinary Studies at The Hong Kong University of Science and Technology (HKUST), in collaboration with experts in mechanical engineering and biomedicine, has developed an automated robotic nanoprobe.

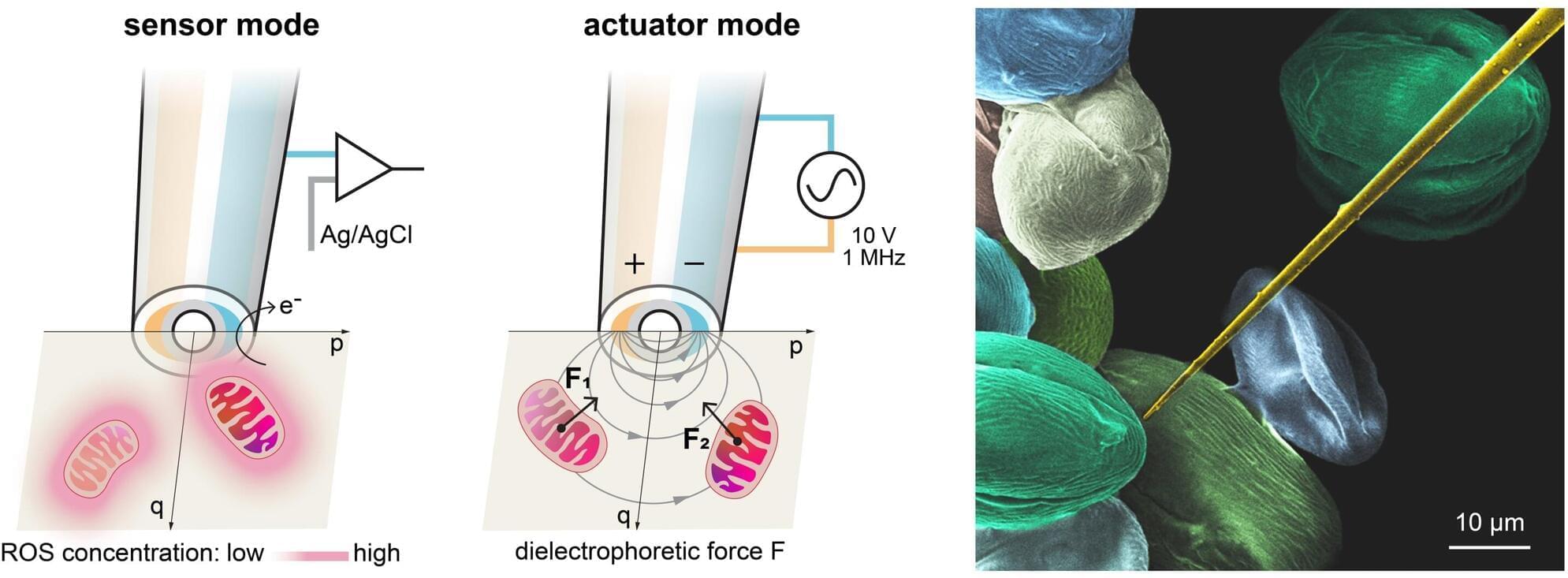

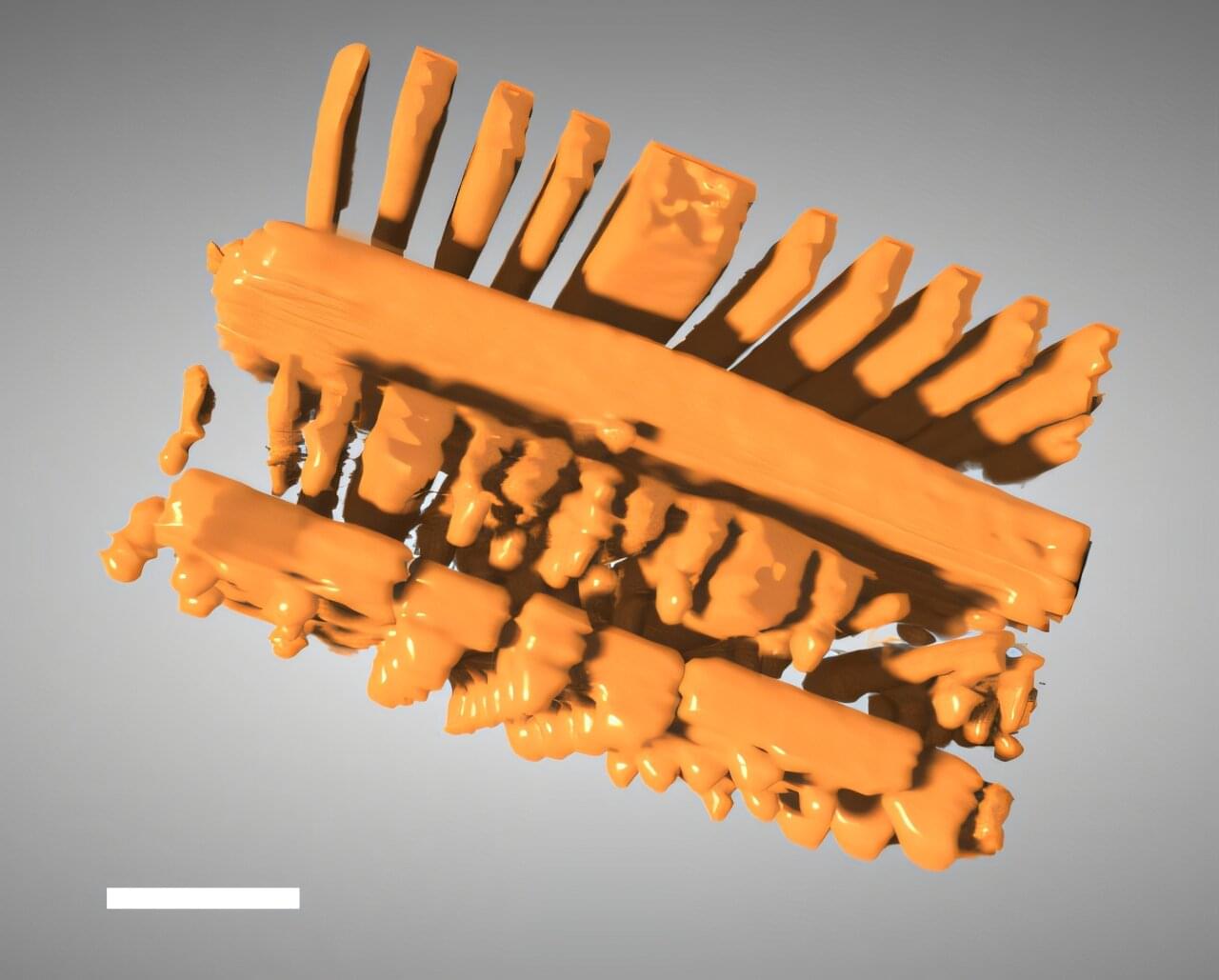

The device can navigate within a living cell, sense metabolic whispers in real time, and pluck an individual mitochondrion for analysis, all without the need for fluorescent labeling. It is the world’s first cell-manipulation nanoprobe to integrate both sensors and actuators at its tip, enabling a micro-robot to navigate autonomously inside live cells. The breakthrough holds great promise for advancing future treatment strategies for chronic diseases and cancer.