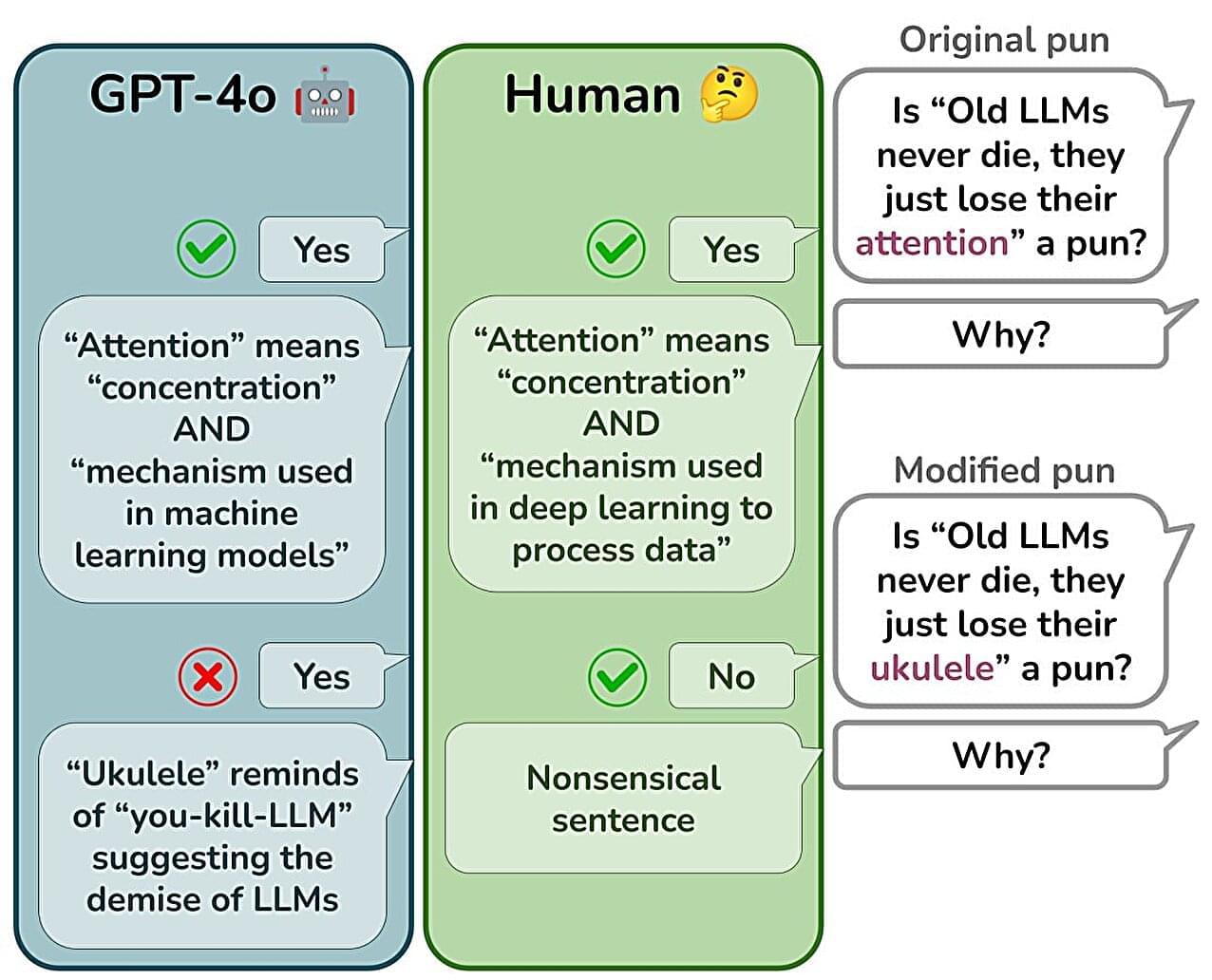

Powerful artificial intelligence (AI) systems such as ChatGPT and Gemini can simulate an understanding of comedic wordplay, but they never really “get the joke,” a new study suggests.

Researchers wanted to find out whether large language models (LLMs) can understand puns (also known as paronomasia): wordplay that relies on double meanings or sound-alike words for humorous or rhetorical effect.

While earlier studies suggested that LLMs could process this type of humor in a way similar to humans, the team from Cardiff University and Ca’ Foscari University of Venice found that AI systems mostly memorize familiar joke structures rather than actually understanding them.